使用Python实现深度学习模型:Transformer模型

Transformer模型自提出以来,已经成为深度学习领域,尤其是自然语言处理(NLP)中的一种革命性模型。与传统的循环神经网络(RNN)和长短期记忆网络(LSTM)不同,Transformer完全依赖于注意力机制来捕捉序列中的依赖关系。这使得它能够更高效地处理长序列数据。在本文中,我们将详细介绍Transformer模型的基本原理,并使用Python和TensorFlow/Keras实现一个简单的Transformer模型。

1. Transformer模型简介

Transformer模型由编码器(Encoder)和解码器(Decoder)组成,每个编码器和解码器层都由多头自注意力机制和前馈神经网络(Feed-Forward Neural Network)组成。

1.1 编码器(Encoder)

编码器的主要组件包括:

- 自注意力层(Self-Attention Layer):计算序列中每个位置对其他位置的注意力分数。

- 前馈神经网络(Feed-Forward Neural Network):对每个位置的表示进行独立的非线性变换。

1.2 解码器(Decoder)

解码器与编码器类似,但有额外的编码器-解码器注意力层,用于捕捉解码器输入与编码器输出之间的关系。

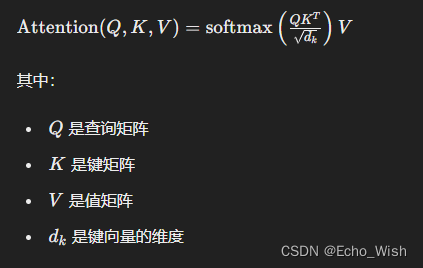

1.3 注意力机制

注意力机制的核心公式如下:

2. 使用Python和TensorFlow/Keras实现Transformer模型

下面我们将使用Python和TensorFlow/Keras实现一个简单的Transformer模型,用于机器翻译任务。

2.1 安装TensorFlow

首先,确保安装了TensorFlow:

pip install tensorflow

2.2 数据准备

我们使用TensorFlow内置的英文-德文翻译数据集。

import tensorflow as tf

import tensorflow_datasets as tfds# 加载数据集

examples, metadata = tfds.load('ted_hrlr_translate/pt_to_en', with_info=True, as_supervised=True)

train_examples, val_examples = examples['train'], examples['validation']# 准备tokenizer

tokenizer_en = tfds.deprecated.text.SubwordTextEncoder.build_from_corpus((en.numpy() for pt, en in train_examples), target_vocab_size=2**13)

tokenizer_pt = tfds.deprecated.text.SubwordTextEncoder.build_from_corpus((pt.numpy() for pt, en in train_examples), target_vocab_size=2**13)# 定义tokenizer函数

def encode(lang1, lang2):lang1 = [tokenizer_pt.vocab_size] + tokenizer_pt.encode(lang1.numpy()) + [tokenizer_pt.vocab_size+1]lang2 = [tokenizer_en.vocab_size] + tokenizer_en.encode(lang2.numpy()) + [tokenizer_en.vocab_size+1]return lang1, lang2def tf_encode(pt, en):result_pt, result_en = tf.py_function(encode, [pt, en], [tf.int64, tf.int64])result_pt.set_shape([None])result_en.set_shape([None])return result_pt, result_en# 设置缓冲区大小

BUFFER_SIZE = 20000

BATCH_SIZE = 64# 预处理数据

train_dataset = train_examples.map(tf_encode)

train_dataset = train_dataset.cache()

train_dataset = train_dataset.shuffle(BUFFER_SIZE).padded_batch(BATCH_SIZE)

train_dataset = train_dataset.prefetch(tf.data.experimental.AUTOTUNE)val_dataset = val_examples.map(tf_encode)

val_dataset = val_dataset.padded_batch(BATCH_SIZE)

2.3 实现Transformer模型组件

我们首先实现一些基础组件,如位置编码(Positional Encoding)和多头注意力(Multi-Head Attention)。

2.3.1 位置编码

位置编码用于在序列中加入位置信息。

import numpy as npdef get_angles(pos, i, d_model):angle_rates = 1 / np.power(10000, (2 * (i // 2)) / np.float32(d_model))return pos * angle_ratesdef positional_encoding(position, d_model):angle_rads = get_angles(np.arange(position)[:, np.newaxis],np.arange(d_model)[np.newaxis, :],d_model)angle_rads[:, 0::2] = np.sin(angle_rads[:, 0::2])angle_rads[:, 1::2] = np.cos(angle_rads[:, 1::2])pos_encoding = angle_rads[np.newaxis, ...]return tf.cast(pos_encoding, dtype=tf.float32)

2.3.2 多头注意力

class MultiHeadAttention(tf.keras.layers.Layer):def __init__(self, d_model, num_heads):super(MultiHeadAttention, self).__init__()self.num_heads = num_headsself.d_model = d_modelassert d_model % self.num_heads == 0self.depth = d_model // self.num_headsself.wq = tf.keras.layers.Dense(d_model)self.wk = tf.keras.layers.Dense(d_model)self.wv = tf.keras.layers.Dense(d_model)self.dense = tf.keras.layers.Dense(d_model)def split_heads(self, x, batch_size):x = tf.reshape(x, (batch_size, -1, self.num_heads, self.depth))return tf.transpose(x, perm=[0, 2, 1, 3])def call(self, v, k, q, mask):batch_size = tf.shape(q)[0]q = self.wq(q) # (batch_size, seq_len, d_model)k = self.wk(k) # (batch_size, seq_len, d_model)v = self.wv(v) # (batch_size, seq_len, d_model)q = self.split_heads(q, batch_size) # (batch_size, num_heads, seq_len_q, depth)k = self.split_heads(k, batch_size) # (batch_size, num_heads, seq_len_k, depth)v = self.split_heads(v, batch_size) # (batch_size, num_heads, seq_len_v, depth)scaled_attention, attention_weights = self.scaled_dot_product_attention(q, k, v, mask)scaled_attention = tf.transpose(scaled_attention, perm=[0, 2, 1, 3])concat_attention = tf.reshape(scaled_attention, (batch_size, -1, self.d_model))output = self.dense(concat_attention)return output, attention_weightsdef scaled_dot_product_attention(self, q, k, v, mask):matmul_qk = tf.matmul(q, k, transpose_b=True)dk = tf.cast(tf.shape(k)[-1], tf.float32)scaled_attention_logits = matmul_qk / tf.math.sqrt(dk)if mask is not None:scaled_attention_logits += (mask * -1e9)attention_weights = tf.nn.softmax(scaled_attention_logits, axis=-1)output = tf.matmul(attention_weights, v)return output, attention_weights

2.4 构建Transformer模型

def point_wise_feed_forward_network(d_model, dff):return tf.keras.Sequential([tf.keras.layers.Dense(dff, activation='relu'),tf.keras.layers.Dense(d_model)])class EncoderLayer(tf.keras.layers.Layer):def __init__(self, d_model, num_heads, dff, rate=0.1):super(EncoderLayer, self).__init__()self.mha = MultiHeadAttention(d_model, num_heads)self.ffn = point_wise_feed_forward_network(d_model, dff)self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)self.dropout1 = tf.keras.layers.Dropout(rate)self.dropout2 = tf.keras.layers.Dropout(rate)def call(self, x, training, mask):attn_output, _ = self.mha(x, x, x, mask)attn_output = self.dropout1(attn_output, training=training)out1 = self.layernorm1(x + attn_output)ffn_output = self.ffn(out1)ffn_output = self.dropout2(ffn_output, training=training)out2 = self.layernorm2(out1 + ffn_output)return out2class DecoderLayer(tf.keras.layers.Layer):def __init__(self, d_model, num_heads, dff, rate=0.1):super(DecoderLayer, self).__init__()self.mha1 = MultiHeadAttention(d_model, num_heads)self.mha2 = MultiHeadAttention(d_model, num_heads)self.ffn = point_wise_feed_forward_network(d_model, dff)self.layernorm1 = tf.keras.layers.LayerNormalization(epsilon=1e-6)self.layernorm2 = tf.keras.layers.LayerNormalization(epsilon=1e-6)self.layernorm3 = tf.keras.layers.LayerNormalization(epsilon=1e-6)self.dropout1 = tf.keras.layers.Dropout(rate)self.dropout2 = tf.keras.layers.Dropout(rate)self.dropout3 = tf.keras.layers.Dropout(rate)def call(self, x, enc_output, training, look_ahead_mask, padding_mask):attn1, attn_weights_block1 = self.mha1(x, x, x, look_ahead_mask)attn1 = self.dropout1(attn1, training=training)out1 = self.layernorm1(x + attn1)attn2, attn_weights_block2 = self.mha2(enc_output, enc_output, out1, padding_mask)attn2 = self.dropout2(attn2, training=training)out2 = self.layernorm2(out1 + attn2)ffn_output = self.ffn(out2)ffn_output = self.dropout3(ffn_output, training=training)out3 = self.layernorm3(out2 + ffn_output)return out3, attn_weights_block1, attn_weights_block2class Encoder(tf.keras.layers.Layer):def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size, maximum_position_encoding, rate=0.1):super(Encoder, self).__init__()self.d_model = d_modelself.num_layers = num_layersself.embedding = tf.keras.layers.Embedding(input_vocab_size, d_model)self.pos_encoding = positional_encoding(maximum_position_encoding, d_model)self.enc_layers = [EncoderLayer(d_model, num_heads, dff, rate) for _ in range(num_layers)]self.dropout = tf.keras.layers.Dropout(rate)def call(self, x, training, mask):seq_len = tf.shape(x)[1]x = self.embedding(x)x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))x += self.pos_encoding[:, :seq_len, :]x = self.dropout(x, training=training)for i in range(self.num_layers):x = self.enc_layers[i](x, training, mask)return xclass Decoder(tf.keras.layers.Layer):def __init__(self, num_layers, d_model, num_heads, dff, target_vocab_size, maximum_position_encoding, rate=0.1):super(Decoder, self).__init__()self.d_model = d_modelself.num_layers = num_layersself.embedding = tf.keras.layers.Embedding(target_vocab_size, d_model)self.pos_encoding = positional_encoding(maximum_position_encoding, d_model)self.dec_layers = [DecoderLayer(d_model, num_heads, dff, rate) for _ in range(num_layers)]self.dropout = tf.keras.layers.Dropout(rate)def call(self, x, enc_output, training, look_ahead_mask, padding_mask):seq_len = tf.shape(x)[1]attention_weights = {}x = self.embedding(x)x *= tf.math.sqrt(tf.cast(self.d_model, tf.float32))x += self.pos_encoding[:, :seq_len, :]x = self.dropout(x, training=training)for i in range(self.num_layers):x, block1, block2 = self.dec_layers[i](x, enc_output, training, look_ahead_mask, padding_mask)attention_weights[f'decoder_layer{i+1}_block1'] = block1attention_weights[f'decoder_layer{i+1}_block2'] = block2return x, attention_weightsclass Transformer(tf.keras.Model):def __init__(self, num_layers, d_model, num_heads, dff, input_vocab_size, target_vocab_size, pe_input, pe_target, rate=0.1):super(Transformer, self).__init__()self.encoder = Encoder(num_layers, d_model, num_heads, dff, input_vocab_size, pe_input, rate)self.decoder = Decoder(num_layers, d_model, num_heads, dff, target_vocab_size, pe_target, rate)self.final_layer = tf.keras.layers.Dense(target_vocab_size)def call(self, inp, tar, training, enc_padding_mask, look_ahead_mask, dec_padding_mask):enc_output = self.encoder(inp, training, enc_padding_mask)dec_output, attention_weights = self.decoder(tar, enc_output, training, look_ahead_mask, dec_padding_mask)final_output = self.final_layer(dec_output)return final_output, attention_weights# 设置Transformer参数

num_layers = 4

d_model = 128

dff = 512

num_heads = 8

input_vocab_size = tokenizer_pt.vocab_size + 2

target_vocab_size = tokenizer_en.vocab_size + 2

dropout_rate = 0.1# 创建Transformer模型

transformer = Transformer(num_layers, d_model, num_heads, dff, input_vocab_size, target_vocab_size, pe_input=1000, pe_target=1000, rate=dropout_rate)

2.5 定义损失函数和优化器

loss_object = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True, reduction='none')def loss_function(real, pred):mask = tf.math.logical_not(tf.math.equal(real, 0))loss_ = loss_object(real, pred)mask = tf.cast(mask, dtype=loss_.dtype)loss_ *= maskreturn tf.reduce_sum(loss_)/tf.reduce_sum(mask)optimizer = tf.keras.optimizers.Adam(learning_rate=0.001)

2.6 训练模型

# 定义train_step

@tf.function

def train_step(inp, tar):tar_inp = tar[:, :-1]tar_real = tar[:, 1:]enc_padding_mask, look_ahead_mask, dec_padding_mask = create_masks(inp, tar_inp)with tf.GradientTape() as tape:predictions, _ = transformer(inp, tar_inp, True, enc_padding_mask, look_ahead_mask, dec_padding_mask)loss = loss_function(tar_real, predictions)gradients = tape.gradient(loss, transformer.trainable_variables)optimizer.apply_gradients(zip(gradients, transformer.trainable_variables))return loss# 训练模型

EPOCHS = 20

for epoch in range(EPOCHS):total_loss = 0for (batch, (inp, tar)) in enumerate(train_dataset):batch_loss = train_step(inp, tar)total_loss += batch_lossprint(f'Epoch {epoch+1}, Loss: {total_loss/len(train_dataset)}')

3. 总结

在本文中,我们详细介绍了Transformer模型的基本原理,并使用Python和TensorFlow/Keras实现了一个简单的Transformer模型。通过本文的教程,希望你能够理解Transformer模型的工作原理和实现方法,并能够应用于自己的任务中。随着对Transformer模型的理解加深,你可以尝试实现更复杂的变种,如BERT和GPT等。