USB Camera Image Capture on Linux

Operating system: Linux

Capture method: V4L2 (Video4Linux2)

Device node: /dev/video0

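Linux

The core capture component on Linux is V4L2, short for Video4Linux2, the kernel's driver framework for video devices. Linux exposes a video device as a device file that an application opens and accesses much like an ordinary file (plus device-specific ioctl calls). A camera typically appears as /dev/video0.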

Listing camera devices

Method 1: list the device nodes under /dev with ls:

```shell
ls /dev/video*
```

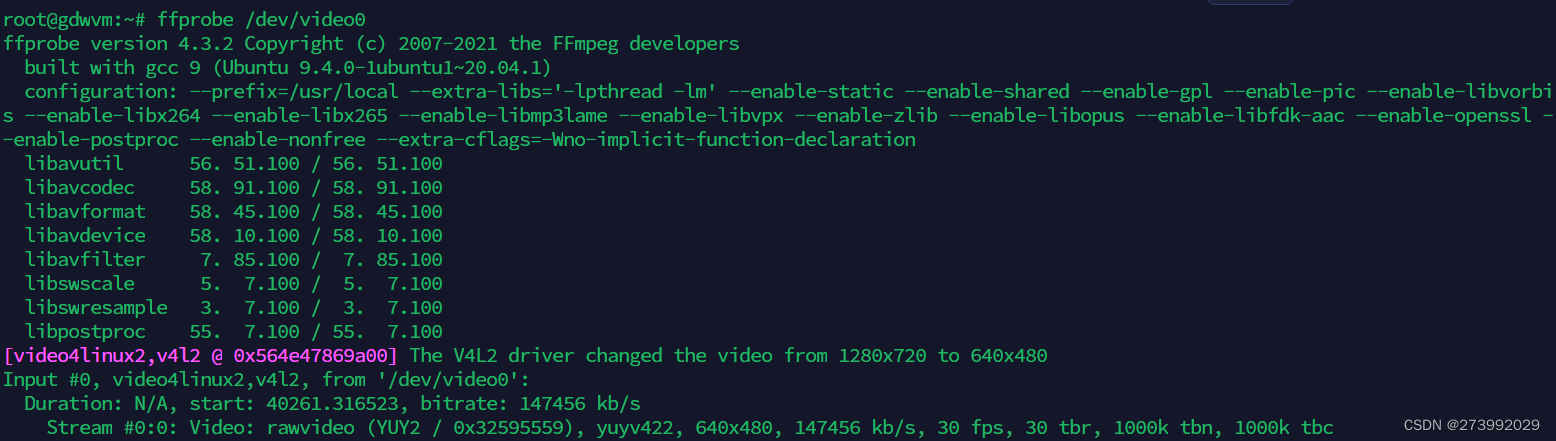
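The same listing can be done from C, for example to let a program pick a camera without shelling out. This sketch uses POSIX glob(3) on the pattern `/dev/video*`; `list_video_nodes` is a hypothetical helper name. A single USB camera often creates more than one node (for example a metadata node next to the capture node), so a program should still check each node with VIDIOC_QUERYCAP before using it.

```c
#include <glob.h>
#include <stdio.h>

/* Print every /dev/video* node, one per line (the set `ls /dev/video*`
 * shows). Returns the number of nodes found, 0 if there are none. */
size_t list_video_nodes(FILE *out)
{
    glob_t g;
    size_t n = 0;

    if (glob("/dev/video*", 0, NULL, &g) == 0) {
        n = g.gl_pathc;
        for (size_t i = 0; i < n; i++)
            fprintf(out, "%s\n", g.gl_pathv[i]);
        globfree(&g);
    }
    return n;
}
```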

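Method 2: use FFmpeg's ffprobe to inspect a camera attached to the system.

Install the FFmpeg command-line tools:

```shell
sudo apt-get install ffmpeg
```

Then probe the device:

```shell
ffprobe /dev/video0
```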

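For this camera, the raw frames captured from video0 are yuyv422 at 30 fps and 640x480. To save the camera stream, each frame is therefore first converted from yuyv422 to yuv420p (the input format most widely supported by H.264 encoders and players) and then encoded into an H.264 file.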
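A minimal sketch of the first step, the yuyv422 to yuv420p conversion, is shown below; the H.264 encoding step (for example with libx264 or FFmpeg's libavcodec) is not shown. The function name `yuyv422_to_yuv420p` is hypothetical. It assumes even width and height and averages the chroma of each pair of rows; simply taking the chroma of the even rows is another common choice.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert packed YUYV 4:2:2 (Y0 U Y1 V per pixel pair) to planar
 * YUV 4:2:0 (I420: Y plane, then U plane, then V plane).
 * Assumes even w and h; dst must hold w * h * 3 / 2 bytes. */
void yuyv422_to_yuv420p(const uint8_t *src, uint8_t *dst, int w, int h)
{
    uint8_t *yp = dst;
    uint8_t *up = dst + (size_t)w * h;
    uint8_t *vp = up + (size_t)(w / 2) * (h / 2);
    size_t stride = (size_t)w * 2;            /* bytes per YUYV row */

    /* Luma: every other byte of each packed row */
    for (int row = 0; row < h; row++) {
        const uint8_t *s = src + row * stride;
        for (int x = 0; x < w; x++)
            yp[(size_t)row * w + x] = s[x * 2];
    }
    /* Chroma: 4:2:2 already halves horizontally; average two rows
     * (with rounding) to halve vertically as well */
    for (int row = 0; row < h; row += 2) {
        const uint8_t *s0 = src + row * stride;
        const uint8_t *s1 = s0 + stride;
        for (int x = 0; x < w / 2; x++) {
            size_t ci = (size_t)(row / 2) * (w / 2) + x;
            up[ci] = (uint8_t)((s0[x * 4 + 1] + s1[x * 4 + 1] + 1) / 2);
            vp[ci] = (uint8_t)((s0[x * 4 + 3] + s1[x * 4 + 3] + 1) / 2);
        }
    }
}
```

For a 640x480 frame the input is 640 * 480 * 2 = 614400 bytes and the output is 640 * 480 * 3 / 2 = 460800 bytes.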


Common v4l2-ctl commands

```shell
# v4l2-ctl comes with the v4l-utils package
sudo apt-get install v4l-utils

# List video devices
v4l2-ctl --list-devices

# List supported video formats and resolutions of the default video device
v4l2-ctl --list-formats-ext

# List supported video formats and resolutions of a specific video device
v4l2-ctl --list-formats-ext --device path/to/video_device
# e.g.:
v4l2-ctl --list-formats-ext --device /dev/video0

# Get all details of a video device
v4l2-ctl --all --device path/to/video_device
# e.g.:
v4l2-ctl --all --device /dev/video0

# Capture a JPEG photo with a specific resolution from a video device
v4l2-ctl --device path/to/video_device --set-fmt-video=width=width,height=height,pixelformat=MJPG --stream-mmap --stream-to=path/to/output.jpg --stream-count=1
# e.g.:
v4l2-ctl --device /dev/video0 --set-fmt-video=width=1280,height=720,pixelformat=MJPG --stream-mmap --stream-to=/home/nvidia/Pictures/video0-output.jpg --stream-count=1

# Capture a raw video stream from a video device
v4l2-ctl --device path/to/video_device --set-fmt-video=width=width,height=height,pixelformat=format --stream-mmap --stream-to=path/to/output --stream-count=number_of_frames_to_capture
# e.g. (--stream-to must name a file, not a directory; the 10 frames are written back to back):
v4l2-ctl --device /dev/video0 --set-fmt-video=width=1280,height=720,pixelformat=MJPG --stream-mmap --stream-to=/home/nvidia/Pictures/video0-output.mjpg --stream-count=10

# List all of a video device's controls and their values
v4l2-ctl --list-ctrls --device path/to/video_device
# e.g.:
v4l2-ctl --list-ctrls --device /dev/video0
```

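Testing the camera

```shell
$ cheese -d /dev/video0
$ ffplay -f v4l2 -input_format bayer_bggr16le -video_size 640x480 -i /dev/video0
```

The -input_format and -video_size values must match a mode the device actually offers (check with v4l2-ctl --list-formats-ext). bayer_bggr16le only applies to cameras that deliver raw 16-bit Bayer data; for the yuyv422 camera described above the option would be -input_format yuyv422.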

Code

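common.h

```c
#ifndef COMMON_H
#define COMMON_H

#include <linux/videodev2.h>

#ifdef __cplusplus
extern "C" {
#endif

/* C variables (defined in v4l2.c) */
extern struct v4l2_fmtdesc fmtd[20];   /* formats reported by VIDIOC_ENUM_FMT */
extern unsigned char *displaybuf;      /* v4l2 video buffer */
extern int current_video_state;        /* 1 while streaming */

/* One mmap()ed driver buffer */
typedef struct buffer {
    void *start;
    unsigned int length;
} buffer;

/* Capture size requested from the driver */
#define SRC_WIDTH  1280
#define SRC_HEIGHT 720

/* Display size */
#define DST_WIDTH  1280
#define DST_HEIGHT 720

/* Number of driver buffers requested with VIDIOC_REQBUFS */
#define NB_BUFFER 4

#ifdef __cplusplus
}
#endif

#endif /* COMMON_H */
```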
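SRC_WIDTH and SRC_HEIGHT fix the size the program asks for, but the driver reports the row stride it actually uses in `bytesperline` of `struct v4l2_pix_format`, and that stride may be larger than width * 2 for YUYV. `c_yuyv_to_rgb` assumes tightly packed rows, so a driver that pads rows would skew the image. A minimal sketch of a stride-aware copy, with the hypothetical name `pack_yuyv_rows`:

```c
#include <stdint.h>
#include <string.h>

/* Copy one YUYV frame from a driver buffer whose rows may be padded
 * (bytesperline >= width * 2) into a tightly packed buffer of
 * width * 2 * height bytes. Returns 0 on success, -1 if the stride is
 * too small or src_len cannot hold the frame. */
int pack_yuyv_rows(const uint8_t *src, size_t src_len, uint32_t bytesperline,
                   uint8_t *dst, uint32_t width, uint32_t height)
{
    size_t row_bytes = (size_t)width * 2;   /* YUYV: 2 bytes per pixel */

    if (bytesperline < row_bytes)
        return -1;
    /* the last row does not need its padding */
    if (height > 0 && src_len < (size_t)bytesperline * (height - 1) + row_bytes)
        return -1;
    for (uint32_t y = 0; y < height; y++)
        memcpy(dst + y * row_bytes, src + (size_t)y * bytesperline, row_bytes);
    return 0;
}
```

With a tightly packed driver buffer (`bytesperline == width * 2`) this degenerates into a plain copy.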

v4l2.h

```c
#ifndef V4L2_H
#define V4L2_H

#include <errno.h>
#include <fcntl.h>
#include <linux/fb.h>
#include <linux/videodev2.h>
#include <poll.h>
#include <pthread.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/ioctl.h>
#include <sys/mman.h>
#include <sys/stat.h>
#include <sys/time.h>
#include <sys/types.h>
#include <time.h>
#include <unistd.h>

#include "common.h"   /* buffer, SRC_WIDTH/SRC_HEIGHT, NB_BUFFER */

#ifdef __cplusplus
extern "C" {
#endif

/* functions */
int  c_OpenDevice(char *video);
void c_CloseDevice(int videofd);
int  c_FormatDevice(unsigned int pixformat, int videofd);
int  c_RequestBuffer(buffer *vbuffer, int videofd);
int  c_GetBuffer(unsigned char *yuvBuffer, buffer *vbuffer, int videofd);
void c_DeintDevice(int videofd, buffer *vbuffer);
void c_NV12_TO_RGB24(unsigned char *nv12, unsigned char *rgb, int width, int height);
void c_yuyv_to_rgb(unsigned char *yuyvdata, unsigned char *rgbdata, int w, int h);

#ifdef __cplusplus
}
#endif

#endif /* V4L2_H */
```

camerathread1.h

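```cpp
#ifndef CAMERATHREAD1_H
#define CAMERATHREAD1_H

#include <QObject>
#include <QThread>
#include <QDebug>
#include <atomic>

class CameraThread1 : public QThread
{
    Q_OBJECT

public:
    CameraThread1();
    void run() override;
    void getCam1Buf();

    // Cleared from the GUI thread to stop the capture loop, hence atomic.
    std::atomic<bool> previewCam1{true};
    QString camera1Dev = "/dev/video0";

signals:
    // The same buffer is reused for every frame. With a queued (cross-thread)
    // connection the receiver should copy it promptly; the worker may already
    // be writing the next frame into it.
    void showCamera1(unsigned char *buffer);
};

#endif // CAMERATHREAD1_H
```

mainwindow.h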

```cpp
#ifndef MAINWINDOW_H
#define MAINWINDOW_H

#include <QMainWindow>
#include <QLabel>

#include "common.h"
#include "camerathread1.h"

QT_BEGIN_NAMESPACE
namespace Ui { class MainWindow; }
QT_END_NAMESPACE

class MainWindow : public QMainWindow
{
    Q_OBJECT

public:
    MainWindow(QWidget *parent = nullptr);
    ~MainWindow();

    void checkIspServer();

private:
    Ui::MainWindow *ui;
    // QLabel *camera1;
    CameraThread1 *cameraThread1;

public slots:
    void displayCam1Buf(unsigned char *buffer);
};

#endif // MAINWINDOW_H
```

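camerathread1.cpp

```cpp
#include "camerathread1.h"
#include "v4l2.h"

CameraThread1::CameraThread1()
{
}

void CameraThread1::run()
{
    msleep(200);
    getCam1Buf();
}

void CameraThread1::getCam1Buf()
{
    // Raw YUYV frame (needs 2 bytes/pixel) and converted RGB24 frame (3 bytes/pixel)
    unsigned char *yuvBuffer = (unsigned char *)malloc(SRC_WIDTH * SRC_HEIGHT * 3);
    unsigned char *rgbBuffer = (unsigned char *)malloc(SRC_WIDTH * SRC_HEIGHT * 3);
    buffer *vbuffer = (buffer *)calloc(NB_BUFFER, sizeof(*vbuffer));
    int fd = -1;

    if (yuvBuffer && rgbBuffer && vbuffer)
        fd = c_OpenDevice(camera1Dev.toLocal8Bit().data());
    if (fd >= 0 && c_FormatDevice(V4L2_PIX_FMT_YUYV, fd) != 0) {
        c_CloseDevice(fd);
        fd = -1;
    }
    if (fd >= 0 && c_RequestBuffer(vbuffer, fd) != 0)
        fd = -1;                    // c_RequestBuffer() already closed the device

    if (fd >= 0) {
        while (previewCam1) {
            if (c_GetBuffer(yuvBuffer, vbuffer, fd) != 0)
                break;              // stop, but still release the device below
            //c_NV12_TO_RGB24(yuvBuffer, rgbBuffer, SRC_WIDTH, SRC_HEIGHT);
            c_yuyv_to_rgb(yuvBuffer, rgbBuffer, SRC_WIDTH, SRC_HEIGHT);
            emit showCamera1(rgbBuffer);
            msleep(1000 / 30);
        }
        // close camera; c_DeintDevice() also frees vbuffer
        c_DeintDevice(fd, vbuffer);
        vbuffer = NULL;
        c_CloseDevice(fd);
    }

    free(vbuffer);
    free(yuvBuffer);
    // rgbBuffer is deliberately not freed: a queued showCamera1() may still point at it.
}
```

v4l2.c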

```c
#include "v4l2.h"

int buf_type = -1;
int current_video_state = 0;
struct v4l2_fmtdesc fmtd[20];
struct v4l2_format format;
unsigned char *displaybuf = NULL;
unsigned char *rgb24 = NULL;

int c_OpenDevice(char *video)
{
    struct v4l2_capability cap;
    struct v4l2_fmtdesc fmtdesc;

    /* open video */
    int videofd = open(video, O_RDWR);
    if (-1 == videofd) {
        printf("Error: cannot open %s device\n", video);
        return videofd;
    }
    printf("The %s device was opened successfully.\n", video);

    /* check capability */
    memset(&cap, 0, sizeof(struct v4l2_capability));
    if (ioctl(videofd, VIDIOC_QUERYCAP, &cap) < 0) {
        printf("Error: get capability.\n");
        goto fatal;
    }

    /* single-planar or multi-planar capture? */
    if (cap.capabilities & V4L2_CAP_VIDEO_CAPTURE) {
        buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    } else if (cap.capabilities & V4L2_CAP_VIDEO_CAPTURE_MPLANE) {
        buf_type = V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE;
    } else {
        printf("Error: application does not support device %s.\n", video);
        goto fatal;
    }

    /* query all pixel formats; the array must be cleared first */
    memset(fmtd, 0, sizeof(fmtd));
    memset(&fmtdesc, 0, sizeof(fmtdesc));
    fmtdesc.index = 0;
    fmtdesc.type = buf_type;
    while (fmtdesc.index < sizeof(fmtd) / sizeof(fmtd[0])
           && ioctl(videofd, VIDIOC_ENUM_FMT, &fmtdesc) != -1) {
        fmtd[fmtdesc.index] = fmtdesc;
        // printf("\t%d.%s\n", fmtdesc.index + 1, fmtdesc.description);
        fmtdesc.index++;
    }
    return videofd;

fatal:
    c_CloseDevice(videofd);
    return -1;
}

int c_FormatDevice(unsigned int pixformat, int videofd)
{
    /* set format */
    memset(&format, 0, sizeof(struct v4l2_format));
    format.type = buf_type;
    if (format.type == V4L2_BUF_TYPE_VIDEO_CAPTURE) {
        format.fmt.pix.width = SRC_WIDTH;
        format.fmt.pix.height = SRC_HEIGHT;
        format.fmt.pix.pixelformat = pixformat;
        format.fmt.pix.field = V4L2_FIELD_ANY;
        printf("VIDIO_S_FMT: type=%d, w=%d, h=%d, fmt=0x%x, field=%d\n",
               format.type, format.fmt.pix.width, format.fmt.pix.height,
               format.fmt.pix.pixelformat, format.fmt.pix.field);
    } else {
        format.fmt.pix_mp.width = SRC_WIDTH;
        format.fmt.pix_mp.height = SRC_HEIGHT;
        format.fmt.pix_mp.pixelformat = pixformat;
        format.fmt.pix_mp.field = V4L2_FIELD_ANY;
        printf(">> VIDIO_S_FMT: type=%d, w=%d, h=%d, fmt=0x%x, field=%d\n",
               format.type, format.fmt.pix_mp.width, format.fmt.pix_mp.height,
               format.fmt.pix_mp.pixelformat, format.fmt.pix_mp.field);
    }
    if (ioctl(videofd, VIDIOC_S_FMT, &format) < 0) {
        printf("Error: set format %d.\n", errno);
        return errno;
    }

    /* get format: the driver may have adjusted the request */
    if (ioctl(videofd, VIDIOC_G_FMT, &format) < 0) {
        printf("Error: get format %d.\n", errno);
        return errno;
    }
    /* width, height, pixelformat and field sit at the same offsets in fmt.pix and fmt.pix_mp */
    printf("VIDIO_G_FMT: type=%d, w=%d, h=%d, fmt=0x%x, field=%d\n",
           format.type, format.fmt.pix.width, format.fmt.pix.height,
           format.fmt.pix.pixelformat, format.fmt.pix.field);

    /* the capture buffers and conversions assume exactly this size and format */
    if (format.fmt.pix.width != SRC_WIDTH || format.fmt.pix.height != SRC_HEIGHT
        || format.fmt.pix.pixelformat != pixformat) {
        printf("Error: driver did not accept %dx%d in the requested format.\n",
               SRC_WIDTH, SRC_HEIGHT);
        return EINVAL;
    }
    return 0;
}

int c_RequestBuffer(buffer *vbuffer, int videofd)
{
    struct v4l2_requestbuffers reqbuf;
    struct v4l2_buffer v4l2_buf;
    /* plane array must stay valid for the ioctl calls that use it */
    struct v4l2_plane buf_planes[VIDEO_MAX_PLANES];
    unsigned int num_planes = format.fmt.pix_mp.num_planes;

    /* vbuffer holds one entry per frame, so only single-plane layouts fit */
    if (buf_type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE && num_planes != 1) {
        printf("Error: %u planes per frame are not supported.\n", num_planes);
        goto fatal;
    }

    /* buffer preparation */
    memset(&reqbuf, 0, sizeof(struct v4l2_requestbuffers));
    reqbuf.count = NB_BUFFER;
    reqbuf.type = buf_type;
    reqbuf.memory = V4L2_MEMORY_MMAP;
    if (ioctl(videofd, VIDIOC_REQBUFS, &reqbuf) < 0) {
        printf("Error: request buffer error=%d.\n", errno);
        goto fatal;
    }
    /* the driver may grant a different count; vbuffer has NB_BUFFER slots */
    if (reqbuf.count == 0 || reqbuf.count > NB_BUFFER) {
        printf("Error: driver granted %u buffers.\n", reqbuf.count);
        goto fatal;
    }

    /* map buffers */
    for (unsigned int i = 0; i < reqbuf.count; i++) {
        memset(&v4l2_buf, 0, sizeof(struct v4l2_buffer));
        v4l2_buf.index = i;
        v4l2_buf.type = buf_type;
        v4l2_buf.memory = V4L2_MEMORY_MMAP;
        if (v4l2_buf.type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE) {
            memset(buf_planes, 0, sizeof(buf_planes));
            v4l2_buf.m.planes = buf_planes;
            v4l2_buf.length = num_planes;
        }
        if (ioctl(videofd, VIDIOC_QUERYBUF, &v4l2_buf) < 0) {
            printf("Error: query buffer %d.\n", errno);
            goto fatal;
        }
        if (v4l2_buf.type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE) {
            vbuffer[i].start = mmap(NULL, v4l2_buf.m.planes[0].length, PROT_READ,
                                    MAP_SHARED, videofd, v4l2_buf.m.planes[0].m.mem_offset);
            vbuffer[i].length = v4l2_buf.m.planes[0].length;
        } else { /* V4L2_BUF_TYPE_VIDEO_CAPTURE */
            vbuffer[i].start = mmap(NULL, v4l2_buf.length, PROT_READ, MAP_SHARED,
                                    videofd, v4l2_buf.m.offset);
            vbuffer[i].length = v4l2_buf.length;
        }
        if (vbuffer[i].start == MAP_FAILED) {
            printf("Error: mmap buffers.\n");
            goto fatal;
        }
    }

    /* queue buffers */
    for (unsigned int i = 0; i < reqbuf.count; ++i) {
        memset(&v4l2_buf, 0, sizeof(struct v4l2_buffer));
        v4l2_buf.index = i;
        v4l2_buf.type = buf_type;
        v4l2_buf.memory = V4L2_MEMORY_MMAP;
        if (v4l2_buf.type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE) {
            memset(buf_planes, 0, sizeof(buf_planes));
            v4l2_buf.m.planes = buf_planes;
            v4l2_buf.length = num_planes;
        }
        if (ioctl(videofd, VIDIOC_QBUF, &v4l2_buf) < 0) {
            printf("Error: queue buffers, ret:%d i:%u\n", errno, i);
            goto fatal;
        }
    }
    printf("Queue buf done.\n");

    /* stream on */
    if (ioctl(videofd, VIDIOC_STREAMON, &buf_type) < 0) {
        printf("Error: streamon failed errno = %d.\n", errno);
        goto fatal;
    }
    current_video_state = 1;   /* open success */
    return 0;

fatal:
    printf("init camera fail!\n");
    current_video_state = 0;
    c_CloseDevice(videofd);
    return -1;
}

int c_GetBuffer(unsigned char *yuvBuffer, buffer *vbuffer, int videofd)
{
    struct v4l2_buffer v4l2_buf;
    /* plane array must stay valid for both DQBUF and QBUF */
    struct v4l2_plane planes[VIDEO_MAX_PLANES];
    unsigned int planes_num = format.fmt.pix_mp.num_planes;
    unsigned int buf_index;
    size_t size;

    memset(yuvBuffer, 0, SRC_WIDTH * SRC_HEIGHT * 3);

    /* dqbuf from video node (blocks until a frame is ready) */
    memset(&v4l2_buf, 0, sizeof(struct v4l2_buffer));
    v4l2_buf.type = buf_type;
    v4l2_buf.memory = V4L2_MEMORY_MMAP;
    if (v4l2_buf.type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE) {
        memset(planes, 0, sizeof(planes));
        v4l2_buf.m.planes = planes;
        v4l2_buf.length = planes_num;
    }
    if (ioctl(videofd, VIDIOC_DQBUF, &v4l2_buf) < 0) {
        printf("Error: dequeue buffer, errno %d\n", errno);
        return errno;
    }
    buf_index = v4l2_buf.index;

    /* single plane only (see c_RequestBuffer); never copy more than was
       mapped or more than yuvBuffer holds */
    if (v4l2_buf.type == V4L2_BUF_TYPE_VIDEO_CAPTURE_MPLANE)
        size = format.fmt.pix_mp.plane_fmt[0].sizeimage;
    else
        size = format.fmt.pix.sizeimage;
    if (size > vbuffer[buf_index].length)
        size = vbuffer[buf_index].length;
    if (size > (size_t)SRC_WIDTH * SRC_HEIGHT * 3)
        size = (size_t)SRC_WIDTH * SRC_HEIGHT * 3;
    memcpy(yuvBuffer, vbuffer[buf_index].start, size);

    /* give the buffer back to the driver */
    if (ioctl(videofd, VIDIOC_QBUF, &v4l2_buf) < 0) {
        printf("Error: queue buffer.\n");
        return errno;
    }
    return 0;
}

void c_CloseDevice(int videofd)
{
    close(videofd);
}

void c_DeintDevice(int videofd, buffer *vbuffer)
{
    struct v4l2_requestbuffers v4l2_rb;

    // if (current_video_state != 1)
    //     return;
    if (ioctl(videofd, VIDIOC_STREAMOFF, &buf_type) < 0)
        printf("Error: stream close failed errno = %d\n", errno);   /* still release the buffers */

    /* unmap each buffer with the same length it was mapped with */
    for (int i = 0; i < NB_BUFFER; i++) {
        if (vbuffer[i].start != NULL && vbuffer[i].start != MAP_FAILED)
            munmap(vbuffer[i].start, vbuffer[i].length);
    }

    /* count = 0 releases the driver's buffers */
    memset(&v4l2_rb, 0, sizeof(struct v4l2_requestbuffers));
    v4l2_rb.count = 0;
    v4l2_rb.type = buf_type;
    v4l2_rb.memory = V4L2_MEMORY_MMAP;
    if (ioctl(videofd, VIDIOC_REQBUFS, &v4l2_rb) < 0)
        printf("Error: release buffer error=%d.\n", errno);

    current_video_state = 0;
    free(vbuffer);
}

void c_NV12_TO_RGB24(unsigned char *data, unsigned char *rgb, int width, int height)
{
    int index = 0;
    unsigned char *ybase = data;                   /* Y plane: width * height bytes */
    unsigned char *ubase = &data[width * height];  /* interleaved UV plane */
    unsigned char Y, U, V;
    int R, G, B;

    for (int y = 0; y < height; y++) {
        for (int x = 0; x < width; x++) {
            /* YYYYYYYY UVUV: one UV pair per 2x2 block of pixels */
            Y = ybase[x + y * width];
            U = ubase[y / 2 * width + (x / 2) * 2];
            V = ubase[y / 2 * width + (x / 2) * 2 + 1];
            R = Y + 1.4075 * (V - 128);
            G = Y - 0.3455 * (U - 128) - 0.7169 * (V - 128);
            B = Y + 1.779 * (U - 128);
            if (R > 255) R = 255; else if (R < 0) R = 0;
            if (G > 255) G = 255; else if (G < 0) G = 0;
            if (B > 255) B = 255; else if (B < 0) B = 0;
            rgb[index++] = R;
            rgb[index++] = G;
            rgb[index++] = B;
        }
    }
}

void c_yuyv_to_rgb(unsigned char *yuyvdata, unsigned char *rgbdata, int w, int h)
{
    int r1, g1, b1;
    int r2, g2, b2;

    for (int i = 0; i < w * h / 2; i++) {
        /* Y0 U0 Y1 V1 --> [Y0 U0 V1] [Y1 U0 V1]: two pixels share U and V */
        unsigned char Y0 = yuyvdata[i * 4 + 0];
        unsigned char U0 = yuyvdata[i * 4 + 1];
        unsigned char Y1 = yuyvdata[i * 4 + 2];
        unsigned char V1 = yuyvdata[i * 4 + 3];

        r1 = Y0 + 1.4075 * (V1 - 128);                     if (r1 > 255) r1 = 255; if (r1 < 0) r1 = 0;
        g1 = Y0 - 0.3455 * (U0 - 128) - 0.7169 * (V1 - 128); if (g1 > 255) g1 = 255; if (g1 < 0) g1 = 0;
        b1 = Y0 + 1.779 * (U0 - 128);                      if (b1 > 255) b1 = 255; if (b1 < 0) b1 = 0;
        r2 = Y1 + 1.4075 * (V1 - 128);                     if (r2 > 255) r2 = 255; if (r2 < 0) r2 = 0;
        g2 = Y1 - 0.3455 * (U0 - 128) - 0.7169 * (V1 - 128); if (g2 > 255) g2 = 255; if (g2 < 0) g2 = 0;
        b2 = Y1 + 1.779 * (U0 - 128);                      if (b2 > 255) b2 = 255; if (b2 < 0) b2 = 0;

        rgbdata[i * 6 + 0] = r1;
        rgbdata[i * 6 + 1] = g1;
        rgbdata[i * 6 + 2] = b1;
        rgbdata[i * 6 + 3] = r2;
        rgbdata[i * 6 + 4] = g2;
        rgbdata[i * 6 + 5] = b2;
    }
}
```

mainwindow.cpp

```cpp
#include "mainwindow.h"
#include "ui_mainwindow.h"

#include <QDebug>
#include <QProcess>
#include <QStorageInfo>
#include <QDirIterator>
#include <libudev.h>
#include <QSocketNotifier>
#include <QImage>
#include <QPixmap>

MainWindow::MainWindow(QWidget *parent)
    : QMainWindow(parent)
    , ui(new Ui::MainWindow)
{
    ui->setupUi(this);
    cameraThread1 = new CameraThread1;
    //cameraThread1->camera1Dev = "/dev/video-camera0";
    // Cross-thread signal: the slot runs in the GUI thread (queued connection)
    connect(cameraThread1, &CameraThread1::showCamera1, this, &MainWindow::displayCam1Buf);
    cameraThread1->start();
}

void MainWindow::displayCam1Buf(unsigned char *buffer)
{
    // Wrap the RGB24 frame without copying, then convert (copy) it into a pixmap
    QImage img(buffer, SRC_WIDTH, SRC_HEIGHT, SRC_WIDTH * 3, QImage::Format_RGB888);
    ui->camera1->setPixmap(QPixmap::fromImage(img));
    // qDebug("<<<<<< %s %d\n", __FILE__, __LINE__);
}

MainWindow::~MainWindow()
{
    // Stop the capture loop and wait for the thread before tearing down
    cameraThread1->previewCam1 = false;
    cameraThread1->wait();
    delete cameraThread1;
    delete ui;
}
```
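`c_yuyv_to_rgb` evaluates floating-point expressions for every pixel. A common alternative on embedded boards is fixed-point arithmetic: scale the same coefficients (1.4075, 0.3455, 0.7169, 1.779) by 256, add 128 for rounding, and shift right by 8. The sketch below is an assumption-labeled variant with the hypothetical name `yuyv_to_rgb_fixed`, not the code above; because it rounds where the float version truncates, individual channel values can differ from `c_yuyv_to_rgb` by about one.

```c
#include <stddef.h>
#include <stdint.h>

/* Clamp a value that is scaled by 256 back into 0..255.
 * Negative values are clamped before shifting, so no negative shift occurs. */
static uint8_t clamp_shift8(int v)
{
    if (v < 0)
        return 0;
    v >>= 8;
    return v > 255 ? 255 : (uint8_t)v;
}

/* Fixed-point YUYV -> RGB24 with the coefficients of c_yuyv_to_rgb
 * multiplied by 256: 1.4075 -> 360, 0.3455 -> 88, 0.7169 -> 184,
 * 1.779 -> 455. rgb must hold w * h * 3 bytes. */
void yuyv_to_rgb_fixed(const uint8_t *yuyv, uint8_t *rgb, int w, int h)
{
    size_t pairs = (size_t)w * h / 2;

    for (size_t i = 0; i < pairs; i++) {
        const uint8_t *p = yuyv + i * 4;      /* Y0 U Y1 V */
        int du = p[1] - 128, dv = p[3] - 128;
        int rd = 360 * dv;                    /* shared by both pixels */
        int gd = -88 * du - 184 * dv;
        int bd = 455 * du;
        for (int k = 0; k < 2; k++) {
            int y = 256 * p[k * 2] + 128;     /* Y0, then Y1; +128 rounds */
            uint8_t *out = rgb + i * 6 + k * 3;
            out[0] = clamp_shift8(y + rd);
            out[1] = clamp_shift8(y + gd);
            out[2] = clamp_shift8(y + bd);
        }
    }
}
```

It takes the same arguments as `c_yuyv_to_rgb`, so it could be called in its place inside `getCam1Buf()`.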