Hugging Face notes: deploying large models (Gemma 2B, Gemma 7B) and basic usage

1 Deployment

1.1 Requesting access

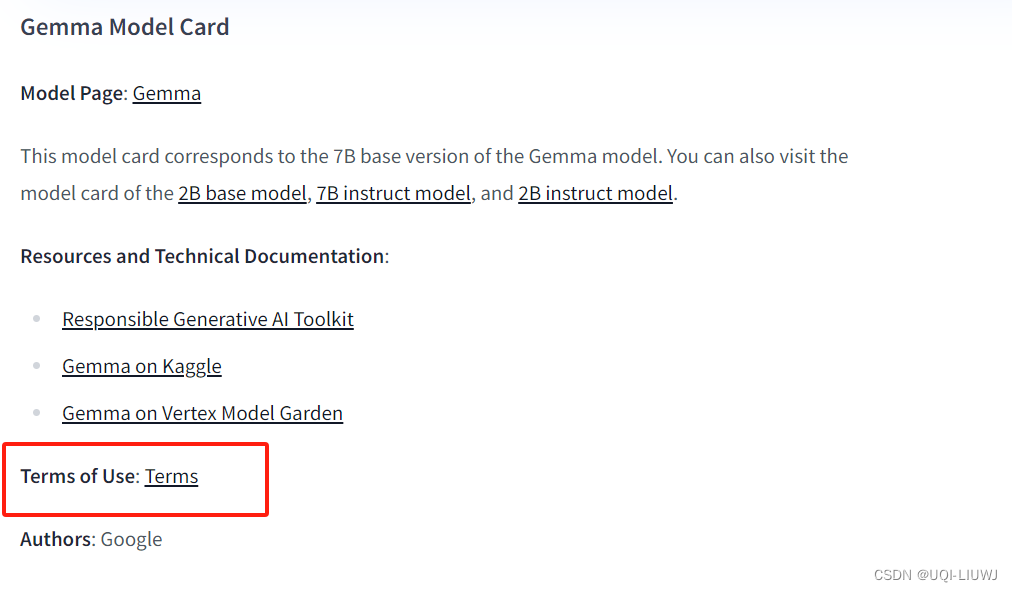

On the Gemma model page on Hugging Face, click "term" to request access to Gemma:

https://huggingface.co/google/gemma-7b

Then accept the terms.

1.2 Adding your Hugging Face token

If you run the sample code from the Gemma page as-is, you hit the following problem:

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-7b")
model = AutoModelForCausalLM.from_pretrained("google/gemma-7b")

input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**input_ids)
print(tokenizer.decode(outputs[0]))

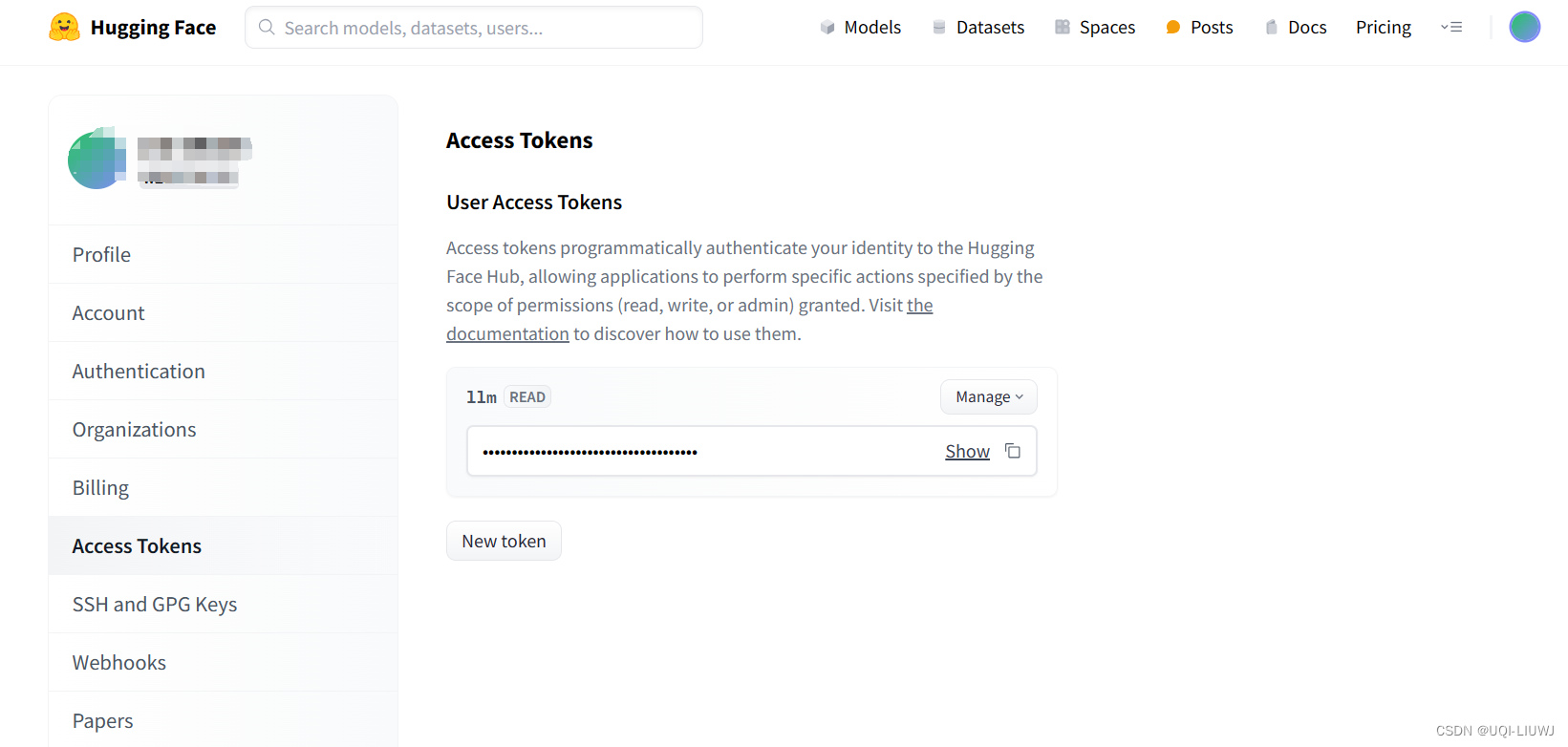

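In that case you need to add your own Hugging Face token:

import os

# Set this before calling from_pretrained; '....' stands for your own token
os.environ["HF_TOKEN"] = '....'

You can find the token on the Access Tokens page of your Hugging Face account settings.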
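Hard-coding the token in a script makes it easy to leak, for example when the notebook is shared. A minimal sketch, assuming you would rather export HF_TOKEN in your shell and only read it in Python. The helper name `resolve_hf_token` is hypothetical and not part of transformers. Its result can be passed as the `token=` argument of `from_pretrained`, which is also used in 2.1.1.

```python
import os


def resolve_hf_token(explicit=None):
    """Hypothetical helper: return a Hugging Face access token.

    An explicitly passed token wins; otherwise fall back to the HF_TOKEN
    environment variable. Fail early with a clear message if neither is set,
    instead of failing later when a gated model is downloaded.
    """
    token = explicit or os.environ.get("HF_TOKEN")
    if not token:
        raise RuntimeError("No Hugging Face token found: export HF_TOKEN or pass token=...")
    return token
```

Usage: `AutoTokenizer.from_pretrained("google/gemma-7b", token=resolve_hf_token())`.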

2 Official Gemma model examples

2.0 Introduction to Gemma

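- Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models.

- They are text-to-text, decoder-only large language models, available in English, with open weights, pretrained variants, and instruction-tuned variants.

- Gemma models are well suited to a variety of text generation tasks, including question answering, summarization, and reasoning. Their relatively small size makes it possible to deploy them in resource-limited environments such as a laptop, a desktop, or your own cloud infrastructure. This puts state-of-the-art AI models within everyone's reach and helps foster innovation.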

2.1 Text generation

2.1.1 Running on CPU

from transformers import AutoTokenizer, AutoModelForCausalLM

'''

AutoTokenizer用于加载预训练的分词器

AutoModelForCausalLM则用于加载预训练的因果语言模型(Causal Language Model),这种模型通常用于文本生成任务

'''tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b",token='。。。')

#加载gemma-2b的预训练分词器

model = AutoModelForCausalLM.from_pretrained("google/gemma-2b",token='。。。')

#加载gemma-2b的预训练语言生成模型

'''

使用其他几个进行文本续写,其他的地方是一样的,就这里加载的预训练模型不同:

"google/gemma-2b-it"

"google/gemma-7b"

"google/gemma-7b-it"

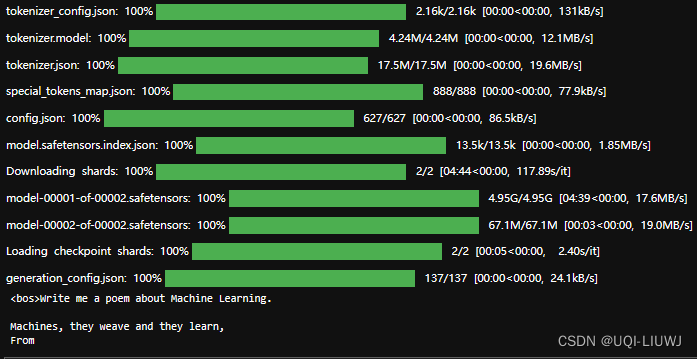

'''input_text = "Write me a poem about Machine Learning."

#定义了要生成文本的初始输入

input_ids = tokenizer(input_text, return_tensors="pt")

#使用前面加载的分词器将input_text转换为模型可理解的数字表示【token id】

#return_tensors="pt"表明返回的是PyTorch张量格式。outputs = model.generate(**input_ids)

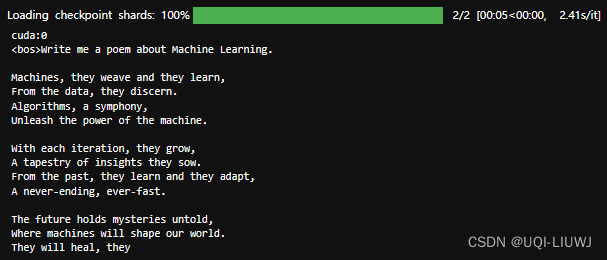

#使用模型和转换后的输入input_ids来生成文本print(tokenizer.decode(outputs[0]))

#将生成的文本令牌解码为人类可读的文本,并打印出来
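Why does `model.generate(**input_ids)` work? The tokenizer call returns a dict-like object with keys such as `input_ids` and `attention_mask`, and `**` spreads those keys into keyword arguments. Below is a minimal plain-Python sketch of that mechanism. `fake_generate` is a hypothetical stand-in for `model.generate`, and the ids are placeholders, not real Gemma output.

```python
def fake_generate(input_ids=None, attention_mask=None, max_length=20):
    """Hypothetical stand-in for model.generate: report what it received."""
    return {
        "input_ids": input_ids,
        "attention_mask": attention_mask,
        "max_length": max_length,
    }


# Same shape as what tokenizer(text, return_tensors="pt") holds,
# but with plain lists and placeholder ids instead of PyTorch tensors.
encoded = {"input_ids": [[2, 10, 11, 12]], "attention_mask": [[1, 1, 1, 1]]}

# ** turns each key into a keyword argument, so this is equivalent to
# fake_generate(input_ids=[[...]], attention_mask=[[...]], max_length=100)
received = fake_generate(**encoded, max_length=100)
print(received["attention_mask"])  # [[1, 1, 1, 1]]
```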

2.1.2 Running on GPU

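Multiple GPUs

# ... same as in 2.1.1 (imports and tokenizer loading) ...
# device_map needs the accelerate package (pip install accelerate);
# "auto" spreads the model over the available GPUs (and CPU if needed)
model = AutoModelForCausalLM.from_pretrained("google/gemma-2b", device_map="auto")
input_text = "Write me a poem about Machine Learning."
# Move the encoded inputs to the device the model was loaded on
input_ids = tokenizer(input_text, return_tensors="pt").to(model.device)
# ... the rest is the same as in 2.1.1 ...

A specific single GPU

# ... same as in 2.1.1 (imports and tokenizer loading) ...
# "cuda:0" loads the whole model onto the first GPU
model = AutoModelForCausalLM.from_pretrained("google/gemma-2b", device_map="cuda:0")
input_text = "Write me a poem about Machine Learning."
input_ids = tokenizer(input_text, return_tensors="pt").to(model.device)
# ... the rest is the same as in 2.1.1 ...

2.1.3 Setting the length of the generated text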

Everything else stays the same as in 2.1.1; only the outputs line changes:

outputs = model.generate(**input_ids, max_length=100)
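`max_length` caps the whole sequence, prompt tokens included. `max_new_tokens` (used in 2.2.4) caps only the newly generated tokens, and when both are given, transformers lets `max_new_tokens` take precedence. If neither is set, generation falls back to the generation config. The library default there is `max_length=20` unless the checkpoint overrides it, which is likely why the 2.1.1 output is short. The arithmetic is shown below as a small sketch. The helper name and the 10-token prompt length are illustrative, not measured on Gemma.

```python
def new_token_budget(prompt_len, max_length=20, max_new_tokens=None):
    """Hypothetical helper: upper bound on how many tokens generate() may add.

    max_new_tokens, if given, counts only new tokens and takes precedence;
    otherwise max_length counts prompt + new tokens. Generation can still
    stop earlier, e.g. when an end-of-sequence token is produced.
    """
    if max_new_tokens is not None:
        return max_new_tokens
    return max(0, max_length - prompt_len)


print(new_token_budget(10))                                      # 10
print(new_token_budget(10, max_length=100))                      # 90
print(new_token_budget(10, max_length=100, max_new_tokens=500))  # 500
```

Because `max_new_tokens` does not depend on the prompt length, it is usually the easier knob for chat prompts whose length varies.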

2.2 Using the chat format

I have not yet figured out how to pass Gemma n different chats at once, so this example uses just one.
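One possible approach, which I have not tested with Gemma in these notes, is offered only as a sketch. First, render each chat separately with `apply_chat_template(..., tokenize=False, add_generation_prompt=True)`. Then tokenize the list of prompt strings in one call with `padding=True` and `add_special_tokens=False`, because each rendered prompt already starts with `<bos>`. For decoder-only models, padding usually goes on the left (`tokenizer.padding_side = "left"`), so that every prompt ends exactly where generation starts. The plain-Python sketch below only shows what left padding does to the ids and the attention mask. `left_pad` is hypothetical, pad id 0 is an assumption, and the ids are placeholders.

```python
def left_pad(batch, pad_id=0):
    """Hypothetical helper: left-pad variable-length id lists to one length.

    Returns (padded_ids, attention_mask); the mask is 0 on padding
    and 1 on real tokens.
    """
    width = max(len(seq) for seq in batch)
    padded, mask = [], []
    for seq in batch:
        n_pad = width - len(seq)
        padded.append([pad_id] * n_pad + list(seq))
        mask.append([0] * n_pad + [1] * len(seq))
    return padded, mask


# Two placeholder prompts of different lengths.
ids, mask = left_pad([[2, 5, 6, 7], [2, 8]])
print(ids)   # [[2, 5, 6, 7], [0, 0, 2, 8]]
print(mask)  # [[1, 1, 1, 1], [0, 0, 1, 1]]
```

With right padding, the shorter prompt would end in pad tokens, and the model would be continuing after padding instead of after its own prompt.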

2.2.1 The model

As with text generation, load a tokenizer and a causal LM from the pretrained checkpoint:

# pip install accelerate   (needed for device_map)
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("google/gemma-2b-it")
model = AutoModelForCausalLM.from_pretrained("google/gemma-2b-it", device_map="cuda:0")

2.2.2 Building the prompt

chat = [
    {"role": "user", "content": "I am going to Paris, what should I see?"},
    {
        "role": "assistant",
        "content": """\

Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere. Here are some of the top attractions to see in Paris:

1. The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

2. The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

3. Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.""",
    },
    {"role": "user", "content": "What is so great about #1?"},
]

prompt = tokenizer.apply_chat_template(chat, tokenize=False, add_generation_prompt=True)
# tokenize=False: whether to tokenize the text after applying the template; False returns the formatted string instead of token ids
# add_generation_prompt=True: whether to append a generation prompt to the formatted text;
# True appends the marker that cues the model to write the next turn, which for Gemma is <start_of_turn>model
print(prompt)

'''

<bos><start_of_turn>user

I am going to Paris, what should I see?<end_of_turn>

<start_of_turn>model

Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere. Here are some of the top attractions to see in Paris:

1. The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

2. The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

3. Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.<end_of_turn>

<start_of_turn>user

What is so great about #1?<end_of_turn>

<start_of_turn>model

'''

2.2.3 Tokenization
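Before tokenizing, it helps to see what `apply_chat_template` produced. The plain-Python sketch below approximates the format in the printed prompt:

- `<bos>` appears once at the start.
- Each message is wrapped in `<start_of_turn>{role}\n ... <end_of_turn>\n`, with the `assistant` role written as `model`.
- A trailing `<start_of_turn>model\n` is added when `add_generation_prompt=True`.

The sketch is a reconstruction from that output, not the template shipped with the tokenizer, which may also trim content and check role order. The assistant reply is shortened here. Because the string already starts with `<bos>`, the `encode` call passes `add_special_tokens=False`, so a second `<bos>` is not prepended.

```python
def render_gemma_chat(messages, add_generation_prompt=False):
    """Approximate the Gemma prompt format seen in the printed prompt (a sketch)."""
    parts = ["<bos>"]
    for message in messages:
        # The printed prompt says "model" where the chat list says "assistant".
        role = "model" if message["role"] == "assistant" else message["role"]
        parts.append(f"<start_of_turn>{role}\n{message['content']}<end_of_turn>\n")
    if add_generation_prompt:
        # Marker telling the model that it is its turn to answer.
        parts.append("<start_of_turn>model\n")
    return "".join(parts)


chat = [
    {"role": "user", "content": "I am going to Paris, what should I see?"},
    {"role": "assistant", "content": "Visit the Eiffel Tower."},  # shortened reply
    {"role": "user", "content": "What is so great about #1?"},
]
print(render_gemma_chat(chat, add_generation_prompt=True))
```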

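# add_special_tokens=False: the prompt string already starts with <bos>, so do not prepend another one
inputs = tokenizer.encode(prompt, add_special_tokens=False, return_tensors="pt")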

inputs

'''

tensor([[ 2, 106, 1645, 108, 235285, 1144, 2319, 577, 7127,235269, 1212, 1412, 590, 1443, 235336, 107, 108, 106,2516, 108, 29437, 235269, 573, 6037, 576, 6081, 235269,603, 3836, 604, 1277, 24912, 16333, 235269, 3096, 52054,235269, 13457, 82625, 235269, 578, 23939, 13795, 235265, 5698,708, 1009, 576, 573, 2267, 39664, 577, 1443, 575,7127, 235292, 108, 235274, 235265, 714, 125957, 22643, 235292,714, 34829, 125957, 22643, 603, 974, 576, 573, 1546,93720, 82625, 575, 573, 2134, 578, 6952, 79202, 7651,576, 573, 3413, 235265, 108, 235284, 235265, 714, 91182,9850, 235292, 714, 91182, 603, 974, 576, 573, 2134,235303, 235256, 10155, 578, 1546, 10964, 52054, 235269, 12986,671, 20110, 5488, 576, 3096, 578, 51728, 235269, 3359,573, 37417, 25380, 235265, 108, 235304, 235265, 32370, 235290,76463, 41998, 235292, 1417, 4964, 57046, 603, 974, 576,573, 1546, 10964, 82625, 575, 7127, 578, 603, 3836,604, 1277, 60151, 16333, 578, 24912, 44835, 5570, 11273,235265, 108, 8652, 708, 1317, 476, 2619, 576, 573,1767, 39664, 674, 7127, 919, 577, 3255, 235265, 3279,712, 1683, 577, 1443, 578, 749, 235269, 665, 235303,235256, 793, 5144, 674, 7127, 603, 974, 576, 573,1546, 5876, 18408, 42333, 575, 573, 2134, 235265, 107,108, 106, 1645, 108, 1841, 603, 712, 1775, 1105,1700, 235274, 235336, 107, 108, 106, 2516, 108]])

'''

2.2.4 Generating the output
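The ids printed in 2.2.3 mirror the prompt string. Comparing that tensor with the printed prompt suggests these mappings:

- 2 = `<bos>`
- 106 = `<start_of_turn>`
- 107 = `<end_of_turn>`
- 108 = newline
- 1645 = `user`
- 2516 = `model`

They are inferred from the output, not looked up in the vocabulary; `tokenizer.convert_ids_to_tokens` can confirm them. Under that assumption, the sketch below splits an id sequence back into turns. The sample keeps the first user turn, the last user turn and the generation prompt from that tensor, and omits the long assistant turn.

```python
# Special ids inferred from the printed prompt and tensor (assumption).
BOS, START_OF_TURN, END_OF_TURN, NEWLINE = 2, 106, 107, 108
ROLE_NAMES = {1645: "user", 2516: "model"}


def split_turns(ids):
    """Split a Gemma-style id sequence into (role, content_ids) pairs."""
    turns = []
    i = 0
    while i < len(ids):
        if ids[i] == START_OF_TURN and i + 1 < len(ids):
            role = ROLE_NAMES.get(ids[i + 1], ids[i + 1])
            start = i + 3  # skip <start_of_turn>, the role id and the newline
            end = start
            while end < len(ids) and ids[end] != END_OF_TURN:
                end += 1
            turns.append((role, ids[start:end]))
            i = end + 1
        else:
            i += 1
    return turns


# Excerpt of the 2.2.3 tensor (assistant turn omitted).
sample = [
    2, 106, 1645, 108, 235285, 1144, 2319, 577, 7127, 235269, 1212, 1412, 590, 1443, 235336, 107, 108,
    106, 1645, 108, 1841, 603, 712, 1775, 1105, 1700, 235274, 235336, 107, 108,
    106, 2516, 108,
]
for role, content in split_turns(sample):
    print(role, len(content))  # user 11, then user 8, then model 0
```

The final `model` turn is empty: that is the generation prompt, where `model.generate` starts writing.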

As with text generation, this step also uses model.generate:

outputs = model.generate(input_ids=inputs.to(model.device), max_new_tokens=500)

print(tokenizer.decode(outputs[0]))

'''

<bos><start_of_turn>user

I am going to Paris, what should I see?<end_of_turn>

<start_of_turn>model

Paris, the capital of France, is known for its stunning architecture, art museums, historical landmarks, and romantic atmosphere. Here are some of the top attractions to see in Paris:

1. The Eiffel Tower: The iconic Eiffel Tower is one of the most recognizable landmarks in the world and offers breathtaking views of the city.

2. The Louvre Museum: The Louvre is one of the world's largest and most famous museums, housing an impressive collection of art and artifacts, including the Mona Lisa.

3. Notre-Dame Cathedral: This beautiful cathedral is one of the most famous landmarks in Paris and is known for its Gothic architecture and stunning stained glass windows.

These are just a few of the many attractions that Paris has to offer. With so much to see and do, it's no wonder that Paris is one of the most popular tourist destinations in the world.<end_of_turn>

<start_of_turn>user

What is so great about #1?<end_of_turn>

<start_of_turn>model

The Eiffel Tower is one of the most iconic landmarks in the world and offers breathtaking views of the city. It is a symbol of French engineering and architecture and is a must-see for any visitor to Paris.<eos>

'''
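As the output shows, `decode(outputs[0])` returns the whole conversation, because `generate` returns the prompt ids followed by the new ids. To print only the reply, a common pattern is to slice off the first `inputs.shape[-1]` ids, as in `tokenizer.decode(outputs[0][inputs.shape[-1]:], skip_special_tokens=True)`. Here `skip_special_tokens=True` also drops markers such as `<eos>`. The slicing itself is sketched below on plain lists. The reply ids 901 and 902 are made up, and 1 as the `<eos>` id is an assumption.

```python
def new_tokens_only(output_ids, prompt_len):
    """Return only the ids that generate() appended after the prompt."""
    return output_ids[prompt_len:]


# Placeholder prompt: the generation-prompt tail from 2.2.3 (<start_of_turn>model\n).
prompt_ids = [106, 2516, 108]
# generate() output = prompt ids + new ids (made-up reply ids, 1 standing for <eos>).
output_ids = prompt_ids + [901, 902, 1]

reply_ids = new_tokens_only(output_ids, len(prompt_ids))
print(reply_ids)  # [901, 902, 1]
```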