unity depth texture-01

https://docs.unity3d.com/Manual/SL-CameraDepthTexture.html

camera’s depth texture

A camera can generate a depth, depth+normals, or motion vector texture. This is a minimalistic G-buffer texture that can be used for post-processing effects or to implement custom lighting models (e.g. light pre-pass). It is also possible to build similar textures yourself, using the Shader Replacement feature.

The camera’s depth texture mode can be enabled using the Camera.depthTextureMode variable from script.

There are three possible depth texture modes:

1/ DepthTextureMode.Depth: a depth texture.

2/ DepthTextureMode.DepthNormals: depth and view space normals packed into one texture.

3/ DepthTextureMode.MotionVectors: per-pixel screen space motion of each screen texel for the current frame, packed into an RG16 texture.

These are flags, so it is possible to specify any combination of the above textures.
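Because the modes are bit flags, several textures can be requested at once by OR-ing them together. The following is a minimal sketch, assuming the script sits on the same GameObject as the camera; the class name EnableDepthAndMotion is made up for illustration.

```csharp
using UnityEngine;

// Hypothetical example component: requests two depth texture modes at once.
[RequireComponent(typeof(Camera))]
public class EnableDepthAndMotion : MonoBehaviour
{
    private void OnEnable()
    {
        Camera cam = GetComponent<Camera>();

        // |= adds the new flags and keeps any flags that were already set.
        cam.depthTextureMode |= DepthTextureMode.Depth | DepthTextureMode.MotionVectors;

        // A single flag can be tested with a bitwise AND.
        bool hasDepth = (cam.depthTextureMode & DepthTextureMode.Depth) != 0;
        Debug.Log("Depth texture requested: " + hasDepth);
    }
}
```

Using |= rather than = matters when several effects share one camera, since a plain assignment would drop the flags requested by the others.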

DepthTextureMode.Depth texture

This builds a screen-sized depth texture.

The depth texture is rendered using the same shader passes as used for shadow caster rendering (ShadowCaster pass type). So by extension, if a shader does not support shadow casting (i.e. there is no shadow caster pass in the shader or any of the fallbacks), then objects using that shader will not show up in the depth texture.

In other words, an object ends up in the depth texture only if its shader has a ShadowCaster pass. There are two ways to provide one:

- Make your shader fall back to some other shader that has a shadow casting pass, or

- If you are using surface shaders, adding an addshadow directive will make them generate a shadow pass too.

Note that only “opaque” objects (those whose materials and shaders are set up to use render queue <= 2500) are rendered into the depth texture.

That is, transparent objects never appear in the depth texture.

DepthTextureMode.DepthNormals texture

This builds a screen-sized 32 bit (8 bit/channel) texture, where view space normals are encoded into the R&G channels, and depth is encoded in the B&A channels. Normals are encoded using stereographic projection, and depth is a 16 bit value packed into two 8 bit channels.
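To make the "16 bit value packed into two 8 bit channels" idea concrete, here is a plain C# sketch of such a packing. It is written from memory of the EncodeFloatRG / DecodeFloatRG helpers in UnityCG.cginc, so the exact constants should be treated as an assumption; DepthPacking and Quantize8 are names invented for this example.

```csharp
using System;

// Illustrative CPU-side port of a two-channel depth packing (not Unity API).
public static class DepthPacking
{
    // Emulates storing a value in [0,1] in one 8-bit texture channel.
    private static float Quantize8(float v)
    {
        return (float)Math.Round(v * 255.0) / 255f;
    }

    private static float Frac(float v)
    {
        return v - (float)Math.Floor(v);
    }

    // Splits a depth in [0,1) into a coarse channel and a fine channel.
    public static void Encode(float depth, out float coarse, out float fine)
    {
        coarse = Frac(depth);
        fine = Frac(depth * 255f);
        coarse -= fine / 255f;      // remove the part carried by the fine channel
        coarse = Quantize8(coarse);
        fine = Quantize8(fine);
    }

    // Recombines the two channels into one depth value.
    public static float Decode(float coarse, float fine)
    {
        return coarse + fine / 255f;
    }
}
```

Encoding 0.5 and decoding it again gives a value within roughly 1e-5 of 0.5, which is far finer than the 1/255 step of a single 8-bit channel.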

The UnityCG.cginc include file has a helper function DecodeDepthNormal to decode depth and normal from the encoded pixel. The returned depth is in the 0…1 range.

For examples of how to use the depth and normals texture, please refer to the EdgeDetection image effect in the Shader Replacement example project or the Screen Space Ambient Occlusion Image Effect.

DepthTextureMode.MotionVectors texture

This builds a screen-sized RG16 (16-bit float/channel) texture, where screen space pixel motion is encoded into the R&G channels. The pixel motion is encoded in screen UV space.

When sampling from this texture, motion from the encoded pixel is returned in a range of -1…1. This will be the UV offset from the last frame to the current frame.

tips and tricks

The Camera inspector indicates when a camera is rendering a depth or a depth+normals texture.

The way that depth textures are requested from the Camera (Camera.depthTextureMode) might mean that after you disable an effect that needed them, the camera might still continue rendering them. If there are multiple effects present on a camera, where each of them needs the depth texture, there is no good way to automatically disable depth texture rendering if you disable the individual effects.
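One way to work around this inside your own project is to count requests per camera and clear the flag only when the last effect releases it. The sketch below is an assumption about how that could be done, not something Unity provides: DepthTextureRequests, Acquire and Release are hypothetical names, and the scheme only works for effects that go through this helper.

```csharp
using System.Collections.Generic;
using UnityEngine;

// Hypothetical helper: reference-counts depth texture requests per camera.
public static class DepthTextureRequests
{
    private static readonly Dictionary<Camera, int> counts = new Dictionary<Camera, int>();

    // Call from an effect's OnEnable.
    public static void Acquire(Camera cam)
    {
        int n;
        counts.TryGetValue(cam, out n);   // n stays 0 if the camera is unknown
        counts[cam] = n + 1;
        cam.depthTextureMode |= DepthTextureMode.Depth;
    }

    // Call from the same effect's OnDisable.
    public static void Release(Camera cam)
    {
        int n;
        if (!counts.TryGetValue(cam, out n))
        {
            return;                       // never acquired through this helper
        }
        if (n > 1)
        {
            counts[cam] = n - 1;          // other effects still need the texture
            return;
        }
        counts.Remove(cam);
        cam.depthTextureMode &= ~DepthTextureMode.Depth;
    }
}
```

Effects that set Camera.depthTextureMode directly are invisible to this counter, so the flag can still be cleared under them.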

When implementing complex shaders or image effects, keep rendering differences between platforms in mind. In particular, using a depth texture in an image effect often needs special handling on Direct3D + Anti-Aliasing.

In some cases, the depth texture might come directly from the native Z buffer. If you see artifacts in your depth texture, make sure that the shaders that use it do not write into the Z buffer (use ZWrite Off).

shader variables

Depth textures are available for sampling in shaders as global shader properties. By declaring a sampler called _CameraDepthTexture you will be able to sample the main depth texture for the camera.

_CameraDepthTexture always refers to the camera’s primary depth texture. By contrast, you can use _LastCameraDepthTexture to refer to the last depth texture rendered by any camera. This could be useful, for example, if you render a half-resolution depth texture in script using a secondary camera and want to make it available to a post-process shader.

The motion vectors texture (when enabled) is available in shaders as a global shader property. By declaring a sampler called ‘_CameraMotionVectorsTexture’ you can sample the texture for the currently rendering camera.

under the hood

Depth textures can come directly from the actual depth buffer, or be rendered in a separate pass, depending on the rendering path used and the hardware. Typically when using the Deferred Shading or Legacy Deferred Lighting rendering paths, the depth textures come ‘for free’ since they are a product of the G-buffer rendering anyway.

When the DepthNormals texture is rendered in a separate pass, this is done through Shader Replacement. Hence it is important to have the correct “RenderType” tag in your shaders.

When enabled, the MotionVectors texture always comes from an extra render pass. Unity will render moving GameObjects into this buffer, and construct their motion from the last frame to the current frame.

https://docs.unity3d.com/Manual/SL-DepthTextures.html

using depth texture

It is possible to create Render Textures where each pixel contains a high-precision Depth value. This is mostly used when some effects need the Scene’s depth to be available (for example, soft particles, screen space ambient occlusion and translucency would all need the Scene’s depth). Image effects often use depth textures too.

Pixel values in the depth texture range between 0 and 1, with a non-linear distribution. Precision is usually 32 or 16 bits, depending on configuration and platform used. When reading from the depth texture, a high precision value in a range between 0 and 1 is returned. If you need to get the distance from the camera, or an otherwise linear 0-1 value, compute that manually using helper macros (see below).
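The conversion from the non-linear stored value to a linear one can be written out on the CPU to see what the shader helpers compute. This is a sketch under two assumptions: that the depth buffer is conventional (0 at the near plane, 1 at the far plane), and that the Linear01Depth and LinearEyeDepth helpers in UnityCG.cginc use the documented _ZBufferParams layout (x = 1 - far/near, y = far/near, z = x/far, w = y/far). DepthMath is a name made up for this example.

```csharp
using System;

// Illustrative CPU-side version of the depth linearization helpers (not Unity API).
public static class DepthMath
{
    // Returns a linear value in 0..1, where 1 is the far plane.
    public static float Linear01Depth(float rawDepth, float near, float far)
    {
        float x = 1f - far / near;
        float y = far / near;
        return 1f / (x * rawDepth + y);
    }

    // Returns the distance from the camera plane in world units.
    public static float LinearEyeDepth(float rawDepth, float near, float far)
    {
        float x = 1f - far / near;
        float y = far / near;
        return 1f / ((x / far) * rawDepth + (y / far));
    }
}
```

With near = 1 and far = 100, a raw depth of 1 maps to a linear value of about 1 and an eye depth of about 100, while a raw depth of 0 maps to an eye depth of about 1 (the near plane). Platforms that use a reversed depth buffer swap the roles of 0 and 1 and use different parameter values, which this sketch does not cover.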

Depth textures are supported on most modern hardware and graphics APIs. Special requirements are listed below:

1/ Direct3D 11+ (Windows), OpenGL 3+ (Mac/Linux), OpenGL ES 3.0+ (Android/iOS), Metal (iOS) and consoles like PS4/Xbox One all support depth textures.

2/ OpenGL ES 2.0 (iOS/Android) requires the GL_OES_depth_texture extension to be present.

3/ WebGL requires the WEBGL_depth_texture extension.

For example, this shader would render the depth of its GameObjects:

```shaderlab
Shader "MyShader/RenderDepth"
{
    SubShader
    {
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            struct VertexData
            {
                float4 pos : POSITION;
            };

            struct V2F
            {
                float4 pos : SV_POSITION;
                float2 depth : TEXCOORD0;
            };

            V2F vert(VertexData v)
            {
                V2F res;
                res.pos = UnityObjectToClipPos(v.pos);
                UNITY_TRANSFER_DEPTH(res.depth);
                return res;
            }

            fixed4 frag(V2F v) : SV_Target
            {
                UNITY_OUTPUT_DEPTH(v.depth);
            }
            ENDCG
        }
    }
}
```

The code above is the official example.

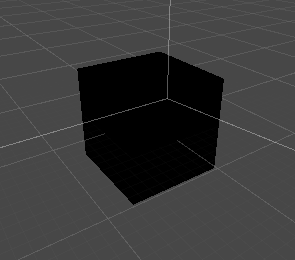

The rendered result is solid black.

Why?

The official documentation (https://docs.unity3d.com/Manual/SL-DepthTextures.html) says:

UNITY_TRANSFER_DEPTH(o): on platforms with native depth textures this macro does nothing at all, because the Z buffer value is rendered implicitly.

Similarly:

UNITY_OUTPUT_DEPTH(i): on platforms with native depth textures this macro always returns zero, because the Z buffer value is rendered implicitly.

So on a platform that supports native depth textures, the first macro does nothing and the second returns 0.

In the shader include files under the Unity installation directory, we find the following definitions:

```shaderlab
// Legacy; used to do something on platforms that had to emulate depth textures manually. Now all platforms have native depth textures.
#define UNITY_TRANSFER_DEPTH(oo)

// Legacy; used to do something on platforms that had to emulate depth textures manually. Now all platforms have native depth textures.
#define UNITY_OUTPUT_DEPTH(i) return 0
```

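Our version is Unity 2018.2.7f1.

In other words, these macros are obsolete: UNITY_TRANSFER_DEPTH has become a no-op, and UNITY_OUTPUT_DEPTH returns 0, which is why the output is black.

So how do we actually display the depth texture?

Reference articles: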

https://blog.csdn.net/puppet_master/article/details/52819874

https://www.jianshu.com/p/4e8162ed0c8d

http://disenone.github.io/2014/03/27/unity-depth-minimap

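There are several ways to obtain the depth texture. Here we start with the post-processing approach.

With this approach we simply read the _CameraDepthTexture variable that Unity provides. The details are as follows.

First comes the post-processing script, which enables the depth texture so that the shader has a source to read from.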

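```csharp
using UnityEngine;

// Post-processing script: asks the camera for a depth texture and blits the
// screen through a material that visualizes it.
[ExecuteInEditMode]
[RequireComponent(typeof(Camera))]
public class OpenDepthMode : MonoBehaviour
{
    private Material mat;
    public Shader shader;

    private void OnEnable()
    {
        // Request the depth texture; |= keeps flags set by other effects.
        GetComponent<Camera>().depthTextureMode |= DepthTextureMode.Depth;
    }

    private void OnDisable()
    {
        // Clears the flag even if another effect on this camera still needs it.
        GetComponent<Camera>().depthTextureMode &= ~DepthTextureMode.Depth;
    }

    private void OnRenderImage(RenderTexture source, RenderTexture destination)
    {
        // Create the material lazily, once a shader has been assigned.
        if (mat == null && shader != null)
        {
            mat = new Material(shader);
        }

        if (mat != null)
        {
            Graphics.Blit(source, destination, mat);
        }
        else
        {
            // No shader assigned: pass the image through unchanged.
            Graphics.Blit(source, destination);
        }
    }
}
```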

Shader code:

```shaderlab
Shader "MyShader/RenderDepth"
{
    SubShader
    {
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            // Global depth texture provided by Unity.
            sampler2D _CameraDepthTexture;

            struct VertexData
            {
                float4 pos : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct V2F
            {
                float4 pos : SV_POSITION;
                float2 uv : TEXCOORD0;
            };

            V2F vert(VertexData v)
            {
                V2F res;
                res.pos = UnityObjectToClipPos(v.pos);
                res.uv = v.uv;
                return res;
            }

            fixed4 frag(V2F v) : SV_Target
            {
                // First attempt: samples with both UV axes flipped (1 - uv).
                float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, 1 - v.uv);
                depth = Linear01Depth(depth);
                return float4(depth, depth, depth, 1);
            }
            ENDCG
        }
    }
}
```

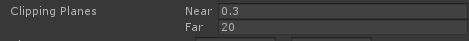

However, the result is an all-white screen.

Reference links:

https://www.jianshu.com/p/80a932d1f11e

https://blog.csdn.net/where_is_my_keyboard/article/details/78794156

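First, add a Fallback, so that a ShadowCaster pass is guaranteed to exist.

Second, adjust the near and far values of the camera's clipping planes, and do not make far too large.

Third, watch out for the UV flip on Direct3D:

```shaderlab
if (_MainTex_TexelSize.y < 0) // a negative texel height means the image has been flipped
    res.uv.y = 1 - res.uv.y;
```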
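For the second point, the clipping planes can be set in the Camera inspector or from script. Since the value shown on screen is a linear 0..1 depth relative to the far plane, an object 5 units away comes out as about 0.005 with far = 1000 but about 0.1 with far = 50. A minimal sketch follows; TightenClipPlanes and the default values are made up for illustration and should be chosen to fit the scene.

```csharp
using UnityEngine;

// Hypothetical example component: narrows the camera's depth range.
[RequireComponent(typeof(Camera))]
public class TightenClipPlanes : MonoBehaviour
{
    // Illustrative values only.
    public float near = 0.3f;
    public float far = 50f;

    private void OnEnable()
    {
        Camera cam = GetComponent<Camera>();
        cam.nearClipPlane = near;
        cam.farClipPlane = far;
    }
}
```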

The complete code is as follows:

```shaderlab
Shader "MyShader/RenderDepth"
{
    SubShader
    {
        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            #include "UnityCG.cginc"

            // Global depth texture provided by Unity.
            sampler2D _CameraDepthTexture;

            struct VertexData
            {
                float4 pos : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct V2F
            {
                float4 pos : SV_POSITION;
                float2 uv : TEXCOORD0;
            };

            // Set by Unity: (1/width, 1/height, width, height).
            float4 _MainTex_TexelSize;

            V2F vert(VertexData v)
            {
                V2F res;
                res.pos = UnityObjectToClipPos(v.pos);
                res.uv = v.uv;
                // A negative texel height means the image has been flipped.
                if (_MainTex_TexelSize.y < 0)
                    res.uv.y = 1 - res.uv.y;
                return res;
            }

            fixed4 frag(V2F v) : SV_Target
            {
                float depth = SAMPLE_DEPTH_TEXTURE(_CameraDepthTexture, v.uv);
                depth = Linear01Depth(depth);
                return float4(depth, depth, depth, 1);
            }
            ENDCG
        }
    }

    // The Fallback is optional for displaying depth. With it, objects that use
    // this shader are also written into the depth texture.
    Fallback "Diffuse"
}
```

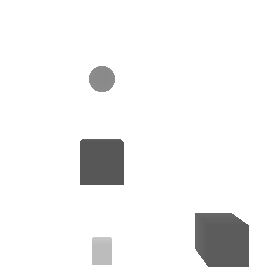

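This is the final result.

OK, that completes the first way of displaying depth.

These two objects use the shader above. The other two objects use the default material. The default material's shader has a fallback, so those objects are included in the depth texture.

As for whether the depth texture mode needs to be enabled at all: in my test it did not. The depth texture sampled correctly without it, and I do not know why.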
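One possible explanation, going by the "under the hood" notes above, is that the depth texture was already being produced for another reason, for example because the camera uses a deferred rendering path. This is a guess rather than a confirmed cause. A small diagnostic sketch such as the following can help narrow it down; LogDepthState is a made-up name.

```csharp
using UnityEngine;

// Hypothetical diagnostic component: logs the camera's depth-related state.
[RequireComponent(typeof(Camera))]
public class LogDepthState : MonoBehaviour
{
    private void Start()
    {
        Camera cam = GetComponent<Camera>();
        Debug.Log("depthTextureMode = " + cam.depthTextureMode
                  + ", actualRenderingPath = " + cam.actualRenderingPath);
    }
}
```

If the log shows a mode other than None while the script from earlier is disabled, some other component or setting is requesting the texture.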