BIOS-CXL CxlLib Source Code Walkthrough

Table of Contents

- Preface

- 1. CxlLib.c

- 1.1. GetNextCxlDevice()

- 1.2. IsGangedCxlDevice()

- 1.3. EnablePcieHierarchyForCxlDp()

- 1.4. ProgramCXLRcrbBar()

- 1.5. AllocateMmioForCxlPortCxl11()

- 1.6. EnumerateCxlDeviceCxl11()

- 1.7. DetectCxlTopology()

- 1.8. IsType3Device()

- 1.9. GetCxlDevCapCxl11()

- 1.10. EnableCxlCacheCxl11()

- 1.11. GetResDistHob()

- 1.12. InitializeComputeExpressLinkSpr()

- 1.13. ProgramSncRegistersOnCxl()

- 1.14. ConfigureCxl20HieForCxl11()

- 1.15. ConfigureCxl11Hierarchy()

- 1.16. ConfigureCxl20Device()

- 1.17. ConfigureCxl20Bridge()

- 1.18. ParseCxl20Hierarchy()

- 1.19. ConfigureCxlStackUncore()

- 1.20. InitializeComputeExpressLink20()

Preface

If you need a PDF, download the Markdown file from GitHub and convert it yourself. Please star the repository while you are there.

1. CxlLib.c

1.1. GetNextCxlDevice()

- Walks CurrentSocket and CurrentStack to find the next CXL port, that is, the next port for which GetCxlStatus() reports that it is already in CXL mode.

1.2. IsGangedCxlDevice()

- Checks whether the CXL device attached to the port is a ganged device with two CXL links.

/**

Routine to check if CXL device attached to the current CXL port(specified by CurrentSocket/CurrentStack)

is a ganged device that has two CXL links.

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@param[in] CurrentSocket Socket Index of the current CXL port

@param[in] CurrentStack Stack number of the current CXL port

@param[out] NextSocket Pointer to the Socket Index of the 2nd CXL port if it is a ganged CXL device

@param[out] NextStack Pointer to the Stack number of the 2nd CXL port if it is a ganged CXL device

@retval TRUE - It is a ganged CXL device, 2nd CXL port is returned in NextSocket/NextStack.

@retval FALSE - It is not a ganged CXL device, NextSocket/NextStack are undetermined.

**/

BOOLEAN

EFIAPI

IsGangedCxlDevice (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 CurrentSocket,

IN UINT8 CurrentStack,

OUT UINT8 *NextSocket,

OUT UINT8 *NextStack

)

{

KTI_STATUS KtiStatus;

UINT8 SocketId, StackId;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

if ((NextSocket == NULL) || (NextStack == NULL)) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "Error: Input NULL pointer!\n"));

return FALSE;

}

SocketId = CurrentSocket;

StackId = CurrentStack;

// Walk the CXL devices until one matches the VID and serial number of the device on the queried port

do {

// Call the previous function, GetNextCxlDevice()

KtiStatus = GetNextCxlDevice (

SocketId,

StackId,

NextSocket,

NextStack,

FALSE

);

if (KtiStatus == KTI_SUCCESS) { //Find another CXL device

if ((KtiVar->CxlInfo[CurrentSocket][CurrentStack].VendorId == KtiVar->CxlInfo[*NextSocket][*NextStack].VendorId) &&

(KtiVar->CxlInfo[CurrentSocket][CurrentStack].SerialNum == KtiVar->CxlInfo[*NextSocket][*NextStack].SerialNum)) {

// It is a ganged CXL accelerator that has two CXL links

//

return TRUE;

}

SocketId = *NextSocket;

StackId = *NextStack;

}

} while (KtiStatus == KTI_SUCCESS);

return FALSE;

}
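The matching rule above can be sketched in isolation. This is a minimal illustration, not the firmware's implementation: the flattened port array, the `cxl_port_info` type, and the forward-only search are all assumptions made for the example. Only the comparison of vendor ID and serial number comes from the source.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, simplified stand-in for one KtiVar->CxlInfo[][] entry. */
typedef struct {
    bool     in_cxl_mode;  /* port is already in CXL mode */
    uint16_t vendor_id;
    uint64_t serial_num;
} cxl_port_info;

/*
 * Search the ports after `current` for one whose device reports the same
 * vendor ID and serial number. Returns the index of the second port, or -1
 * if no such port exists (the device is not ganged).
 */
int find_ganged_port(const cxl_port_info *ports, size_t count, size_t current)
{
    assert(ports != NULL && current < count);
    for (size_t i = current + 1; i < count; i++) {
        if (!ports[i].in_cxl_mode) {
            continue;  /* not a CXL port, skip it */
        }
        if (ports[i].vendor_id == ports[current].vendor_id &&
            ports[i].serial_num == ports[current].serial_num) {
            return (int)i;
        }
    }
    return -1;
}
```

Two ports that report the same vendor ID and serial number are treated as two links of one physical device, which is why the serial number must be read during enumeration before this check runs.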

1.3. EnablePcieHierarchyForCxlDp()

- This routine programs the Secondary and Subordinate Bus registers in the CXL DP's configuration space, which enables the PCIe hierarchy behind the CXL DP.

/**

This routine will program the Secondary and Subordinate bus registers in CXL DP's

configuration space so as to enable the PCIe hierarchy behind the CXL DP.

@param[in] KtiInternalGlobal - KTIRC Internal Global data

@param[in] Socket - Socket ID

@param[in] Stack - Stack number

@retval KTI_SUCCESS

**/

KTI_STATUS

EFIAPI

EnablePcieHierarchyForCxlDp (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 Socket,

IN UINT8 Stack

)

{

UINT8 BusBase;

UINT32 RcrbBaseAddr;

EFI_STATUS Status;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

BusBase = KtiVar->CpuInfo[Socket].CpuRes.StackRes[Stack].BusBase;

// Get the RCRB base address

RcrbBaseAddr = (UINT32) GetRcrbBar (Socket, Stack, TYPE_CXL_RCRB);

if (RcrbBaseAddr == 0) {

return KTI_FAILURE;

}

//

// CXL DP's RCRB follows PCIe spec and looks like Type1 configuration space.

// Program CXL DP Secondary and Subordinate bus to be BusBase + 1.

//

// Program the Secondary and Subordinate Bus registers in the RCRB configuration space

Status = SetCxlDownstreamSecBus ((UINTN) RcrbBaseAddr, BusBase + 1);

Status |= SetCxlDownstreamSubBus ((UINTN) RcrbBaseAddr, BusBase + 1);

return EFI_ERROR (Status)?KTI_FAILURE:KTI_SUCCESS;

}
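Because the DP's RCRB looks like a Type 1 configuration header, the two registers sit at the standard bridge offsets 0x19 (Secondary Bus Number) and 0x1A (Subordinate Bus Number). The sketch below models the RCRB as a plain byte array. The function name and the array model are hypothetical, and real firmware would go through MMIO helpers such as the SetCxlDownstreamSecBus() call shown above.

```c
#include <assert.h>
#include <stdint.h>

/* Standard PCI Type 1 (bridge) configuration header offsets. */
#define PCI_SECONDARY_BUS_OFFSET   0x19
#define PCI_SUBORDINATE_BUS_OFFSET 0x1A

/*
 * Program Secondary and Subordinate bus numbers to bus_base + 1.
 * `rcrb` is a hypothetical in-memory model of the DP RCRB.
 */
void enable_hierarchy_behind_dp(volatile uint8_t *rcrb, uint8_t bus_base)
{
    assert(rcrb != 0);
    rcrb[PCI_SECONDARY_BUS_OFFSET]   = (uint8_t)(bus_base + 1);
    rcrb[PCI_SUBORDINATE_BUS_OFFSET] = (uint8_t)(bus_base + 1);
}
```

Setting both registers to the same value gives the DP a downstream range of exactly one bus, which is enough to reach the single CXL 1.1 device behind it.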

1.4. ProgramCXLRcrbBar()

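- Programs the CXL RCRB BAR for the specified CXL-enabled stack. The function performs three operations: 1. programs the RCRB register; 2. programs the Secondary and Subordinate Bus numbers for the DP; 3. indirectly programs the UP RCRB by issuing a memory access, because the upstream port captures the upper address bits [63:12] of the first memory access received after link training as the base address of its RCRB.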

/**

Routine to program the CXL RCRB BAR for the specified CXL enabled stack

This function performs 3 different operations.

1. Programs RCRB register

2. Programs Secondary and Subordinate Bus numbers for DP

3. Indirectly programs UP RCRB by issuing memory access operation.

Refer CXL 1.1 Rev. 1.1 Section 7.2.1.2. "the upstream port captures the

upper address bits[63:12] of the first memory access received after link

training as the base address for the upstream port RCRB"

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@param[in] Socket Socket Index

@param[in] Stack Stack number

@retval KTI_SUCCESS

**/

KTI_STATUS

EFIAPI

ProgramCXLRcrbBar (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 Socket,

IN UINT8 Stack

)

{

UINT32 CxlDpRcrbBar;

EFI_STATUS KtiStatus;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

CxlDpRcrbBar = KtiVar->CpuInfo[Socket].CpuRes.StackRes[Stack].MmiolBase;

KtiVar->CpuInfo[Socket].CpuRes.StackRes[Stack].RcrbBase = CxlDpRcrbBar;

//

// Set the RCRBBAR with the first 8KB mmiol within the stack

KtiStatus = SetRcrbBar (Socket, Stack, TYPE_CXL_RCRB, CxlDpRcrbBar);

if (EFI_ERROR(KtiStatus)) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nSet Rcrb Bar function returns %r\n", KtiStatus));

return KtiStatus;

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Program Secondary and Subordinate bus for DP.\n"));

// Call the previous function to program the Secondary and Subordinate buses

KtiStatus = EnablePcieHierarchyForCxlDp (KtiInternalGlobal, Socket, Stack);

if (KtiStatus != KTI_SUCCESS) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nPci Heirarchy configuration failed %r \n", KtiStatus));

return KTI_FAILURE;

}

// Only when the port is already in CXL mode

if (GetCxlStatus (Socket, Stack) == AlreadyInCxlMode) { //Only needed when CXL port enabled

//

// Set up the CXL UP RCRB BAR by trigger a read to the CXL UP RCRB BAR

// HW will help to set the BAR for CXL UP. Use MmioRead for now.

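// The upstream port captures the upper address bits [63:12] of the first memory access received after link training as its RCRB base address

// Trigger a read to set the CXL UP RCRB BAR base address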

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "Socket %d Stack %d Configure UP Rcrb start\n", Socket, Stack));

KtiMmioRead32 (CxlDpRcrbBar + CXL_RCRB_BAR_SIZE_PER_PORT);

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "Socket %d Stack %d Configure UP Rcrb end\n", Socket, Stack));

}

return KTI_SUCCESS;

}
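The indirect programming in step 3 comes down to two address calculations, sketched below. The 4KB per-port size is taken from the layout diagram in the next section and is assumed to be the value of CXL_RCRB_BAR_SIZE_PER_PORT. Both function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed value of CXL_RCRB_BAR_SIZE_PER_PORT: 4KB per port. */
#define RCRB_SIZE_PER_PORT 0x1000u

/* Address the host reads so that the upstream port latches its RCRB base. */
uint64_t up_rcrb_trigger_address(uint64_t dp_rcrb_base)
{
    return dp_rcrb_base + RCRB_SIZE_PER_PORT;
}

/* Base the upstream port captures: address bits [63:12] of that access. */
uint64_t captured_up_rcrb_base(uint64_t first_access_addr)
{
    return first_access_addr & ~(uint64_t)0xFFF;
}
```

Since only bits [63:12] are captured, a read anywhere inside the second 4KB page selects the same UP RCRB base. The source reads the first dword of that page.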

1.5. AllocateMmioForCxlPortCxl11()

- Allocates MMIO resources for the CXL 1.1 DP and UP RCRB and programs the MEMBAR0 registers.

/**

Routine to allocate MMIO resource and program the MEMBAR0 register for CXL DP and UP RCRB for CXL 1.1.

--------------------- ==> Base = KtiVar->CpuInfo[Socket].CpuRes.StackRes[Stack].MmiolBase

| DP RCRB(4KB) |

--------------------- ==> Base = DP RCRB Base + 4KB

| UP RCRB(4KB) |

--------------------- ==> Reserved to meet MEMBAR0 alignment

| Reserved |

| xxxKB |

--------------------- ==> DP MEMBAR0 Base

| DP MEMBAR0 |

| 128KB |

--------------------- ==> Base = DP MEMBAR0 Base + 128KB

| UP MEMBAR0 |

| 128KB |

---------------------

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@param[in] Socket Socket Index

@param[in] Stack Stack number

@retval KTI_SUCCESS - Function completed successfully

@retval KTI_FAILURE - Function failed

**/

KTI_STATUS

EFIAPI

AllocateMmioForCxlPortCxl11 (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 Socket,

IN UINT8 Stack

)

{

EFI_STATUS Status;

UINT32 DpRcrbBar, MemResBase;

UINT64 MemBar0Base, MemBar0Size;

// Get the RCRB base address

DpRcrbBar = (UINT32) GetRcrbBar (Socket, Stack, TYPE_CXL_RCRB);

if (DpRcrbBar == 0) {

return KTI_FAILURE;

}

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "\n Program MEMBAR0 for CXL Downstream port\n"));

// CXL_RCRB_BAR_SIZE is 0x2000 (8KB); the reserved region follows these 8KB

// The reserved region exists to align the MEMBAR0 start address; see ProgramMemBar0Register() in RcrbAccessLib.c

MemResBase = DpRcrbBar + CXL_RCRB_BAR_SIZE;

Status = ProgramMemBar0Register (DpRcrbBar,

CXL_PORT_RCRB_MEMBAR0_LOW_OFFSET,

MemResBase,

TRUE,

&MemBar0Base,

&MemBar0Size

);

//

// Enable PCICMD.mse for CXL DP port

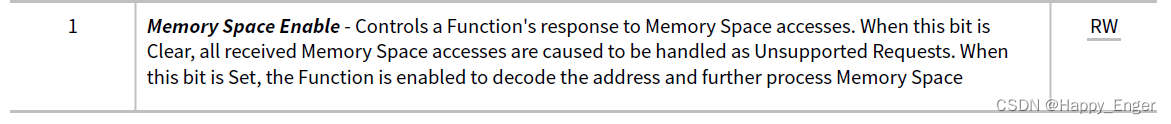

// Set Memory Space Enable

ProgramPciCommandRegister (Socket, DpRcrbBar, TRUE);

if (GetCxlStatus (Socket, Stack) == AlreadyInCxlMode) { //Only needed when CXL port enabled

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "\n Program MEMBAR0 for CXL Upstream port\n"));

MemResBase = (UINT32) (MemBar0Base + MemBar0Size);

//

// Per PCIe spec, the bridge assumes that the lower 20 address bits, Address[19:0],

// of the memory base address (not implemented in the Memory Base register) are zero.

// Similarly, the bridge assumes that the lower 20 address bits, Address[19:0], of the

// memory limit address (not implemented in the Memory Limit register) are F FFFFh. Thus,

// the bottom of the defined memory address range will be aligned to a 1MB boundary and the

// top of the defined memory address range will be one less than a 1 MB boundary.

//

// In short: the bottom of the range is 1MB aligned and the top is one less than a 1MB boundary

//

// CXL 1.1 Spec Chapter 7.2 Memory Mapped Registers requests that the RCRBs do not overlap

// with the MEMBAR0 regions. Also, note that the upstream port's MEMBAR0 region must fall

// within the range specified by the downstream port's memory base and limit register.

//

// In short: the RCRBs must not overlap the MEMBAR0 regions, and the UP MEMBAR0 region must lie inside the DP's memory base/limit window

//

// CXL DP bridge knows how to handle the memory transactions on below regions:

// The CXL DP bridge handles memory transactions to the following regions itself:

// (1) DP RCRB & MEMBAR0 region: both are targeting DP bridge itself, no need forward

// The DP RCRB and DP MEMBAR0 regions

// (2) UP RCRB region: HW knows it should forward such memory transaction to UP RCRB since

// DP/UP RCRB is a 8KB continuous region

// The UP RCRB region: HW forwards these transactions to the UP RCRB because the DP/UP RCRB is one contiguous 8KB region

// So above regions do not need to fall within the memory range(1MB aligned) specified by

// DP's Memory Base/Limit register.

// So these regions need not fall inside the 1MB-aligned range given by the DP's Memory Base/Limit registers

//

// Move the MemResBase to next 1MB boundary

// Align up to 1MB

MemResBase = (MemResBase + CXL_UP_MEMBAR0_ALIGNMENT - 1) & (~(CXL_UP_MEMBAR0_ALIGNMENT - 1));

// Program the UP MEMBAR0

Status |= ProgramMemBar0Register (DpRcrbBar + CXL_RCRB_BAR_SIZE_PER_PORT,

CXL_PORT_RCRB_MEMBAR0_LOW_OFFSET,

MemResBase,

TRUE,

&MemBar0Base,

&MemBar0Size

);

//

// Enable CXL11 upstream mem bar

//

Cxl11UpMemBarEnable (Socket, Stack, MemResBase);

//

// Enable PCICMD.mse for CXL UP port

// Set Memory Space Enable in the CXL UP port's Command register

ProgramPciCommandRegister (Socket, DpRcrbBar + CXL_RCRB_BAR_SIZE_PER_PORT, TRUE);

}

return EFI_ERROR (Status)?KTI_FAILURE:KTI_SUCCESS;

}
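The layout arithmetic in this function can be reproduced with a small helper. The sketch below assumes that CXL_UP_MEMBAR0_ALIGNMENT is 1MB, as the "Move the MemResBase to next 1MB boundary" comment suggests, and that ProgramMemBar0Register() places a BAR on a multiple of its own size. Neither value is confirmed by the listing, and the function names are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

#define RCRB_BAR_SIZE        0x2000u    /* DP + UP RCRB, 8KB in total */
#define UP_MEMBAR0_ALIGNMENT 0x100000u  /* 1MB, assumed value */

/* Round addr up to the next multiple of align (a power of two). */
uint32_t align_up(uint32_t addr, uint32_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (addr + align - 1) & ~(align - 1);
}

/* DP MEMBAR0 follows the 8KB RCRB region, naturally aligned (assumption). */
uint32_t dp_membar0_base(uint32_t dp_rcrb_base, uint32_t membar0_size)
{
    return align_up(dp_rcrb_base + RCRB_BAR_SIZE, membar0_size);
}

/* UP MEMBAR0 follows DP MEMBAR0, moved to the next 1MB boundary. */
uint32_t up_membar0_base(uint32_t dp_membar0, uint32_t membar0_size)
{
    return align_up(dp_membar0 + membar0_size, UP_MEMBAR0_ALIGNMENT);
}
```

With a DP RCRB at 0x90000000 and 128KB MEMBAR0 regions, the DP MEMBAR0 lands at 0x90020000 and the UP MEMBAR0 at 0x90100000. The gap between them is the price of the 1MB granularity of the bridge's Memory Base/Limit registers.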

1.6. EnumerateCxlDeviceCxl11()

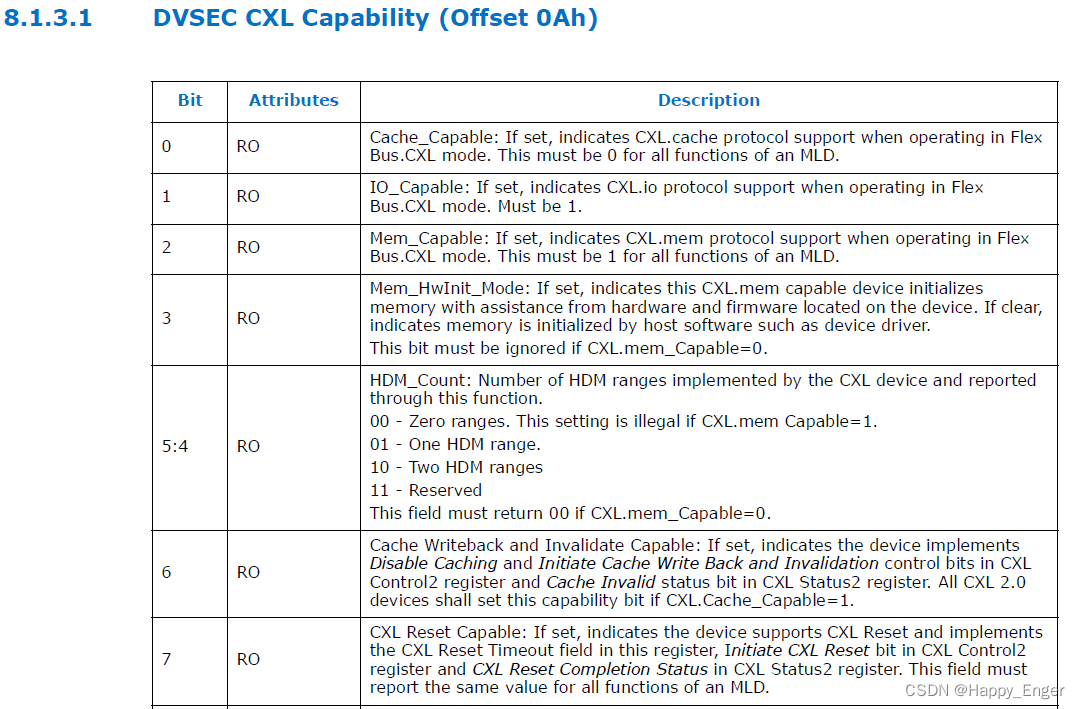

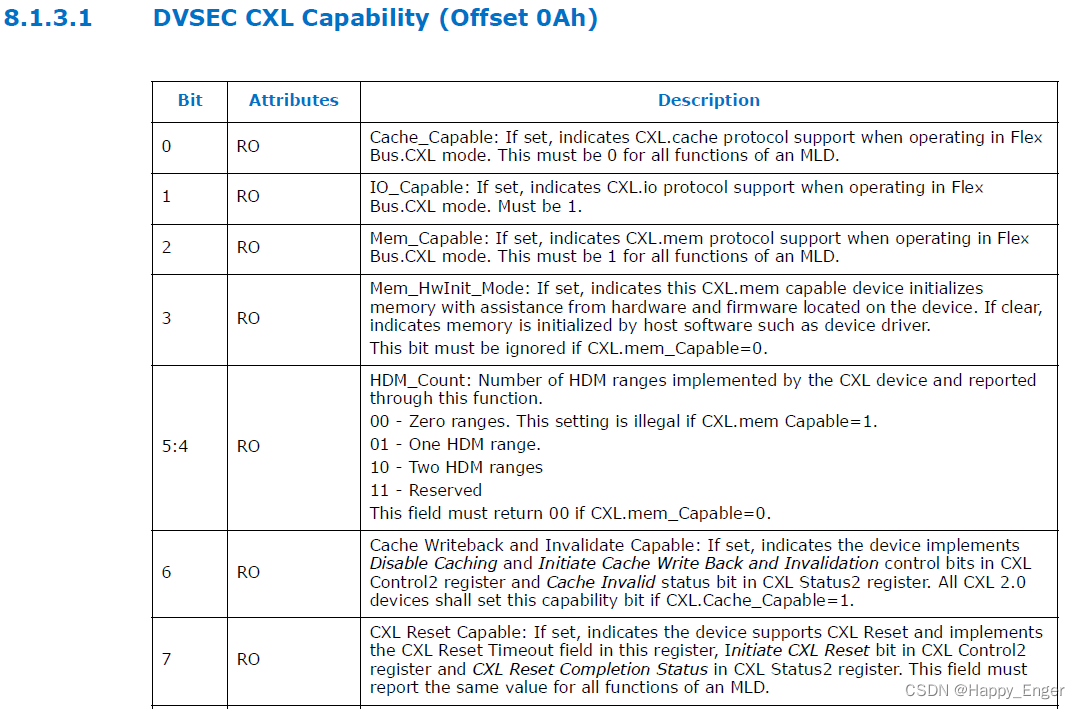

- Enumerates the CXL device under the CXL 1.1 DP and UP ports. It mainly reads the device's CXL_DEVICE_DVSEC DeviceCapability register and saves the result.

/**

Routine to enumerate the CXL devices under the specified CXL DP & UP port for Cxl1.1.

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@param[in] Socket Socket Index

@param[in] Stack Stack number

@retval KTI_SUCCESS

**/

KTI_STATUS

EFIAPI

EnumerateCxlDeviceCxl11 (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 Socket,

IN UINT8 Stack

)

{

CXL_DVSEC_FLEX_BUS_DEVICE_CAPABILITY CxlDevCap;

EFI_STATUS Status;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

// First check that the stack is in CXL mode

if (GetCxlStatus (Socket, Stack) != AlreadyInCxlMode) {

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "The Stack is not enabled as CXL!\n"));

return KTI_FAILURE;

}

//

// Check if the CXL endpoint device is existed

// Use the VID to check whether the device is present

KtiVar->CxlInfo[Socket][Stack].VendorId = GetCxlVid (Socket, Stack);

if (KtiVar->CxlInfo[Socket][Stack].VendorId == 0xFFFF) {

SetCxlStatus (Socket, Stack, NotInCxlMode);

ClearCxlBitMap (Socket, Stack);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "No CXL endpoint device is attached! Disable the CXL PORT\n"));

return KTI_FAILURE;

}

KtiVar->CxlInfo[Socket][Stack].SerialNum = GetCxlDevSerialNum (Socket, Stack);

// Read the 2-byte DeviceCapability register in the CXL 1.1 device's CXL_DEVICE_DVSEC

Status = Cxl11DeviceDvsecRegRead16 (

Socket,

Stack,

OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_DEVICE, DeviceCapability),

CXL_DEVICE_DVSEC,

&CxlDevCap.Uint16

);

if ((CxlDevCap.Uint16 == 0) || (Status != EFI_SUCCESS)) {

SetCxlStatus (Socket, Stack, NotInCxlMode);

ClearCxlBitMap (Socket, Stack);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1,

"Can't find DVSEC structure's Flex Bus Capability on the Cxl endpoint device! Disable the CXL Port\n"));

return KTI_FAILURE;

}

// The fields are shown in the figure above

if (CxlDevCap.Bits.HdmCount && (!CxlDevCap.Bits.MemCapable)) {

// An HDM count without .mem capability is an illegal setting

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL Capabilty Structure's HDM_Count is illegal!\n"));

} else {

KtiVar->CxlInfo[Socket][Stack].Hdm_Count = (UINT8) CxlDevCap.Bits.HdmCount;

}

// Record the remaining fields

KtiVar->CxlInfo[Socket][Stack].Cache_Capable = (UINT8) CxlDevCap.Bits.CacheCapable;

KtiVar->CxlInfo[Socket][Stack].Mem_Capable = (UINT8) CxlDevCap.Bits.MemCapable;

KtiVar->CxlInfo[Socket][Stack].Mem_HwInit_Mode = (UINT8) CxlDevCap.Bits.MemHwInitMode;

KtiVar->CxlInfo[Socket][Stack].Viral_Capable = (UINT8) CxlDevCap.Bits.ViralCapable;

return KTI_SUCCESS;

}
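The decoding done through CxlDevCap.Bits can be written out with explicit masks. The bit positions below (Cache_Capable at bit 0, Mem_Capable at bit 2, Mem_HwInit_Mode at bit 3, HDM_Count at bits 5:4, Viral_Capable at bit 14) are an assumption based on the CXL 1.1 DVSEC layout and should be checked against the specification and the figure above. The struct and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Assumed bit layout of the DVSEC Flex Bus DeviceCapability register. */
#define CAP_CACHE_CAPABLE   (1u << 0)
#define CAP_MEM_CAPABLE     (1u << 2)
#define CAP_MEM_HWINIT_MODE (1u << 3)
#define CAP_HDM_COUNT_SHIFT 4
#define CAP_HDM_COUNT_MASK  0x3u
#define CAP_VIRAL_CAPABLE   (1u << 14)

typedef struct {
    bool    cache_capable;
    bool    mem_capable;
    bool    mem_hwinit_mode;
    bool    viral_capable;
    bool    hdm_count_legal;  /* false when HDM_Count != 0 without .mem */
    uint8_t hdm_count;        /* left at 0 when the count is illegal */
} cxl_dev_cap;

cxl_dev_cap decode_device_capability(uint16_t reg)
{
    cxl_dev_cap cap = {0};
    uint8_t hdm = (uint8_t)((reg >> CAP_HDM_COUNT_SHIFT) & CAP_HDM_COUNT_MASK);

    cap.cache_capable   = (reg & CAP_CACHE_CAPABLE) != 0;
    cap.mem_capable     = (reg & CAP_MEM_CAPABLE) != 0;
    cap.mem_hwinit_mode = (reg & CAP_MEM_HWINIT_MODE) != 0;
    cap.viral_capable   = (reg & CAP_VIRAL_CAPABLE) != 0;

    /* Same rule as the source: an HDM count requires .mem capability. */
    cap.hdm_count_legal = !(hdm != 0 && !cap.mem_capable);
    if (cap.hdm_count_legal) {
        cap.hdm_count = hdm;
    }
    return cap;
}
```

As in the source, an illegal HDM count is only reported and not recorded. The remaining capability bits are still saved.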

1.7. DetectCxlTopology()

- Detects the CXL topology and fills in the CxlTopologyType, SecondPortSocket, and SecondPortStack fields of KtiVar->CxlInfo[CurrentSocket][CurrentStack].

/**

Routine to detect the CXL topology.

Note:

This routine must be invoked after CXL device enumeration.

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@retval KTI_SUCCESS

**/

KTI_STATUS

EFIAPI

DetectCxlTopology (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal

)

{

UINT8 CurrentSocket, CurrentStack;

UINT8 NextSocket, NextStack;

KTI_STATUS KtiStatus;

BOOLEAN IsGangedDevice;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

//

// Try to find the first valid CXL device if any

// Look for the first CXL device

KtiStatus = GetNextCxlDevice (

0,

0,

&NextSocket,

&NextStack,

TRUE

);

if (KtiStatus == KTI_FAILURE) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\nNot find any valid CXL device!\n"));

return KTI_SUCCESS;

}

while (KtiStatus == KTI_SUCCESS) {

CurrentSocket = NextSocket;

CurrentStack = NextStack;

if (KtiVar->CxlInfo[CurrentSocket][CurrentStack].CxlTopologyType == CXL_TOPOLOGY_UNDETERMINED) {

//

// Detect the topology type of the current CXL device if it is still undetermined

IsGangedDevice = IsGangedCxlDevice (

KtiInternalGlobal,

CurrentSocket,

CurrentStack,

&NextSocket,

&NextStack

);

if (IsGangedDevice) {

//

// This accelerator has two CXL links

// The accelerator has two links, so the same device was found on another socket/stack; record the topology type

if (CurrentSocket == NextSocket) {

KtiVar->CxlInfo[CurrentSocket][CurrentStack].CxlTopologyType = TWO_CXL_LINK_ONE_SOCKET;

KtiVar->CxlInfo[NextSocket][NextStack].CxlTopologyType = TWO_CXL_LINK_ONE_SOCKET;

} else {

KtiVar->CxlInfo[CurrentSocket][CurrentStack].CxlTopologyType = TWO_CXL_LINK_TWO_SOCKET;

KtiVar->CxlInfo[NextSocket][NextStack].CxlTopologyType = TWO_CXL_LINK_TWO_SOCKET;

}

KtiVar->CxlInfo[CurrentSocket][CurrentStack].SecondPortSocket = NextSocket;

KtiVar->CxlInfo[CurrentSocket][CurrentStack].SecondPortStack = NextStack;

KtiVar->CxlInfo[NextSocket][NextStack].SecondPortSocket = CurrentSocket;

KtiVar->CxlInfo[NextSocket][NextStack].SecondPortStack = CurrentStack;

} else {

//

// This accelerator has only one CXL link

KtiVar->CxlInfo[CurrentSocket][CurrentStack].CxlTopologyType = ONE_CXL_LINK_ONE_SOCKET;

KtiVar->CxlInfo[CurrentSocket][CurrentStack].SecondPortSocket = CurrentSocket; //Invalid field, make it point to itself

KtiVar->CxlInfo[CurrentSocket][CurrentStack].SecondPortStack = CurrentStack; //Invalid field, make it point to itself

}

}

KtiStatus = GetNextCxlDevice (

CurrentSocket,

CurrentStack,

&NextSocket,

&NextStack,

FALSE

);

}

return KTI_SUCCESS;

}
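The classification itself is a three-way decision on two inputs: whether the device is ganged and whether its second link lands on the same socket. The sketch below is a minimal restatement. The enum is a hypothetical stand-in for the firmware's topology constants, whose real values are not shown in the listing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical stand-ins for the firmware's topology constants. */
typedef enum {
    TOPO_ONE_LINK_ONE_SOCKET,
    TOPO_TWO_LINK_ONE_SOCKET,
    TOPO_TWO_LINK_TWO_SOCKET
} cxl_topology;

/* Classify a device from the result of the ganged-device check. */
cxl_topology classify_topology(bool ganged, uint8_t current_socket,
                               uint8_t second_socket)
{
    if (!ganged) {
        return TOPO_ONE_LINK_ONE_SOCKET;
    }
    return (current_socket == second_socket) ? TOPO_TWO_LINK_ONE_SOCKET
                                             : TOPO_TWO_LINK_TWO_SOCKET;
}
```

The source writes the result to both ports of a ganged pair. When the loop later reaches the second port, its type is no longer CXL_TOPOLOGY_UNDETERMINED and the detection is skipped.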

1.8. IsType3Device()

- Determines whether the device is a Type 3 device, that is, one that supports .mem but not .cache.

/**

IsType3Device function helps to find if CXL device supports Type3 device or not

using device capabilities.

@param[in] *CxlDevCap

@retval TRUE if the device is a Type 3 device, FALSE otherwise

**/

BOOLEAN

IsType3Device (

IN CXL_DEVICE_PARAMETER *CxlDevCap

)

{

return ((CxlDevCap->CacheEnable == 0) && (CxlDevCap->MemEnable == 1))? TRUE:FALSE;

}
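The same two capability bits distinguish all three CXL device types, not only Type 3. The sketch below extends the check using the conventional definitions (Type 1: .cache only, Type 2: .cache and .mem, Type 3: .mem only). The enum and function name are hypothetical and do not appear in CxlLib.c.

```c
#include <assert.h>
#include <stdbool.h>

typedef enum {
    CXL_TYPE_UNKNOWN,  /* neither .cache nor .mem */
    CXL_TYPE_1,        /* .cache only */
    CXL_TYPE_2,        /* .cache and .mem */
    CXL_TYPE_3         /* .mem only */
} cxl_device_type;

cxl_device_type classify_cxl_device(bool cache_capable, bool mem_capable)
{
    if (cache_capable) {
        return mem_capable ? CXL_TYPE_2 : CXL_TYPE_1;
    }
    return mem_capable ? CXL_TYPE_3 : CXL_TYPE_UNKNOWN;
}
```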

1.9. GetCxlDevCapCxl11()

- Reads DVSEC_ID_0 of the CXL 1.1 device and saves the .cache and .mem capabilities into CXL_DEVICE_PARAMETER *CxlDevCap.

/**

GetCxlDevCap reads CXL devices DVSEC_ID_0 capability for Cxl 1.1.

@param[in] Socket Device's socket number

@param[in] Stack Box Instance, 0 based

@param[in] *CxlDevCap Pointer to structure where the cap is returned

@retval KTI_SUCCESS Successfully read register

@retval KTI_FAILURE Registers read operation failed

**/

KTI_STATUS

EFIAPI

GetCxlDevCapCxl11 (

IN UINT8 Socket,

IN UINT8 Stack,

IN OUT CXL_DEVICE_PARAMETER *CxlDevCap

)

{

EFI_STATUS Status;

CXL_DVSEC_FLEX_BUS_DEVICE_CAPABILITY CxlDevCapReg;

//

//Step 1: Check if the CXL(IAL) device is Cache capable;

//During Cxl Device Enumeration, capabilities are read to KTIVAR

// Read the register

Status = Cxl11DeviceDvsecRegRead16 (

Socket,

Stack,

OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_DEVICE, DeviceCapability),

CXL_DEVICE_DVSEC,

&CxlDevCapReg.Uint16

);

// Save the result

CxlDevCap->CacheEnable = (UINT8)CxlDevCapReg.Bits.CacheCapable;

CxlDevCap->MemEnable = (UINT8)CxlDevCapReg.Bits.MemCapable;

return Status;

}

1.10. EnableCxlCacheCxl11()

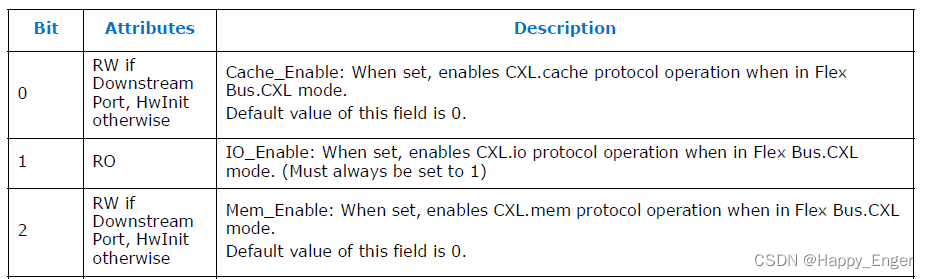

- If the CXL 1.1 device is .cache capable, this function enables .cache on the DP and UP ports and on the device itself.

/**

This function will enable the Cxl.$ if the CXL device is cache capable for Cxl 1.1.

@param[in] Socket Device's socket number.

@param[in] Stack Box Instance, 0 based.

@retval KTI_SUCCESS Successfully enabled Cxl.$.

@retval KTI_FAILURE Failed to enable Cxl.$.

**/

KTI_STATUS

EFIAPI

EnableCxlCacheCxl11 (

IN UINT8 Socket,

IN UINT8 Stack

)

{

EFI_STATUS Status;

CXL_DVSEC_FLEX_BUS_DEVICE_CONTROL CxlDevCtlRegSetMask, // both these locals

CxlDevCtlRegClearMask; // are for same purpose

CXL_1_1_DVSEC_FLEX_BUS_PORT_CONTROL CxlPortControl;

//

//Step 1: Set the Cache_Enable for CXL DP, UP and CXL device if CXL device is Cache capable

//

CxlPortControl.Uint16 = 0;

//Step 1.1: Set the Cache_Enable for CXL DP

// Set the Cache_Enable bit in the CXL DP's PortControl to enable the port's cache capability (see the figure above)

CxlPortControl.Bits.CacheEnable = 1;

Status = CxlPortDvsecRegisterAndThenOr16 (

Socket,

Stack,

FALSE,

OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_PORT, PortControl),

~CxlPortControl.Uint16,

CxlPortControl.Uint16

);

if (Status != EFI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\nFailed to set Control.Cache_Enable for CXL downstream port!\n"));

return KTI_FAILURE;

}

//Step 1.2: Set the Cache_Enable for CXL UP

// Set the Cache_Enable bit in the CXL UP's PortControl to enable the port's cache capability (see the figure above)

Status = CxlPortDvsecRegisterAndThenOr16 (

Socket,

Stack,

TRUE,

OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_PORT, PortControl),

~CxlPortControl.Uint16,

CxlPortControl.Uint16

);

if (Status != EFI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\nFailed to set Control.Cache_Enable for CXL upstream port!\n"));

return KTI_FAILURE;

}

//Step 1.3: Set the Cache_Enable for CXL Device

// Set the device's Cache_Enable bit to enable its cache capability (see the figure above)

CxlDevCtlRegSetMask.Uint16 = 0; // init w/ 0 for the fields to be set

CxlDevCtlRegSetMask.Bits.CacheEnable = 1;

//

//Step 2. Set the Cache_SF_Coverage, Cache_SF_Granularity and Cache_Clean_Eviction on the CXL device side.

//

// ToDo: Need to get the detailed settings for below fields

// Performance hint to the device.

CxlDevCtlRegClearMask.Uint16 = 0xFFFF; // init w/ 1 for the fields to be cleared

// 0x00: Indicates no Snoop Filter coverage on the Host

CxlDevCtlRegClearMask.Bits.CacheSfCoverage = 0;

// 000: Indicates 64B granular tracking on the Host

CxlDevCtlRegClearMask.Bits.CacheSfGranularity = 0;

// 0: Indicates clean evictions from device caches are needed for best performance

CxlDevCtlRegClearMask.Bits.CacheCleanEviction = 0;

// Write the register

Status = Cxl11DeviceDvsecRegAndThenOr16 (

Socket,

Stack,

OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_DEVICE, DeviceControl),

CXL_DEVICE_DVSEC,

CxlDevCtlRegSetMask.Uint16 ^ CxlDevCtlRegClearMask.Uint16, //XOR set & clear masks value for AND mask

CxlDevCtlRegSetMask.Uint16

);

if (Status != EFI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\nFailed to config Control register for CXL Device!\n"));

return KTI_FAILURE;

}

return KTI_SUCCESS;

}
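The XOR of the set mask and the clear mask is the least obvious step in this function, so it is worth tracing with concrete numbers. The sketch below assumes the DeviceControl layout Cache_Enable at bit 0, Cache_SF_Coverage at bits 7:3, Cache_SF_Granularity at bits 10:8, and Cache_Clean_Eviction at bit 11. That layout is an assumption to verify against the specification. The helper names are hypothetical models of an AND-then-OR register write.

```c
#include <assert.h>
#include <stdint.h>

/* Assumed bit layout of the DVSEC Flex Bus DeviceControl register. */
#define CTRL_CACHE_ENABLE         (1u << 0)
#define CTRL_CACHE_SF_COVERAGE    (0x1Fu << 3)
#define CTRL_CACHE_SF_GRANULARITY (0x7u << 8)
#define CTRL_CACHE_CLEAN_EVICTION (1u << 11)

/* Model of an AND-then-OR register update: new = (old & and) | or. */
uint16_t and_then_or16(uint16_t value, uint16_t and_mask, uint16_t or_mask)
{
    return (uint16_t)((value & and_mask) | or_mask);
}

uint16_t enable_cache_in_device_control(uint16_t old_value)
{
    /* Bits to set: 0 everywhere except the fields being set. */
    uint16_t set_mask = (uint16_t)CTRL_CACHE_ENABLE;
    /* Bits to clear: 1 everywhere except the fields being cleared. */
    uint16_t clear_mask = (uint16_t)(0xFFFFu & ~(CTRL_CACHE_SF_COVERAGE |
                                                 CTRL_CACHE_SF_GRANULARITY |
                                                 CTRL_CACHE_CLEAN_EVICTION));
    /* XOR also zeroes the set bits in the AND mask, so they are first
       cleared and then set again by the OR step. */
    uint16_t and_mask = (uint16_t)(set_mask ^ clear_mask);

    return and_then_or16(old_value, and_mask, set_mask);
}
```

Under these assumptions the clear mask is 0xF007 and the AND mask is 0xF006. Passing the clear mask directly as the AND mask would give the same final register value, because the OR step sets Cache_Enable either way.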

1.11. GetResDistHob()

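- Returns a pointer to the stack resource distribution HOB (STACK_RES_DISTRIBUTION_HOB), which it locates with GetFirstGuidHob() using gIioStackResDistributionHobGuid. The original note marks the purpose of this function as unknown.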

/**

Get Resource Distribution Hob

@param[in] None

@retval STACK_RES_DISTRIBUTION_HOB* Pointer to Hob

**/

STACK_RES_DISTRIBUTION_HOB *

GetResDistHob (

)

{

STACK_RES_DISTRIBUTION_HOB *StackResDistHob;

EFI_HOB_GUID_TYPE *GuidHob;

StackResDistHob = NULL;

GuidHob = GetFirstGuidHob (&gIioStackResDistributionHobGuid);

StackResDistHob = (STACK_RES_DISTRIBUTION_HOB *) GET_GUID_HOB_DATA (GuidHob);

return StackResDistHob;

}

1.12. InitializeComputeExpressLinkSpr()

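- Initializes CXL on SPR. The function detects the stacks already enabled for CXL 1.1 and configures the CXL uncore parameters. If the CXL EP device supports the .cache protocol, .cache is enabled in the bridges and in the device.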

/**

InitializeComputeExpressLinkSpr detects CXL11 enabled stacks and configure CXL uncore parameters.

If CXL EP device supports cxl cache protocol, cache protocol enabled in bridges and EP devices.

@param[in] SocketData - Pointer to socket specific data

@param[in] KtiInternalGlobal - Pointer to the KTI RC internal global structure

@retval None

**/

VOID

EFIAPI

InitializeComputeExpressLinkSpr (

IN KTI_SOCKET_DATA *SocketData,

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal

)

{

UINT8 SocketId, StackId;

BOOLEAN CxlDevPresent;

KTI_STATUS KtiStatus;

EFI_STATUS Status;

UINT8 CxlSecLvl;

UINT8 CxlStcge, CxlSdcge, CxlDlcge, CxlLtcge;

CXL_DEVICE_PARAMETER CxlDevCap;

KTI_HOST_IN *KtiSetup;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

KtiSetup = KTISETUP;

CxlDevPresent = FALSE;

for (SocketId = 0; SocketId < MAX_SOCKET; SocketId++) {

if (SocketData->Cpu[SocketId].Valid == FALSE) {

continue;

}

if (SocketData->Cpu[SocketId].SocType != SOCKET_TYPE_CPU) {

continue;

}

for (StackId = 0; StackId < MAX_CXL_PER_SOCKET; StackId++) {

if (!StackPresent (SocketId, StackId)) {

continue;

}

if (KtiVar->CxlInfo[SocketId][StackId].CxlStatus != AlreadyInCxlMode) {

continue;

}

// All checks passed: the port is in CXL mode

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\n\nCXL: Port location: Socket=%X, Stack=%X\n", SocketId, StackId));

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Program the RCRB Bar.\n"));

// Program the RCRB BAR: 1. program the RCRB register; 2. program the Secondary and Subordinate Bus numbers; 3. let the UP port capture its base address

KtiStatus = ProgramCXLRcrbBar (KtiInternalGlobal, SocketId, StackId);

if (KtiStatus == KTI_FAILURE) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Cxl Rcrb Program fails %r\n", KtiStatus));

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Enumerate CXL devices.\n"));

// Enumerate the device; this mainly saves part of the capability information from the CXL_DEVICE_DVSEC DeviceCapability register

KtiStatus = EnumerateCxlDevice (KtiInternalGlobal, SocketId, StackId);

if (KtiStatus == KTI_SUCCESS) {

CxlDevPresent = TRUE;

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Allocate MMIO resource for CXL DP and UP.\n"));

// Allocate MMIO resources for the CXL 1.1 DP and UP RCRB and program the MEMBAR0 registers

KtiStatus = AllocateMmioForCxlPort (KtiInternalGlobal, SocketId, StackId);

if (KtiStatus != KTI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\n\nProgramming MEMBAR0 failed!!\n\n"));

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Collect and clear Error Status for RAS.\n"));

// Collect and clear the error status for RAS; this touches platform-specific registers

Status = CxlCollectAndClearErrors (SocketId, StackId);

if (Status != EFI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\n\nFailed to collect and clear Error Status for RAS.\n\n"));

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Configure Security Policy.\n"));

CxlSecLvl = CXL_SECURITY_FULLY_TRUSTED;

if (KtiSetup->DfxParm.DfxCxlSecLvl < CXL_SECURITY_AUTO) {

CxlSecLvl = KtiSetup->DfxParm.DfxCxlSecLvl;

}

// Configure the CXL security policy through CSR registers

Status = CxlConfigureSecurityPolicy (SocketId, StackId, CxlSecLvl);

if (Status != EFI_SUCCESS) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\n\nFailed to configure CXL security policy!!\n\n"));

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nRead Cxl Cap\n"));

// Save the .cache and .mem capabilities into CxlDevCap

GetCxlDevCap (SocketId, StackId, &CxlDevCap);

if (CxlDevCap.CacheEnable == 1) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Enable CXL.$ if capable.\n"));

// Enable .cache if it is supported

KtiStatus = EnableCxlCache (SocketId, StackId);

if (KtiStatus == KTI_SUCCESS) {

// Record the success

KtiVar->CxlInfo[SocketId][StackId].Cache_Enabled = 1;

}

}

if (KtiInternalGlobal->CpuType == CPU_EMRSP) {

// determines prefetch enable or disable based on CXM memory type.

// If it is a Type 3 device, set some KtiVar fields

DeterminePrefetchPolicy (KtiInternalGlobal, &CxlDevCap);

}

// Configure link power management through CSR registers

ConfigureLinkPowerManagement (SocketId, StackId);

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Configure the CXL.Arb-Mux Clock Gating Enable bits\n"));

CxlStcge = CxlSdcge = CxlDlcge = CxlLtcge = KTI_ENABLE;

if (KtiSetup->DfxParm.DfxCxlStcge < KTI_AUTO) {

CxlStcge = KtiSetup->DfxParm.DfxCxlStcge;

}

if (KtiSetup->DfxParm.DfxCxlSdcge < KTI_AUTO) {

CxlSdcge = KtiSetup->DfxParm.DfxCxlSdcge;

}

if (KtiSetup->DfxParm.DfxCxlDlcge < KTI_AUTO) {

CxlDlcge = KtiSetup->DfxParm.DfxCxlDlcge;

}

if (KtiSetup->DfxParm.DfxCxlLtcge < KTI_AUTO) {

CxlLtcge = KtiSetup->DfxParm.DfxCxlLtcge;

}

// Configure the CXL ARB_MUX clock gating through UsraCsr registers

ConfigureCxlArbMuxCge (SocketId, StackId, CxlStcge, CxlSdcge, CxlDlcge, CxlLtcge);

//

// Silicon Workaround

// Platform specific

WorkaroundWithCxlEpDeviceInstalled (SocketId, KtiInternalGlobal->TotCha[SocketId]);

if (IsSiliconWorkaroundEnabled ("S22011750809")) {

ConfigureLinkAckTimer(SocketId, StackId);

}

}

}

}

if (CxlDevPresent) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Detect topology.\n"));

// A device is present; detect the device topology

DetectCxlTopology (KtiInternalGlobal);

}

}
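The security level and the four clock-gating knobs all follow one pattern: start from a default and let a setup (DFX) option override it only when that option holds a value below the "auto" sentinel. A minimal sketch of the pattern follows. The function name and the numeric values in the usage note are hypothetical, since the listing does not show the values of KTI_AUTO or CXL_SECURITY_AUTO.

```c
#include <assert.h>
#include <stdint.h>

/*
 * Return the setup value when it is an explicit choice (below the "auto"
 * sentinel); otherwise fall back to the built-in default.
 */
uint8_t resolve_setup_value(uint8_t setup_value, uint8_t auto_sentinel,
                            uint8_t default_value)
{
    return (setup_value < auto_sentinel) ? setup_value : default_value;
}
```

For example, with an assumed sentinel of 2 and a default of 1 (enabled), a setup value of 0 disables the feature, while a setup value of 2 ("auto") keeps the default.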

1.13. ProgramSncRegistersOnCxl()

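- Platform-specific register programming for which no documentation is available yet. From the code: for every stack that is in CXL mode with .cache enabled, the SNC settings are read from a reference IIO stack and written to the corresponding CXL registers.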

/**

Program SNC registers on CXL

@param[in] KtiInternalGlobal - KTIRC Internal Global data

@param[in] SocketId - Socket

@retval KTI_SUCCESS

**/

KTI_STATUS

EFIAPI

ProgramSncRegistersOnCxl (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 SocketId

)

{

UINT8 StackId;

UINT8 ReferenceStackId;

UINT32 Data32;

UINT32 Data32_Inst2;

UINT8 SncBaseIndex;

UINT8 NumChaPerCluster;

UINT8 BaseChaCluster1;

UINT8 BaseChaCluster2;

UINT8 BaseChaCluster3;

UINT8 BaseChaCluster4;

UINT8 BaseChaCluster5;

UINT8 UmaClusterEn;

UINT8 XorDefeatur;

UINT32 TwoLmMask;

UINT8 ChaCount;

EFI_STATUS Status;

KTI_HOST_OUT *KtiVar;

KtiVar = KTIVAR;

ChaCount = GetTotChaCount (SocketId);

for (ReferenceStackId = 0; ReferenceStackId < MAX_IIO_STACK; ReferenceStackId++) {

if (StackPresent (SocketId, ReferenceStackId)) {

break;

}

}

for (StackId = 0; StackId < MAX_CXL_PER_SOCKET; StackId++) {

if ((GetCxlStatus (SocketId, StackId) == AlreadyInCxlMode) &&

(KtiVar->CxlInfo[SocketId][StackId].Cache_Enabled == 1)) {

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "Program CXL SNC registers for socket: %d, stack: %d\n", SocketId, StackId));

for (SncBaseIndex = 0; SncBaseIndex <= MAX_CLUSTERS; SncBaseIndex++) {

//

// First read from IIO register, then write to CXL register

//

Status = IioGetSncIdx (SocketId, ReferenceStackId, SncBaseIndex, &Data32);

if (EFI_ERROR(Status)) {

DEBUG ((DEBUG_ERROR, "%r error returned from IioGetSncIdx for Index = %d\n", Status, SncBaseIndex));

break;

}

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL SNC Base Address %d: 0x%08X \n", (SncBaseIndex + 1), Data32));

CxlSetSncBaseAddr (SocketId, StackId, SncBaseIndex, Data32);

}

IioGetSncUpperBase (SocketId, ReferenceStackId, &Data32, &Data32_Inst2);

CxlSetSncUpperBase (SocketId, StackId, Data32);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL SNC Upper Base Address: 0x%08X \n", Data32));

Data32 = IioGetCtrSncCfg (SocketId, ReferenceStackId);

CxlSetSncCfg (SocketId, StackId, Data32);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL_SNC_CONFIG: 0x%08X \n", Data32));

IioGetSncUncoreCfg (SocketId, ReferenceStackId, &NumChaPerCluster, &BaseChaCluster1, &BaseChaCluster2, &BaseChaCluster3, &BaseChaCluster4, &BaseChaCluster5);

CxlSetSncUncoreCfg (SocketId, StackId, NumChaPerCluster, BaseChaCluster1, BaseChaCluster2, BaseChaCluster3); // CxlSocLib is SPR specific

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL_UNCORE_SNC_CONFIG: NumChaPerCluster:%d, BaseChaCluster1:%d, BaseChaCluster2:%d, BaseChaCluster3:%d\n", NumChaPerCluster, BaseChaCluster1, BaseChaCluster2, BaseChaCluster3));

IioGetUmaClusterCfg (SocketId, ReferenceStackId, &UmaClusterEn, &XorDefeatur);

CxlSetUmaClusterCfg (SocketId, StackId, UmaClusterEn, XorDefeatur);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL_UMA_CLUSTER_CFG: UmaClusterEn: %d, XorDefeatur: %d\n", UmaClusterEn, XorDefeatur));

IioGetSnc2LmAddrMask(SocketId , ReferenceStackId, &TwoLmMask);

CxlSetHashAddrMask (SocketId, StackId, TwoLmMask, ChaCount);

KtiDebugPrintInfo1 ((KTI_DEBUG_INFO1, "CXL_HASHADDRMASK: TwoLmMask: %d, ChaCount: %d\n", TwoLmMask, ChaCount));

}

}

return KTI_SUCCESS;

}

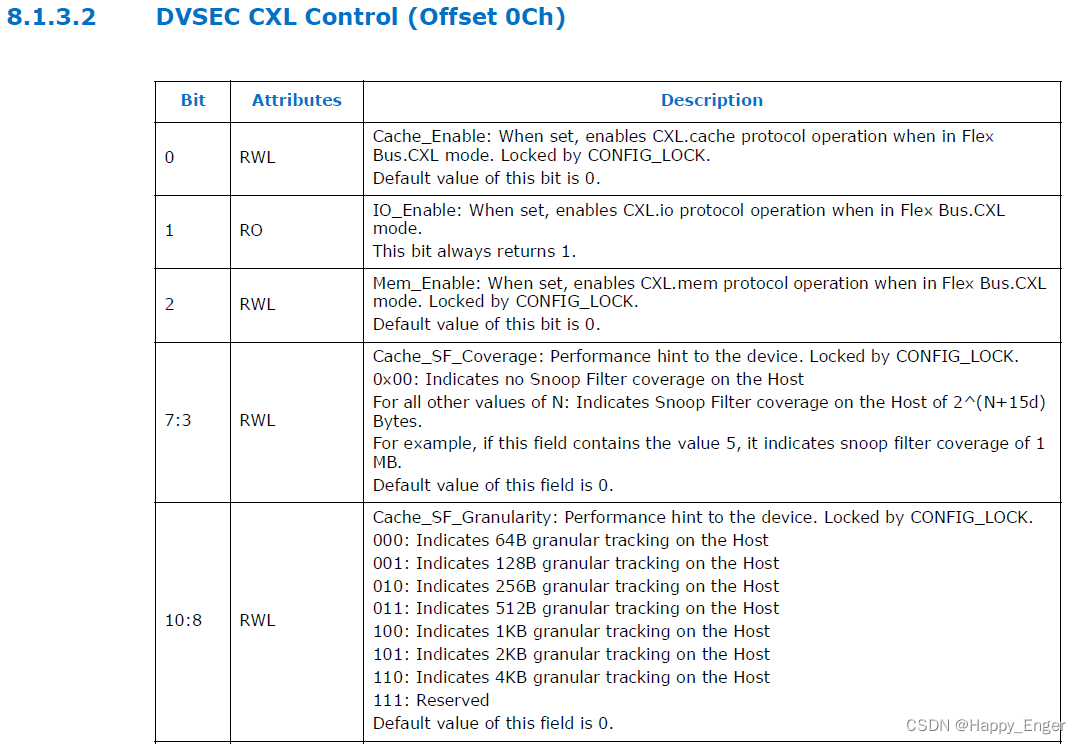

1.14. ConfigureCxl20HieForCxl11()

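- Configures the CXL 2.0 bridge devices that a CXL 1.1 device is connected behind, mainly by enabling the .cache protocol. In a CXL 2.0 bus hierarchy, a CXL 1.1 device may be connected behind a bridge. In that configuration, if several CXL 2.0 bridges sit between the root port and the CXL 1.1 device, every one of them must be configured for the CXL 1.1 device to function properly.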

/**

ConfigureCxl20HieForCxl11 configures CXL20 bridge devices in which CXL11 device is

connected to. In CXL20 bus hierarchy, there may be CXL11 device connected behind

CXL20 bridge. In this configuration, if there are multiple CXL20 bridges exist

between rootport and CXL11 device, all the CXL20 bridges in between must be

configured for proper CXL11 device functionality.

This function, enables cache protocol for CXL20 bridges in CXL11 physical hierarchy

@param[in] SocketId Cpu Socket to be configured

@param[in] StackId Stack number to be configured

@param[in] Cxl11Bus Cxl 11 Downstream port bus number

@retval None

**/

VOID

ConfigureCxl20HieForCxl11 (

IN UINT8 SocketId,

IN UINT8 StackId,

IN UINT8 Cxl11Bus

)

{

KTI_STACK_RESOURCE StackResource;

UINT8 Bus;

UINT8 Dev;

UINT8 Fun;

UINT8 LinkMode;

UINT16 Data16;

EFI_STATUS Status;

CXL_2_0_DVSEC_FLEX_BUS_PORT_CONTROL PortCtrl;

CXL_2_0_DVSEC_FLEX_BUS_PORT_CONTROL PortCtrlTemp;

// Get the stack resources: bus, memory, and I/O

GetStackResource (SocketId, StackId, &StackResource);

CXL_DEBUG_LOG ("\tCxl20 bridge in Cxl11 start\n");

//

// Scan through CXL20 bus hierarchy

// Walk the CXL 2.0 bus hierarchy

for (Bus = StackResource.BusBase; Bus < StackResource.BusLimit; Bus++) {

// Walk all 32 devices

for (Dev = 0; Dev < 32; Dev++) {

// Walk all 8 functions

for (Fun = 0; Fun < 8; Fun++) {

// Check for device presence

// Use the BDF to check whether the device is present

if (!PciIsDevicePresentBdf (SocketId, Bus, Dev, Fun)) {

continue;

}

// Check for Bridge device

// Check whether it is a bridge

if (IsPciBridgeUsingBdf (SocketId, Bus, Dev, Fun)) {

// Check for CXL 20 capability

CXL_DEBUG_LOG ("\tBridge Device found %x %x %x\n", Bus, Dev, Fun);

// Determine the link mode from the Cxl2p0Enable bit in the bridge port's DVSEC

LinkMode = GetCxl20PortOperatingMode (SocketId, Bus, Dev, Fun);

if (LinkMode != CXL20_MODE) {

CXL_DEBUG_LOG ("\t%x %x %x is not operating in CXL20 mode\n", Bus, Dev, Fun);

continue;

}

// Read Alt Bus base and Bus Limit

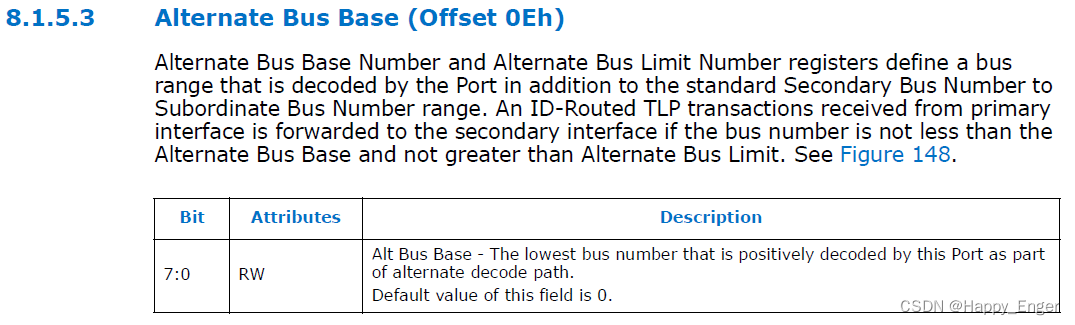

// Read Alt Bus Base and Alt Bus Limit; the register is shown in the figure above

Status = CxlDvsecRegRead (SocketId, Bus, Dev, Fun, CXL_PORT_DVSEC, \

OFFSET_OF(CXL_2_0_EXTENSIONS_DVSEC_PORTS ,AltBusBase), \

&Data16, UsraWidth16);

if (EFI_ERROR(Status)) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\t %r error while reading to Port Dvsec\n", \

Status));

continue;

} else {

CXL_DEBUG_LOG ("\tAlt resource bus range for this bridge device is %x to %x\n", \

(UINT8)Data16, (UINT8)(Data16 >> 8));

}

//Check cxl11 bus is in Bridges's Alt Bus range

// Check whether the CXL 1.1 bus lies within the bridge's alternate bus range

if (Cxl11Bus > (UINT8)Data16 && Cxl11Bus < (UINT8)(Data16 >> 8)) {

// Enable Cache protocol for this cxl bridge

// Enable the .cache protocol for this CXL bridge

PortCtrl.Bits.CacheEnable = 1;

PortCtrlTemp.Data16 = ~PortCtrl.Data16;

Status = CxlDvsecRegAndThenOr ( SocketId, Bus, Dev, Fun, CXL_PORT_DVSEC, \

OFFSET_OF(CXL_2_0_DVSEC_FLEX_BUS_PORT, PortControl), \

&PortCtrlTemp.Data16, &PortCtrl.Data16, UsraWidth16);

if (EFI_ERROR(Status)) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\t %r error while writing Port Control\n", \

Status));

continue;

}

}

}

// Check for Multi-Function Device

// Check whether this is a multi-function device

if ((Fun == 0) && (!PciIsMultiFunDevBdf (SocketId, Bus, Dev, Fun))) {

// Not a multi-function device: function 0 is the only one, so stop scanning functions

break;

}

}

}

}

CXL_DEBUG_LOG ("\tCxl20 bridge in Cxl11 end\n");

}
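
The Alt Bus check in the listing above can be sketched in isolation. This is a minimal illustration, not the library's code: `ALT_BUS_RANGE`, `DecodeAltBusRange`, and `IsBusStrictlyInside` are hypothetical names. It only assumes what the listing itself shows, namely that the 16-bit value read at `AltBusBase` carries the base in its low byte and the limit in its high byte, and that the comparison is strict on both ends.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical container for the two bytes packed into the 16-bit read. */
typedef struct {
  uint8_t Base;   /* low byte: Alt Bus Base   */
  uint8_t Limit;  /* high byte: Alt Bus Limit */
} ALT_BUS_RANGE;

/* Split the 16-bit DVSEC value the same way the listing's debug print does. */
static ALT_BUS_RANGE DecodeAltBusRange(uint16_t Data16) {
  ALT_BUS_RANGE Range;
  Range.Base  = (uint8_t)Data16;
  Range.Limit = (uint8_t)(Data16 >> 8);
  return Range;
}

/* Same strict comparison as the listing: the two end points do not match. */
static bool IsBusStrictlyInside(uint8_t Bus, const ALT_BUS_RANGE *Range) {
  assert(Range != NULL);
  return (Bus > Range->Base) && (Bus < Range->Limit);
}
```

With a value of `0x4F40` the range decodes to `0x40`..`0x4F`, and bus `0x41` matches while `0x40` and `0x4F` do not. Whether excluding the end points is intentional cannot be determined from the listing alone.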

1.15. ConfigureCxl11Hierarchy()

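- This function configures the CXL 1.1 EP device and the bridge devices so that they work correctly. It first checks whether a CXL device exists and returns an error status if it does not. If the device exists, it checks whether the EP supports the .cache protocol; if so, it enables .cache on the EP and on the CXL 1.1 UP and DP. In addition, if any CXL 2.0 bridges exist in the physical hierarchy, their .cache protocol is enabled as well.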

/**

ConfigureCxl11Hierarchy configures CXL11 end point device and bridge devices for proper

functionality. It checks if CXL device exist, if not exist, return with error status.

If CXL device exist, it checks for CXL cache protocol support. If the end point device

supports cache protocol, enable cache protocol in it. And also, enable cache protocol

in CXL11 Up and Dp. In addition, if there are any other CXL20 bridges exist in the

physical hierarchy, cache protocol in CXL20 bridges is enabled.

@param[in] KtiInternalGlobal Kti Host Data structure

@param[in] *ResEntry Resource Pool for CXL11 hierarchy to be configured

@param[in] SocketId CPU Socket number to be configured

@param[in] StackId Stack number where CXL11 hierarchy exist

@retval KtiStatus KTI_FAILURE - No CXL devices found or error while configuring

KTI_SUCCESS - CXL11 hierarchy configured successfully

**/

KTI_STATUS

ConfigureCxl11Hierarchy (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN STACK_RES_DISTRIBUTION *ResEntry,

IN UINT8 SocketId,

IN UINT8 StackId

)

{

UINT8 Bus;

UINT16 VendorId;

UINT16 Data16;

EFI_STATUS Status;

CXL_DVSEC_FLEX_BUS_DEVICE_CAPABILITY DeviceCap;

CXL_DVSEC_FLEX_BUS_DEVICE_CONTROL DeviceCtrl = {0};

CXL_1_1_DVSEC_FLEX_BUS_PORT_CONTROL PortCtrl;

CXL_DEBUG_LOG ("\tConfigure CXL11 hierarchy start\n");

//

// Check if CXL End point Device exist

// Check whether a CXL EP device exists

Bus = GetCxlDPSecBus ((UINT32)ResEntry->RcrbBase);

// Read the CXL device's Vendor ID

VendorId = ReadCxlVenId (SocketId, Bus);

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\t\tCxl11 device FOUND at Bus %x and Vendor Id: %X\n", \

Bus, VendorId));

if (VendorId == 0xFFFF) {

// No device present: return an error status

CXL_DEBUG_LOG ("\t\tNo Cxl11 end point device found under bus %x exit without config\n", Bus);

return KTI_FAILURE;

}

//

// Read CXL Device Capability

// Read the device capability (CXL_DEVICE_DVSEC:CXL_1_1_DVSEC_FLEX_BUS_DEVICE:DeviceCapability); some of the register fields are shown in the figure above

Status = CxlDvsecRegRead (SocketId, Bus, CXL_DEV_DEV, CXL_DEV_FUNC, \

CXL_DEVICE_DVSEC, OFFSET_OF (CXL_1_1_DVSEC_FLEX_BUS_DEVICE, DeviceCapability), \

&DeviceCap.Uint16, UsraWidth16);

if (EFI_ERROR (Status)) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\t\t %r error while reading Flex bus Device Capability\n",

Status));

return KTI_FAILURE;

} else {

CXL_DEBUG_LOG ("\t\tFlex Bus Device Capability: %X\n", DeviceCap.Uint16);

}

//

// Check CXL Device Capability

// Check the device capability

if (DeviceCap.Bits.CacheCapable == 1) {

// The device supports the .cache protocol

CXL_DEBUG_LOG ("\t\tCxl device supports cache protocol. configure cache protocol\n");

//

// Enable Cache protocol

// Enable the .cache protocol

Data16 = 0xFFFF;

DeviceCtrl.Bits.CacheEnable = 1;

// Ep Device

Status = CxlDvsecRegAndThenOr (SocketId, Bus, CXL_DEV_DEV, CXL_DEV_FUNC, \

CXL_DEVICE_DVSEC, OFFSET_OF(CXL_1_1_DVSEC_FLEX_BUS_DEVICE, DeviceControl), \

&Data16, &DeviceCtrl.Uint16, UsraWidth16);

if (EFI_ERROR(Status)) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\t\t %r error while writing to Flex bus Device Control\n",

Status));

return KTI_FAILURE;

}

//

// Enable Cache protocol in Down stream port

// Enable the .cache protocol on the DP

CXL_DEBUG_LOG ("\t\tConfigure cache protocol on CXL11 DSP\n");

PortCtrl.Bits.CacheEnable = 1;

RcrbDvsecRegBlockAndThenOr ((UINT32)ResEntry->RcrbBase, \

FLEX_BUS_PORT_DVSEC_ID, \

OFFSET_OF(CXL_1_1_DVSEC_FLEX_BUS_PORT, PortControl), \

UsraWidth16, ~PortCtrl.Uint16, PortCtrl.Uint16);

//

// Enable Cache protocol in Up stream port

// Enable the .cache protocol on the UP

CXL_DEBUG_LOG ("\t\tConfigure cache protocol on CXL11 USP\n");

RcrbDvsecRegBlockAndThenOr ((UINT32)ResEntry->RcrbBase + CXL_RCRB_BAR_SIZE_PER_PORT, \

FLEX_BUS_PORT_DVSEC_ID, \

OFFSET_OF(CXL_1_1_DVSEC_FLEX_BUS_PORT, PortControl), \

UsraWidth16, ~PortCtrl.Uint16, PortCtrl.Uint16);

//

// Enable Cache protocol in CXL20 hierarchy

// Enable .cache on the CXL 2.0 bridges

CXL_DEBUG_LOG ("\t\tConfigure Cxl20 switches and bridges in CXL11 path\n");

ConfigureCxl20HieForCxl11 (SocketId, StackId, Bus);

}

// Configure the component registers (security policy) of the DP and the UP

ConfigureCxlCompRegsRcrb(KtiInternalGlobal, (UINT32)ResEntry->RcrbBase); // DP

ConfigureCxlCompRegsRcrb(KtiInternalGlobal, (UINT32)ResEntry->RcrbBase + CXL_RCRB_BAR_SIZE_PER_PORT); // UP

CXL_DEBUG_LOG ("\tConfigure CXL11 hierarchy end\n");

return KTI_SUCCESS;

}
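
The AND-then-OR register update used throughout this function can be sketched as a plain read-modify-write on a 16-bit value. This is a minimal sketch under stated assumptions: `RegAndThenOr16` and `EnableCache` are hypothetical helpers that operate on a variable rather than on a DVSEC register, and placing CacheEnable at bit 0 is an assumption made for illustration. Note that in the listing as quoted, `DeviceCtrl` is zero-initialized but `PortCtrl` is not cleared before `PortCtrl.Bits.CacheEnable = 1`, so the masks derived from `PortCtrl` depend on its other bits; the sketch starts from a zeroed control value to avoid that.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_ENABLE_BIT ((uint16_t)0x0001)  /* assumed position of CacheEnable */

/* Generic read-modify-write: keep the bits selected by AndMask, then set OrMask. */
static void RegAndThenOr16(uint16_t *Reg, uint16_t AndMask, uint16_t OrMask) {
  assert(Reg != NULL);
  *Reg = (uint16_t)((*Reg & AndMask) | OrMask);
}

/* Start from a zeroed control value so both masks depend only on the bit being set. */
static void EnableCache(uint16_t *Reg) {
  uint16_t Ctrl = 0;
  Ctrl |= CACHE_ENABLE_BIT;
  RegAndThenOr16(Reg, (uint16_t)~Ctrl, Ctrl);
}
```

Using `0xFFFF` as the AND mask (as the EP path does) and using `~Ctrl` (as the DP/UP path does) give the same result when only one bit is being set, because the OR step sets that bit either way.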

1.16. ConfigureCxl20Device()

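- Configures a CXL 2.0 EP device. It checks whether the given device supports the .cache protocol, enables it if so, and records the resulting .cache enable state in the DevicePara parameter.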

/**

ConfigureCxl20Device configures CXL end point device. It checks if given CXL device

supports cache protocol, enables it. Also the device capability is updated to

DevicePara structure to communicate to parent.

@param[in] Seg Pci Segment or CPU Socket number to configure CXL20 hierarchy

@param[in] Bus Pci Bus number to be configured

@param[in] Dev Pci Device number to be configured

@param[in] Fun Pci Function number to be configured

@param[in] *DevicePara Device Parameter structure will be updated with CXL device

capabilities. It is used to communicate to its parent

@retval None

**/

VOID

ConfigureCxl20Device (

UINT8 Seg,

UINT8 Bus,

UINT8 Dev,

UINT8 Fun,

CXL_DEVICE_PARAMETER *DevicePara

)

{

UINT16 Data16;

EFI_STATUS Status;

USRA_ADDRESS CxlDevPciAddress;

CXL_2_0_DVSEC_FLEX_BUS_DEVICE_CAPABILITY DevCap;

CXL_2_0_DVSEC_FLEX_BUS_DEVICE_CONTROL DevCtrl;

CXL_DEBUG_LOG ("\t\tConfig Cxl cacheprotocol in device seg %x Bus %x Dev %x Fun %x start\n", \

Seg, Bus, Dev, Fun);

DevCtrl.Data16 = 0;

// Read the DVSEC register CXL_DEVICE_DVSEC:CXL_2_0_DVSEC_FLEX_BUS_DEVICE:DeviceCapability

Status = CxlDvsecRegRead (Seg, Bus, Dev, Fun, CXL_DEVICE_DVSEC, \

OFFSET_OF(CXL_2_0_DVSEC_FLEX_BUS_DEVICE, DeviceCapability), \

&DevCap.Data16, UsraWidth16);

if (EFI_ERROR (Status)) {

CXL_DEBUG_LOG ("\t\t%r error while reading Device cap reg\n", Status);

CXL_DEBUG_LOG ("\t\tThis is non-CXL device\n");

return;

} else {

CXL_DEBUG_LOG ("\t\tDevice Capabilty = %X\n", DevCap.Data16);

CXL_DEBUG_LOG ("\t\tCacheCapable = %X\n", DevCap.Bits.CacheCapable);

}

// Build the PCIe physical address from the SBDF

GenerateBasePciePhyAddress (Seg, Bus, Dev, Fun, \

UsraWidth16, &CxlDevPciAddress);

CxlDevPciAddress.Pcie.Offset = PCI_VENDOR_ID_OFFSET;

// Read the device's Vendor ID

RegisterRead (&CxlDevPciAddress, &Data16);

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\tCxl20 device FOUND at Bus %x Dev %x Fun %d Vendor Id: %X\n", \

Bus, Dev, Fun, Data16));

if (DevCap.Bits.CacheCapable == 1 && IioDfxCxlIsProtocolEnabled (Bus, CxlCache)) {

// The device is .cache capable and the .cache protocol is enabled for its port

DevCtrl.Bits.CacheEnable = DevCap.Bits.CacheCapable;

Data16 = ~DevCtrl.Bits.CacheEnable;

// Enable the .cache protocol on the device

Status = CxlDvsecRegAndThenOr (Seg, Bus, Dev, Fun, CXL_DEVICE_DVSEC, \

OFFSET_OF(CXL_2_0_DVSEC_FLEX_BUS_DEVICE, DeviceControl), \

&Data16, &DevCtrl.Data16, UsraWidth16);

if (EFI_ERROR(Status)) {

CXL_DEBUG_LOG ("\t\t%r error while writing device ctrl reg\n", Status);

return;

} else {

CXL_DEBUG_LOG ("\t\tDevice cache protocol enabled\n");

}

// Update the corresponding field of the parameter structure

DevicePara->CacheEnable = (UINT8)DevCap.Bits.CacheCapable;

} else {

CXL_DEBUG_LOG ("\t\tCXL Cache is disabled in setup for Bus = %X\n", Bus);

}

}
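
Two details of this function are easy to miss: the gate that decides whether .cache is enabled, and the way the AND mask is derived from a one-bit field. The sketch below isolates both. `CTRL_BITS`, `AndMaskFromEnable`, and `ShouldEnableCache` are hypothetical names, and the bit-field struct is only a stand-in for the real `Bits` view, whose layout is not shown in this article.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for the Bits view of a 16-bit control register. */
typedef struct {
  unsigned CacheEnable : 1;
  unsigned Reserved    : 15;
} CTRL_BITS;

/* A 1-bit field is promoted to int before '~' is applied, so ~1 is -2,
   which becomes 0xFFFE once narrowed back to 16 bits. */
static uint16_t AndMaskFromEnable(const CTRL_BITS *Bits) {
  assert(Bits != NULL);
  return (uint16_t)~Bits->CacheEnable;
}

/* Gate from the listing: the device must be capable AND the protocol enabled. */
static bool ShouldEnableCache(bool CacheCapable, bool ProtocolEnabled) {
  return CacheCapable && ProtocolEnabled;
}
```

The resulting mask keeps every other bit of the control register and clears only the lowest one before the OR step sets it again, which matches the intent of `Data16 = ~DevCtrl.Bits.CacheEnable` if CacheEnable is the lowest bit of the register.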

1.17. ConfigureCxl20Bridge()

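- Configures a CXL 2.0 bridge device. It checks whether the port supports the .cache protocol, enables it if so, and stores the result in the DevicePara parameter. It is essentially the same as the previous function.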

/**

ConfigureCxl20Bridge configures CXL bridge device. It checks if given CXL device

supports cache protocol, enables it. Also the device capability is updated to

DevicePara structure to communicate to parent.

@param[in] Seg Pci Segment or CPU Socket number to configure CXL20 hierarchy

@param[in] Bus Pci Bus number to be configured

@param[in] Dev Pci Device number to be configured

@param[in] Fun Pci Function number to be configured

@param[in] *DevicePara Device Parameter structure will be updated with CXL device

capabilities. It is used to communicate to its parent

@retval None

**/

VOID

ConfigureCxl20Bridge (

UINT8 Seg,

UINT8 Bus,

UINT8 Dev,

UINT8 Fun,

CXL_DEVICE_PARAMETER *DevicePara

)

{

UINT16 Data16;

EFI_STATUS Status;

CXL_2_0_DVSEC_FLEX_BUS_PORT_CAPABILITY PortCap;

CXL_2_0_DVSEC_FLEX_BUS_PORT_CONTROL PortCtrl;

CXL_DEBUG_LOG ("\t\tConfig Cxl cacheprotocol in Bridge seg %x Bus %x Dev %x Fun %x\n", \

Seg, Bus, Dev, Fun);

PortCtrl.Data16 = 0;

// Read the port DVSEC: CXL_FLEX_BUS_PORT_DVSEC:CXL_2_0_DVSEC_FLEX_BUS_PORT:PortCapability

Status = CxlDvsecRegRead (Seg, Bus, Dev, Fun, CXL_FLEX_BUS_PORT_DVSEC, \

OFFSET_OF(CXL_2_0_DVSEC_FLEX_BUS_PORT, PortCapability), \

&PortCap.Data16, UsraWidth16);

if (EFI_ERROR(Status)) {

CXL_DEBUG_LOG ("\t\t%r error while reading Port cap reg\n", Status);

return;

} else {

CXL_DEBUG_LOG ("\t\tPort Capabilty = %X\n", PortCap.Data16);

CXL_DEBUG_LOG ("\t\tCacheCapable = %X\n", PortCap.Bits.CacheCapable);

}

if (PortCap.Bits.CacheCapable == 1) {

// If the port is .cache capable, enable it

PortCtrl.Bits.CacheEnable = PortCap.Bits.CacheCapable;

Data16 = ~PortCtrl.Bits.CacheEnable;

Status = CxlDvsecRegAndThenOr (Seg, Bus, Dev, Fun, CXL_FLEX_BUS_PORT_DVSEC, \

OFFSET_OF(CXL_2_0_DVSEC_FLEX_BUS_PORT, PortControl), \

&Data16, &PortCtrl.Data16, UsraWidth16);

if (EFI_ERROR(Status)) {

CXL_DEBUG_LOG ("\t\t%r error while writing Port ctrl reg\n", Status);

return;

} else {

CXL_DEBUG_LOG ("\t\tPort cache protocol enabled\n");

}

// Update the parameter structure

DevicePara->CacheEnable = (UINT8)PortCap.Bits.CacheCapable;

}

}

1.18. ParseCxl20Hierarchy()

- This function recursively configures all CXL bridge devices and EP devices, enabling .cache on every device that supports it.

/**

ParseCxl20Hierarchy recursively configures all CXL bridges devices and end point devices.

It enables cache protocol in the whole CXL20 bus hierarchy if device is capable.

@param[in] Seg CPU Socket number to be configured

@param[in] StackId Stack number to be configured

@param[in] StartBus Parse starts at this bus number

@param[in] EndBus Parse ends at this bus number

@param[in] *DevicePara Device Parameter structure will be updated with CXL device

capabilities. It is used to communicate to its parent

@retval None

**/

VOID

ParseCxl20Hierarchy (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 Seg,

IN UINT8 StackId,

IN UINT8 StartBus,

IN UINT8 EndBus,

IN CXL_DEVICE_PARAMETER *DevicePara

)

{

UINT8 Dev;

UINT8 Fun;

UINT8 SecBus;

UINT8 SubBus;

UINT32 Data32;

EFI_STATUS Status;

USRA_ADDRESS PcieAddrPtr;

CXL_DEVICE_PARAMETER New;

CXL_DEBUG_LOG ("\tConfigure Cxl2 hierarchy for Soc %x Bus Range %x to %x start\n", \

Seg, StartBus, EndBus);

// Validate the input parameters

if (StartBus > EndBus || DevicePara == NULL) {

// Invalid input

return;

}

New.CacheEnable = 0;

// Iterate over the 32 devices

for (Dev = 0; Dev < 32; Dev++) {

// Iterate over the 8 functions

for (Fun = 0; Fun < 8; Fun++) {

CXL_DEBUG_LOG ("\t\tBus %x Dev %x Fun %x: ", StartBus, Dev, Fun);

if (!PciIsDevicePresentBdf (Seg, StartBus, Dev, Fun)) {

// The device does not exist

CXL_DEBUG_LOG ("Not Exist\n");

if (Fun == 0) {

break;

} else {

continue;

}

} else {

CXL_DEBUG_LOG ("Exist\n");

}

//

// Check for Bridge

// Check whether it is a bridge

if (IsPciBridgeUsingBdf (Seg, StartBus, Dev, Fun)) {

//

// Configure Bridge

// Configure the bridge

CXL_DEBUG_LOG ("\t\t Is a bridge\n");

// Build the PCIe physical address

GenerateBasePciePhyAddress (Seg, StartBus, Dev, Fun, UsraWidth32, &PcieAddrPtr);

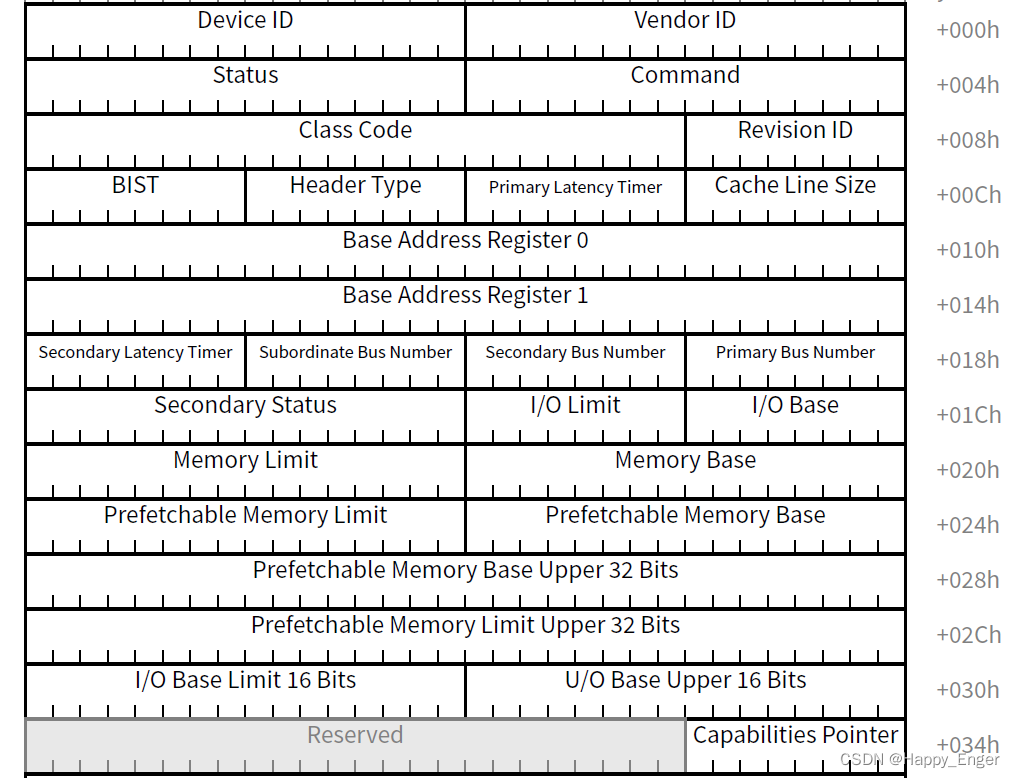

PcieAddrPtr.Pcie.Offset = PCI_BRIDGE_PRIMARY_BUS_REGISTER_OFFSET;

// Read the Primary Bus Number register (see the figure above)

Status = RegisterRead (&PcieAddrPtr, &Data32);

if (EFI_ERROR(Status)) {

continue;

}

// Extract the Secondary Bus Number and the Subordinate Bus Number

SecBus = (UINT8)(Data32 >> 8);

SubBus = (UINT8)(Data32 >> 16);

CXL_DEBUG_LOG ("\t\t Secondary bus is %x and subordinate bus is %x\n", SecBus, SubBus);

// Check that the bus range is valid

if ((SecBus <= StartBus) || (SecBus > EndBus) || \

(SubBus <= StartBus) || (SubBus > EndBus)) {

CXL_DEBUG_LOG ("\t\t Out of range bus detected. Do not process bus\n");

continue;

}

// Recurse into the child bus range: every bridge and EP below that supports .cache gets it enabled, and the final state is returned in New

ParseCxl20Hierarchy (KtiInternalGlobal, Seg, StackId, SecBus, SubBus, &New);

DevicePara->CacheEnable = New.CacheEnable;

CXL_DEBUG_LOG ("\t\t CacheEable = %x\n", New.CacheEnable);

//

// Configure Bridge Device

//

if (DevicePara->CacheEnable == 1) {

// If .cache was enabled below this bridge, configure the CXL 2.0 bridge; the result is written to DevicePara

ConfigureCxl20Bridge (Seg, StartBus, Dev, Fun, DevicePara);

}

} else {

CXL_DEBUG_LOG ("\t\t Is a End Point Device\n");

// For an EP device, configure the CXL 2.0 device; the result is written to DevicePara

ConfigureCxl20Device(Seg, StartBus, Dev, Fun, DevicePara);

}

// Mainly configures the security policy in the component registers

ConfigureCxlCompRegsBDF(KtiInternalGlobal, Seg, StartBus, Dev, Fun);

if ((Fun == 0) && (!PciIsMultiFunDevBdf (Seg, StartBus, Dev, Fun))) {

// Not a multi-function device: function 0 is the only one, so stop scanning functions

break;

}

}

}

CXL_DEBUG_LOG ("\tConfigure Cxl2 hierarchy for Soc %x Bus Range %x to %x End\n", \

Seg, StartBus, EndBus);

}
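
The bridge handling above hinges on decoding one 32-bit read and validating the child bus range before recursing. The following sketch shows just that step. `BRIDGE_BUS_NUMBERS`, `DecodeBridgeBusNumbers`, and `IsChildRangeValid` are hypothetical names; the byte layout (primary, secondary, subordinate in the three low bytes) follows the standard PCI-to-PCI bridge header and the shifts used in the listing.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
  uint8_t Primary;      /* bits  7:0  */
  uint8_t Secondary;    /* bits 15:8  */
  uint8_t Subordinate;  /* bits 23:16 */
} BRIDGE_BUS_NUMBERS;

/* Split the 32-bit value read at the Primary Bus Number offset. */
static void DecodeBridgeBusNumbers(uint32_t Data32, BRIDGE_BUS_NUMBERS *Out) {
  assert(Out != NULL);
  Out->Primary     = (uint8_t)Data32;
  Out->Secondary   = (uint8_t)(Data32 >> 8);
  Out->Subordinate = (uint8_t)(Data32 >> 16);
}

/* Mirrors the range test in the listing: both numbers must be greater than
   StartBus and no greater than EndBus, otherwise the bridge is skipped. */
static bool IsChildRangeValid(const BRIDGE_BUS_NUMBERS *B,
                              uint8_t StartBus, uint8_t EndBus) {
  assert(B != NULL);
  return (B->Secondary > StartBus) && (B->Secondary <= EndBus) &&
         (B->Subordinate > StartBus) && (B->Subordinate <= EndBus);
}
```

Because every recursive call requires the secondary bus to be strictly greater than the current bus, the bus number grows at each level, which bounds the recursion depth even if a bridge reports inconsistent values.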

1.19. ConfigureCxlStackUncore()

- This function configures the uncore functional parameters for the CXL hierarchy: clearing errors, the security level, link power management, the Arb-Mux parameters, and so on. It runs only once per stack.

/**

ConfigureCxlStackUncore configures Uncore functional parameters for CXL hierarchy

Clear errors, security level, link power management, Arb-Mux parameters, and WA if

any. This function is executed only once per stack.

@param[in] KtiInternalGlobal Pointer to the KTI RC internal global structure

@param[in] SocketId CPU socket number or Pci segment number

@param[in] StackId Stack Number to configure

@retval

**/

VOID

ConfigureCxlStackUncore (

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN UINT8 SocketId,

IN UINT8 StackId

)

{

UINT8 CxlSecLvl;

UINT8 CxlStcge, CxlSdcge, CxlDlcge, CxlLtcge;

KTI_HOST_IN *KtiSetup;

IP_CXLCM_INST *IpCxlcmInst;

IP_CSI_STATUS CsiStatus;

IP_CXLCM_LINK_ERROR_STATUS_TABLE LinkErrorTable;

KtiSetup = KTISETUP;

// Parameter check

if (SocketId >= MAX_SOCKET) {

return;

}

// Get the IPFW instance

IpCxlcmInst = CxlcmIpWrGetInst(SocketId, StackId);

if (IpCxlcmInst == NULL) {

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Can't find CXLCM IPFW instance.\n"));

return;

}

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Collect and clear Error Status for RAS.\n"));

//

// Read errors for CXLCM Port

// TODO: errors should be read from all ports - not only port0 - but for now SaveCxlErrorStatus supports only one port

// Read the port errors; they should be read from every port, but only port 0 is supported for now

CsiStatus = IpCxlcmCollectAndClearErrors (IpCxlcmInst, IpCxlcmPort0, &LinkErrorTable);

SaveCxlErrorStatus (SocketId, StackId, CXL_UNCORRECTABLE_ERROR, LinkErrorTable.UceStatusTable.Data); // Save

SaveCxlErrorStatus (SocketId, StackId, CXL_CORRECTABLE_ERROR, LinkErrorTable.CeStatusTable.Data); // Save

if (CsiStatus != IpCsiStsSuccess) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\n\nFailed to collect and clear Error Status for RAS.\n\n"));

}

// Configure the security policy

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Configure Security Policy.\n"));

CxlSecLvl = IpCxlcmFeatValTrusted;

if (KTISETUP->DfxParm.DfxCxlSecLvl < CXL_SECURITY_AUTO) {

CxlSecLvl = KTISETUP->DfxParm.DfxCxlSecLvl;

}

CsiStatus = IpCxlcmSetControl (IpCxlcmInst, IpCxlcmIp, IpCxlcmFeatIdSecurityLevel, CxlSecLvl);

if (CsiStatus != IpCsiStsSuccess) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "\n\nFailed to configure CXL security policy!!\n\n"));

}

//

// TODO: set for both ports?

// Platform specific; no documentation available

IpCxlcmSetControl (IpCxlcmInst, IpCxlcmPort0, IpCxlcmFeatIdLinkPowerManagement, 0x1FA0);

IpCxlcmSetControl (IpCxlcmInst, IpCxlcmPort2, IpCxlcmFeatIdLinkPowerManagement, 0x1FA0);

KtiDebugPrintInfo0 ((KTI_DEBUG_INFO0, "\nCXL: Configure the CXL.Arb-Mux Clock Gating Enable bits\n"));

CxlStcge = CxlSdcge = CxlDlcge = CxlLtcge = KTI_ENABLE;

if (KtiSetup->DfxParm.DfxCxlStcge < KTI_AUTO) {

CxlStcge = KtiSetup->DfxParm.DfxCxlStcge;

}

if (KtiSetup->DfxParm.DfxCxlSdcge < KTI_AUTO) {

CxlSdcge = KtiSetup->DfxParm.DfxCxlSdcge;

}

if (KtiSetup->DfxParm.DfxCxlDlcge < KTI_AUTO) {

CxlDlcge = KtiSetup->DfxParm.DfxCxlDlcge;

}

if (KtiSetup->DfxParm.DfxCxlLtcge < KTI_AUTO) {

CxlLtcge = KtiSetup->DfxParm.DfxCxlLtcge;

}

// Configure the Arb-Mux; no documentation available

ConfigureCxlArbMuxCge (SocketId, StackId, CxlStcge, CxlSdcge, CxlDlcge, CxlLtcge);

//

// Enable CXL Cache on this stack

// Enable .cache on this stack. No actual operation was found in the code

IpCxlcmIpConfigComplete (IpCxlcmInst);

IpCxlcmEnableCxlcm (IpCxlcmInst);

}
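
The security level and the four clock-gating knobs all follow the same pattern: start from a default, and let the setup value override it only when that value is below the "auto" marker. A minimal sketch of the pattern follows. The `SKETCH_*` constants, `CGE_KNOBS`, `ResolveKnob`, and `ResolveClockGating` are hypothetical; in particular the numeric value of the auto marker is an assumption, since the real `KTI_AUTO` definition is not shown in this article.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SKETCH_DISABLE 0u
#define SKETCH_ENABLE  1u
#define SKETCH_AUTO    2u  /* hypothetical: values >= AUTO mean "use the default" */

typedef struct {
  uint8_t Stcge, Sdcge, Dlcge, Ltcge;
} CGE_KNOBS;

/* An explicit setup value wins; otherwise fall back to the default. */
static uint8_t ResolveKnob(uint8_t SetupValue, uint8_t DefaultValue) {
  return (uint8_t)((SetupValue < SKETCH_AUTO) ? SetupValue : DefaultValue);
}

/* Apply the same rule to all four Arb-Mux clock-gating knobs, defaulting to enabled. */
static void ResolveClockGating(const CGE_KNOBS *Setup, CGE_KNOBS *Out) {
  assert(Setup != NULL && Out != NULL);
  Out->Stcge = ResolveKnob(Setup->Stcge, SKETCH_ENABLE);
  Out->Sdcge = ResolveKnob(Setup->Sdcge, SKETCH_ENABLE);
  Out->Dlcge = ResolveKnob(Setup->Dlcge, SKETCH_ENABLE);
  Out->Ltcge = ResolveKnob(Setup->Ltcge, SKETCH_ENABLE);
}
```

Keeping the default and the override rule in one helper makes it obvious that leaving a knob on auto yields the enabled state, which is what the chained assignment `CxlStcge = CxlSdcge = CxlDlcge = CxlLtcge = KTI_ENABLE` expresses in the listing.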

1.20. InitializeComputeExpressLink20()

- Configures the uncore parameters for the CXL 2.0 hierarchy.

/**

InitializeComputeExpressLink20 configures uncore parameters to cxl 20 hierarchy.

@param[in] SocketData - Pointer to socket specific data

@param[in] KtiInternalGlobal - Pointer to the KTI RC internal global structure

@retval None

**/

VOID

EFIAPI

InitializeComputeExpressLink20 (

IN KTI_SOCKET_DATA *SocketData,

IN KTI_HOST_INTERNAL_GLOBAL *KtiInternalGlobal,

IN STACK_RES_DISTRIBUTION_HOB *StackResDistHob

)

{

UINT8 SocketId;

UINT8 StackId;

UINT8 Index;

BOOLEAN ConfigStack;

UINT8 NumberOfEntries;

STACK_RES_DISTRIBUTION *ResEntry;

UINT8 Device;

UINT8 Fun;

ConfigStack = TRUE;

StackId = 0xFF;

SocketId = 0xFF;

CXL_DEBUG_LOG ("\tCxl bus hierarchy config start\n");

NumberOfEntries = StackResDistHob->NumberOfEntries;

CXL_DEBUG_LOG ("\t%d bus hierarchies found in system config\n", NumberOfEntries);

for (Index = 0; Index < NumberOfEntries; Index++) {

ResEntry = &StackResDistHob->StackResDistList[Index];

if (StackId != ResEntry->StackId) { // In loop StackId shall have previous loop stack

ConfigStack = TRUE;

}

StackId = ResEntry->StackId;

SocketId = ResEntry->SocketId;

CXL_DEBUG_LOG ("\tSoc %x Stk %x res pool is -\n", SocketId, StackId);

CXL_DEBUG_LOG ("\tBus Range: %x to %x", ResEntry->BusBase, ResEntry->BusLimit);

CXL_DEBUG_LOG (" MmioL Range: %x to %x", ResEntry->MmiolBase, ResEntry->MmiolLimit);

CXL_DEBUG_LOG (" MmioH Range: %lx to %lx", ResEntry->MmiohBase, ResEntry->MmiohLimit);

CXL_DEBUG_LOG (" RcrbBase %x. \n", ResEntry->RcrbBase);

if(SocketId >= MAX_SOCKET) {

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "Seg %x cannot be greater than MAX_SOCKET %x", \

SocketId, MAX_SOCKET));

continue;

}

if ((SocketData->Cpu[SocketId].Valid == FALSE) || // Check for Cpu population

(SocketData->Cpu[SocketId].SocType != SOCKET_TYPE_CPU) || // Check for Non-Cpu Type node

(StackPresent(SocketId, StackId) == FALSE)) { // Check for Stack present

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "Cpu Valid = %s", \

(SocketData->Cpu[SocketId].Valid == TRUE)?L"TRUE":L"FALSE"));

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "Cpu Type = %s", \

(SocketData->Cpu[SocketId].SocType == SOCKET_TYPE_CPU)?L"CPU":L"Non-CPU"));

KtiDebugPrintFatal ((KTI_DEBUG_ERROR, "Stack Enabled = %s", \

(StackPresent(SocketId, StackId) == TRUE)?L"TRUE":L"FALSE"));

continue;

}

for (Device = 0; Device <= PCI_MAX_DEVICE; Device++) {

for (Fun = 0; Fun <= PCI_MAX_FUNC; Fun++) {

// Iterate over all devices and functions

if (PciIsDevicePresentBdf(SocketId, ResEntry->BusBase, Device, Fun)) {

// The device exists

if (GetCxlPortType (SocketId, ResEntry->BusBase, Device, Fun) == CXL20_PORT) {

// It is a CXL 2.0 port: mark the stack as CXL 2.0 capable

SetStackCxl20Capable(SocketId, StackId);

break;

}

}

}

}

if (GetCxlStatus (SocketId, StackId) != AlreadyInCxlMode) {

CXL_DEBUG_LOG ("\tNon-Cxl Mode\n");

continue;

}

if (ResEntry->RcrbBase != 00) {

// Configure the CXL 1.1 EP device and bridge devices for proper functionality

ConfigureCxl11Hierarchy (KtiInternalGlobal, ResEntry, SocketId, StackId);

} else {

// Recursively configure all CXL 2.0 bridge devices and EP devices

ConfigureCxl20Hierarchy (KtiInternalGlobal, ResEntry, SocketId, StackId);

}

if (ConfigStack) {

ConfigureCxlStackUncore (KtiInternalGlobal, SocketId, StackId);

ConfigStack = FALSE;

}

}

CXL_DEBUG_LOG ("\tCxl bus hierarchy config end\n");

}
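
The `ConfigStack` flag is what makes the uncore step run once per stack even when several resource entries belong to the same stack. The sketch below reproduces only that bookkeeping so it can be reasoned about in isolation. `RES_ENTRY` and `CountStackConfigs` are hypothetical, and the validity checks and `continue` paths of the real loop are deliberately left out.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical entry, reduced to the identifiers the loop looks at. */
typedef struct {
  uint8_t SocketId;
  uint8_t StackId;
} RES_ENTRY;

/* Counts how many times the per-stack step would run for a list of entries. */
static unsigned CountStackConfigs(const RES_ENTRY *Entries, size_t Count) {
  uint8_t  PrevStack   = 0xFF;  /* same sentinel as the listing */
  int      ConfigStack = 1;
  unsigned Runs        = 0;
  size_t   Index;

  assert(Entries != NULL || Count == 0);
  for (Index = 0; Index < Count; Index++) {
    if (PrevStack != Entries[Index].StackId) {
      ConfigStack = 1;          /* a different stack re-arms the flag */
    }
    PrevStack = Entries[Index].StackId;
    if (ConfigStack) {
      Runs++;                   /* stands in for ConfigureCxlStackUncore() */
      ConfigStack = 0;
    }
  }
  return Runs;
}
```

For entries on stacks 1, 1, 2, 2, 3 the step runs three times. One consequence visible in this reduced form is that only `StackId` is compared: two consecutive entries with the same stack number on different sockets would trigger the step once. Whether that situation can occur depends on how the resource list is ordered, which this article does not show.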