Soft Actor-Critic(SAC算法)

强化学习—— Soft Actor-Critic(SAC算法

- 1. 基本概念

- 1.1 soft Q-value

- 1.2 soft state value function

- 1.3 Soft Policy Evaluation

- 1.4 policy improvement

- 1.5 soft policy improvemrnt

- 1.5 soft policy iteration

- 2. soft actor critic

- 2.1 soft value function

- 2.2 soft Q-function

- 2.3 policy improvement

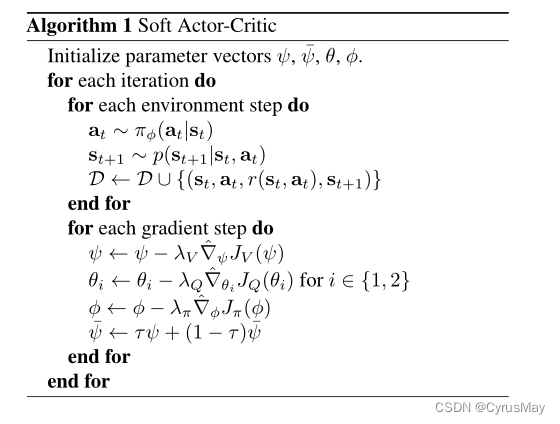

- 3. 算法流程

1. 基本概念

1.1 soft Q-value

τ π Q ( s t , a t ) = r ( s t , a t ) + γ ⋅ E s t + 1 ∼ p [ V ( s t + 1 ) ] \tau ^\pi Q(s_t,a_t)=r(s_t,a_t) + \gamma \cdot E_{s_{t +1}\sim p}[V(s_{t+1})] τπQ(st,at)=r(st,at)+γ⋅Est+1∼p[V(st+1)]

1.2 soft state value function

V ( s t ) = E a t ∼ π [ Q ( s t , a t ) − α ⋅ l o g π ( a t ∣ s t ) ] V(s_t)=E_{a_t \sim \pi}[Q(s_t,a_t)-\alpha \cdot log\pi(a_t|s_t)] V(st)=Eat∼π[Q(st,at)−α⋅logπ(at∣st)]

1.3 Soft Policy Evaluation

Q

k

+

1

=

τ

π

Q

k

Q^{k+1}=\tau^\pi Q^k

Qk+1=τπQk

当k趋于无穷时,

Q

k

Q^k

Qk将收敛至

π

\pi

π的soft Q-value。

证明:

r

π

(

s

t

,

a

t

)

=

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

∼

p

[

H

(

π

(

⋅

∣

s

t

+

1

)

)

]

r_\pi(s_t,a_t)=r(s_t,a_t)+\gamma \cdot E_{s_{t+1}\sim p}[H(\pi(\cdot | s_{t+1}))]

rπ(st,at)=r(st,at)+γ⋅Est+1∼p[H(π(⋅∣st+1))]

Q

(

s

t

,

a

t

)

=

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

∼

p

[

H

(

π

(

⋅

∣

s

t

+

1

)

)

+

E

s

t

+

1

,

a

t

+

1

∼

ρ

π

[

Q

(

s

t

+

1

,

a

t

+

1

)

]

Q(s_t,a_t) = r(s_t,a_t)+\gamma \cdot E_{s_{t+1}\sim p}[H(\pi(\cdot | s_{t+1})) + E_{s_{t+1},a_{t+1}\sim \rho_\pi}[Q(s_{t+1},a_{t+1})]

Q(st,at)=r(st,at)+γ⋅Est+1∼p[H(π(⋅∣st+1))+Est+1,at+1∼ρπ[Q(st+1,at+1)]

Q

(

s

t

,

a

t

)

=

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

,

a

t

+

1

∼

ρ

π

[

−

l

o

g

(

π

(

a

t

+

1

∣

s

t

+

1

)

)

+

E

s

t

+

1

,

a

t

+

1

∼

ρ

π

[

Q

(

s

t

+

1

,

a

t

+

1

)

]

Q(s_t,a_t) = r(s_t,a_t)+\gamma \cdot E_{s_{t+1},a_{t+1}\sim \rho_\pi}[-log(\pi(a_{t+1} | s_{t+1})) + E_{s_{t+1},a_{t+1}\sim \rho_\pi}[Q(s_{t+1},a_{t+1})]

Q(st,at)=r(st,at)+γ⋅Est+1,at+1∼ρπ[−log(π(at+1∣st+1))+Est+1,at+1∼ρπ[Q(st+1,at+1)]

Q

(

s

t

,

a

t

)

=

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

,

a

t

+

1

∼

ρ

π

[

Q

(

s

t

+

1

,

a

t

+

1

)

−

l

o

g

(

π

(

a

t

+

1

∣

s

t

+

1

)

)

Q(s_t,a_t) = r(s_t,a_t)+\gamma \cdot E_{s_{t+1},a_{t+1}\sim \rho_\pi}[Q(s_{t+1},a_{t+1})-log(\pi(a_{t+1} | s_{t+1}))

Q(st,at)=r(st,at)+γ⋅Est+1,at+1∼ρπ[Q(st+1,at+1)−log(π(at+1∣st+1))

当|A|<∞时,可以保证熵有界,因而能保证收敛。

1.4 policy improvement

π n e w = a r g m i n π ′ ∈ Π D K L ( π ′ ( ⋅ ∣ s t ) ∣ ∣ e x p ( Q π o l d ( s t , ⋅ ) ) Z π o l d ( s t ) ) \pi_{new}=argmin_{\pi^{'}\in \Pi}D_{KL}(\pi^{'}(\cdot|s_t)||\frac{exp(Q^{\pi_{old}}(s_t,\cdot))}{Z^{\pi_{old}}(s_t)}) πnew=argminπ′∈ΠDKL(π′(⋅∣st)∣∣Zπold(st)exp(Qπold(st,⋅)))

1.5 soft policy improvemrnt

Q

π

n

e

w

(

s

t

,

a

t

)

≥

Q

π

o

l

d

(

s

t

,

a

t

)

Q^{\pi_{new}}(s_t,a_t)≥Q^{\pi_{old}}(s_t,a_t)

Qπnew(st,at)≥Qπold(st,at)

s.t.为:

π

o

l

d

∈

Π

,

(

s

t

,

a

t

)

∈

S

×

A

,

∣

A

∣

<

∞

\pi_{old}\in \Pi,(s_t,a_t)\in S × A, |A| < ∞

πold∈Π,(st,at)∈S×A,∣A∣<∞

证明如下:

π

n

e

w

=

a

r

g

m

i

n

π

′

∈

Π

D

K

L

(

π

′

(

⋅

∣

s

t

)

∣

∣

e

x

p

(

Q

π

o

l

d

(

s

t

,

⋅

)

−

l

o

g

(

Z

(

s

t

)

)

)

)

=

a

r

g

m

i

n

π

′

∈

Π

J

π

o

l

d

(

π

′

(

⋅

∣

s

t

)

)

\pi_{new}=argmin_{\pi^{'}\in \Pi}D_{KL}(\pi^{'}(\cdot|s_t)||exp(Q^{\pi_{old}}(s_t,\cdot)-log(Z(s_t))))\\ =argmin_{\pi^{'}\in \Pi}J_{\pi_{old}}(\pi^{'}(\cdot|s_t))

πnew=argminπ′∈ΠDKL(π′(⋅∣st)∣∣exp(Qπold(st,⋅)−log(Z(st))))=argminπ′∈ΠJπold(π′(⋅∣st))

J

π

o

l

d

(

π

′

(

⋅

∣

s

t

)

)

=

E

a

t

∼

π

′

[

l

o

g

(

π

′

(

s

t

,

a

t

)

)

−

Q

π

o

l

d

(

s

t

,

a

t

)

+

l

o

g

(

Z

(

s

t

)

)

]

J_{\pi_{old}}(\pi^{'}(\cdot|s_t)) = E_{a_t \sim \pi^{'}}[log(\pi^{'}(s_t,a_t))-Q^{\pi_{old}}(s_t,a_t)+log(Z(s_t))]

Jπold(π′(⋅∣st))=Eat∼π′[log(π′(st,at))−Qπold(st,at)+log(Z(st))]

由于一直可以取

π

n

e

w

=

π

o

l

d

\pi_{new}=\pi_{old}

πnew=πold,所有总能满足:

E

a

t

∼

π

n

e

w

[

l

o

g

(

π

n

e

w

(

a

t

∣

s

t

)

)

−

Q

π

o

l

d

(

s

t

,

a

t

)

]

≤

E

a

t

∈

π

o

l

d

[

l

o

g

(

π

o

l

d

(

a

t

∣

s

t

)

)

−

Q

π

o

l

d

(

s

t

,

a

t

)

]

E_{a_t\sim \pi_{new}}[log(\pi_{new}(a_t|s_t))-Q^{\pi_{old}}(s_t,a_t)]≤E_{a_t \in \pi_{old}}[log(\pi_{old}(a_t|s_t))-Q^{\pi_{old}}(s_t,a_t)]

Eat∼πnew[log(πnew(at∣st))−Qπold(st,at)]≤Eat∈πold[log(πold(at∣st))−Qπold(st,at)]

E

a

t

∼

π

n

e

w

[

l

o

g

(

π

n

e

w

(

a

t

∣

s

t

)

)

−

Q

π

o

l

d

(

s

t

,

a

t

)

]

≤

−

V

π

o

l

d

(

s

t

)

E

a

t

∼

π

n

e

w

[

Q

π

o

l

d

(

s

t

,

a

t

)

−

l

o

g

(

π

n

e

w

(

a

t

∣

s

t

)

)

]

≥

V

π

o

l

d

(

s

t

)

E_{a_t\sim \pi_{new}}[log(\pi_{new}(a_t|s_t))-Q^{\pi_{old}}(s_t,a_t)]≤ - V^{\pi_{old}}(s_t)\\E_{a_t\sim \pi_{new}}[Q^{\pi_{old}}(s_t,a_t)-log(\pi_{new}(a_t|s_t))]≥V^{\pi_{old}}(s_t)

Eat∼πnew[log(πnew(at∣st))−Qπold(st,at)]≤−Vπold(st)Eat∼πnew[Qπold(st,at)−log(πnew(at∣st))]≥Vπold(st)

Q

π

o

l

d

(

s

t

,

a

t

)

=

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

∼

p

[

V

π

o

l

d

(

s

t

+

1

)

]

≤

r

(

s

t

,

a

t

)

+

γ

⋅

E

s

t

+

1

∼

p

E

a

t

+

1

∼

π

n

e

w

[

Q

π

o

l

d

(

s

t

,

a

t

)

−

l

o

g

(

π

n

e

w

(

a

t

∣

s

t

)

]

≤

.

.

.

.

.

.

.

.

.

.

≤

Q

π

n

e

w

(

s

t

,

a

t

)

Q^{\pi_{old}}(s_t,a_t)=r(s_t,a_t)+\gamma \cdot E_{s_{t+1}\sim p }[V^{\pi_{old}}(s_{t+1})]\\ ≤r(s_t,a_t)+\gamma \cdot E_{s_{t+1}\sim p E_{a_{t+1}\sim \pi_{new}}}[Q^{\pi_{old}}(s_t,a_t)-log(\pi_{new}(a_t|s_t)]\\ ≤..........\\ ≤Q^{\pi_{new}}(s_t,a_t)

Qπold(st,at)=r(st,at)+γ⋅Est+1∼p[Vπold(st+1)]≤r(st,at)+γ⋅Est+1∼pEat+1∼πnew[Qπold(st,at)−log(πnew(at∣st)]≤..........≤Qπnew(st,at)

1.5 soft policy iteration

假设:

∣

A

∣

<

∞

;

π

∈

Π

|A|<∞;\pi\in\Pi

∣A∣<∞;π∈Π

经过不断地soft policy evaluation和policy improvement,最终policy会收敛至

π

⋆

\pi^{\star}

π⋆,其满足

Q

π

⋆

(

s

t

,

a

t

)

≥

Q

π

(

s

t

,

a

t

)

;其中

π

∈

Π

Q^{\pi^\star}(s_t,a_t)≥Q^{\pi}(s_t,a_t);其中\pi\in\Pi

Qπ⋆(st,at)≥Qπ(st,at);其中π∈Π

2. soft actor critic

2.1 soft value function

- loss function

J V ( ψ ) = E s t ∼ D [ 1 2 ( V ψ ( s t ) − E a t ∼ π ϕ [ Q θ ( s t , a t ) − l o g ( π ϕ ( a t ∣ s t ) ) ) ] 2 ] J_V(\psi) = E_{s_t\sim D}[\frac{1}{2}(V_\psi(s_t)-E_{a_t\sim \pi_\phi}[Q_{\theta}(s_t,a_t)-log(\pi_\phi(a_t|s_t)))]^2] JV(ψ)=Est∼D[21(Vψ(st)−Eat∼πϕ[Qθ(st,at)−log(πϕ(at∣st)))]2] - gradient

∇ ^ ψ J V ( ψ ) = ∇ ψ V ψ ( s t ) ⋅ ( V ψ ( s t ) − Q θ ( s t , a t ) + l o g ( π ϕ ( a t ∣ s t ) ) ) \hat\nabla_\psi J_V(\psi)=\nabla_\psi V_\psi(s_t)\cdot(V_\psi(s_t)-Q_\theta(s_t,a_t)+log(\pi_\phi(a_t|s_t))) ∇^ψJV(ψ)=∇ψVψ(st)⋅(Vψ(st)−Qθ(st,at)+log(πϕ(at∣st)))

2.2 soft Q-function

- loss function

J Q ( θ ) = E ( s t , a t ) ∼ D [ 1 2 ( Q θ ( s t , a t ) − Q ^ ( s t , a t ) ) 2 ] J_Q(\theta)=E_{(s_t,a_t)\sim D}[\frac{1}{2}(Q_\theta(s_t,a_t)-\hat Q(s_t,a_t))^2] JQ(θ)=E(st,at)∼D[21(Qθ(st,at)−Q^(st,at))2]

Q ^ ( s t , a t ) = r ( s t , a t ) + γ ⋅ E s t + 1 ∼ p [ V ψ ˉ ( s t + 1 ) ] \hat Q(s_t,a_t)=r(s_t,a_t)+\gamma\cdot E_{s_{t+1}\sim p}[V_{\bar{\psi}} (s_{t+1})] Q^(st,at)=r(st,at)+γ⋅Est+1∼p[Vψˉ(st+1)] - gradient

∇ ^ θ J Q ( θ ) = ∇ θ Q θ ( s t , a t ) ⋅ [ Q θ ( s t , a t ) − r ( s t , a t ) − γ ⋅ V ψ ˉ ( s t + 1 ) ] \hat\nabla_\theta J_Q(\theta)=\nabla_\theta Q_\theta(s_t,a_t)\cdot[Q_\theta(s_t,a_t)-r(s_t,a_t)-\gamma \cdot V_{\bar\psi}(s_{t+1})] ∇^θJQ(θ)=∇θQθ(st,at)⋅[Qθ(st,at)−r(st,at)−γ⋅Vψˉ(st+1)]

2.3 policy improvement

- loss function

J π ( ϕ ) = E s t ∼ D [ D K L ( π ϕ ( ⋅ ∣ s t ) ∣ ∣ e x p ( Q θ ( s t , ⋅ ) ) Z θ ( s t ) ) ] J_\pi(\phi)=E_{s_t\sim D}[D_{KL}(\pi_\phi(\cdot|s_t)||\frac{exp(Q_\theta(s_t,\cdot))}{Z_\theta(s_t)})] Jπ(ϕ)=Est∼D[DKL(πϕ(⋅∣st)∣∣Zθ(st)exp(Qθ(st,⋅)))]

reparameterize the policy

a t = f ϕ ( ϵ t ; s t ) = f ϕ μ ( s t ) + ϵ t ⋅ f ϕ σ ( s t ) a_t=f_\phi(\epsilon_t;s_t)=f_\phi^\mu(s_t)+\epsilon_t\cdot f_\phi^\sigma(s_t) at=fϕ(ϵt;st)=fϕμ(st)+ϵt⋅fϕσ(st)

J π ( ϕ ) = E s t ∼ D ; ϵ t ∈ N [ l o g ( π ϕ ( f ϕ ( ϵ t ; s t ) ∣ s t ) ) − Q θ ( s t , f ϕ ( ϵ t ; s t ) ) ] J_\pi(\phi)=E_{s_t\sim D;\epsilon_t\in N}[log(\pi_\phi(f_\phi(\epsilon_t;s_t)|s_t))-Q_\theta(s_t,f_\phi(\epsilon_t;s_t))] Jπ(ϕ)=Est∼D;ϵt∈N[log(πϕ(fϕ(ϵt;st)∣st))−Qθ(st,fϕ(ϵt;st))] - gradient

∇ θ E q θ ( Z ) [ f θ ( Z ) ] = E q θ ( Z ) [ ∂ f θ ( Z ) ∂ θ ] + E q θ ( Z ) [ d f θ ( Z ) d Z ⋅ d Z d θ ] \nabla_\theta E_{q_\theta(Z)}[f_\theta(Z)]=E_{q_\theta(Z)}[\frac{\partial f_\theta(Z)}{\partial \theta}] + E_{q_\theta(Z)}[\frac{df_\theta(Z)}{dZ}\cdot\frac{dZ}{d\theta}] ∇θEqθ(Z)[fθ(Z)]=Eqθ(Z)[∂θ∂fθ(Z)]+Eqθ(Z)[dZdfθ(Z)⋅dθdZ]

∇ ^ ϕ J π ( ϕ ) = ∇ ϕ l o g ( π ϕ ( a t ; s t ) ∣ s t ) ) + ∇ ϕ f ϕ ( ϵ t ; s t ) ⋅ ( ∇ a t l o g ( π ( a t ∣ s t ) ) − ∇ a t Q θ ( s t , a t ) ) \hat \nabla_\phi J_\pi(\phi)=\nabla_\phi log(\pi_\phi(a_t;s_t)|s_t))+\nabla_{\phi}f_\phi(\epsilon_t;s_t)\cdot(\nabla_{a_t}log(\pi(a_t|s_t))-\nabla_{a_t} Q_\theta(s_t,a_t)) ∇^ϕJπ(ϕ)=∇ϕlog(πϕ(at;st)∣st))+∇ϕfϕ(ϵt;st)⋅(∇atlog(π(at∣st))−∇atQθ(st,at))

3. 算法流程

By CyrusMay 2022.09.06

世界 再大 不过 你和我

用最小回忆 堆成宇宙

————五月天(因为你 所以我)————