DolphinScheduler安装与配置

DolphinScheduler概述

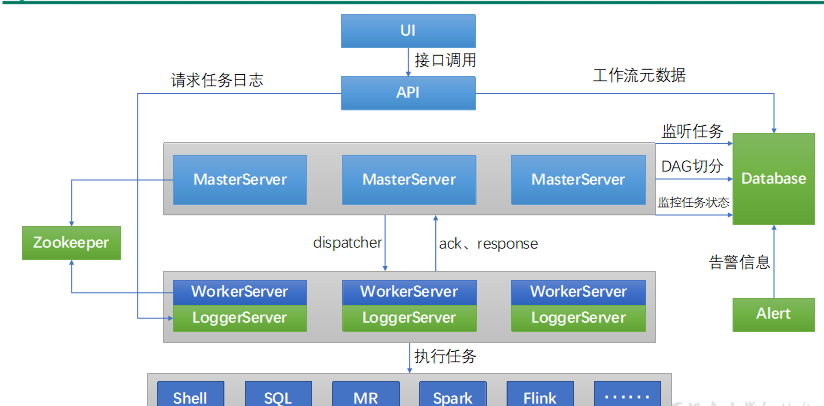

Apache DolphinScheduler是一个分布式、易扩展的可视化DAG工作流任务调度平台。致力于解决数据处理流程中错综复杂的依赖关系,使调度系统在数据处理流程中开箱即用。

DolphinScheduler的主要角色如下:

MasterServer采用分布式无中心设计理念,MasterServer主要负责 DAG 任务切分、任务提交、任务监控,并同时监听其它MasterServer和WorkerServer的健康状态。

WorkerServer也采用分布式无中心设计理念,WorkerServer主要负责任务的执行和提供日志服务。

ZooKeeper服务,系统中的MasterServer和WorkerServer节点都通过ZooKeeper来进行集群管理和容错。

Alert服务,提供告警相关服务。

API接口层,主要负责处理前端UI层的请求。

UI,系统的前端页面,提供系统的各种可视化操作界面。

安装调度器

一、上传DolphinScheduler安装包到hadoop102节点的/opt/software目录

二、解压安装包到当前目录

注:解压目录并非最终的安装目录

tar -zxvf apache-dolphinscheduler-2.0.5-bin

创建元数据库及用户

DolphinScheduler 元数据存储在关系型数据库中,故需创建相应的数据库和用户。

(1)创建数据库

CREATE DATABASE dolphinscheduler DEFAULT CHARACTER SET utf8 DEFAULT COLLATE utf8_general_ci;

2)创建用户

CREATE USER 'dolphinscheduler'@'%' IDENTIFIED BY 'dolphinscheduler';

注:

若出现以下错误信息,表明新建用户的密码过于简单。

ERROR 1819 (HY000): Your password does not satisfy the current policy requirements

可提高密码复杂度或者执行以下命令降低MySQL密码强度级别。

mysql> set global validate_password_policy=0;

mysql> set global validate_password_length=4;

(3)赋予用户相应权限

mysql> GRANT ALL PRIVILEGES ON dolphinscheduler.* TO 'dolphinscheduler'@'%';

mysql> flush privileges;

配置一键部署脚本

修改解压目录下的conf/config目录下的install_config.conf文件。

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ vim conf/config/install_config.conf

修改内容如下

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

#

# ---------------------------------------------------------

# INSTALL MACHINE

# ---------------------------------------------------------

# A comma separated list of machine hostname or IP would be installed DolphinScheduler,

# including master, worker, api, alert. If you want to deploy in pseudo-distributed

# mode, just write a pseudo-distributed hostname

# Example for hostnames: ips="ds1,ds2,ds3,ds4,ds5", Example for IPs: ips="192.168.8.1,192.168.8.2,192.168.8.3,192.168.8.4,192.168.8.5"

ips="hadoop102,hadoop103,hadoop104"

# 将要部署任一 DolphinScheduler 服务的服务器主机名或 ip 列表

# Port of SSH protocol, default value is 22. For now we only support same port in all `ips` machine

# modify it if you use different ssh port

sshPort="22"

# A comma separated list of machine hostname or IP would be installed Master server, it

# must be a subset of configuration `ips`.

# Example for hostnames: masters="ds1,ds2", Example for IPs: masters="192.168.8.1,192.168.8.2"

masters="hadoop102"

# master 所在主机名列表,必须是 ips 的子集

# A comma separated list of machine <hostname>:<workerGroup> or <IP>:<workerGroup>.All hostname or IP must be a

# subset of configuration `ips`, And workerGroup have default value as `default`, but we recommend you declare behind the hosts

# Example for hostnames: workers="ds1:default,ds2:default,ds3:default", Example for IPs: workers="192.168.8.1:default,192.168.8.2:default,192.168.8.3:default"

workers="hadoop102:default,hadoop103:default,hadoop104:default"

# worker主机名及队列,此处的 ip 必须在 ips 列表中

# A comma separated list of machine hostname or IP would be installed Alert server, it

# must be a subset of configuration `ips`.

# Example for hostname: alertServer="ds3", Example for IP: alertServer="192.168.8.3"

alertServer="hadoop103"

# 告警服务所在服务器主机名

# A comma separated list of machine hostname or IP would be installed API server, it

# must be a subset of configuration `ips`.

# Example for hostname: apiServers="ds1", Example for IP: apiServers="192.168.8.1"

apiServers="hadoop104"

# api服务所在服务器主机名

# A comma separated list of machine hostname or IP would be installed Python gateway server, it

# must be a subset of configuration `ips`.

# Example for hostname: pythonGatewayServers="ds1", Example for IP: pythonGatewayServers="192.168.8.1"

# pythonGatewayServers="ds1"

# 不需要的配置项,可以保留默认值,也可以用 # 注释

# The directory to install DolphinScheduler for all machine we config above. It will automatically be created by `install.sh` script if not exists.

# Do not set this configuration same as the current path (pwd)

installPath="/opt/module/dolphinscheduler"

# DS 安装路径,如果不存在会创建

# The user to deploy DolphinScheduler for all machine we config above. For now user must create by yourself before running `install.sh`

# script. The user needs to have sudo privileges and permissions to operate hdfs. If hdfs is enabled than the root directory needs

# to be created by this user

deployUser="atguigu"

# 部署用户,任务执行服务是以 sudo -u {linux-user} 切换不同 Linux 用户的方式来实现多租户运行作业,因此该用户必须有免密的 sudo 权限。

# The directory to store local data for all machine we config above. Make sure user `deployUser` have permissions to read and write this directory.

dataBasedirPath="/tmp/dolphinscheduler"

# 前文配置的所有节点的本地数据存储路径,需要确保部署用户拥有该目录的读写权限

# ---------------------------------------------------------

# DolphinScheduler ENV

# ---------------------------------------------------------

# JAVA_HOME, we recommend use same JAVA_HOME in all machine you going to install DolphinScheduler

# and this configuration only support one parameter so far.

javaHome="/opt/module/jdk1.8.0_212"

# JAVA_HOME 路径

# DolphinScheduler API service port, also this is your DolphinScheduler UI component's URL port, default value is 12345

apiServerPort="12345"

# ---------------------------------------------------------

# Database

# NOTICE: If database value has special characters, such as `.*[]^${}\+?|()@#&`, Please add prefix `\` for escaping.

# ---------------------------------------------------------

# The type for the metadata database

# Supported values: ``postgresql``, ``mysql`, `h2``.

# 注意:数据库相关配置的 value 必须加引号,否则配置无法生效

DATABASE_TYPE="mysql"

# 数据库类型

# Spring datasource url, following <HOST>:<PORT>/<database>?<parameter> format, If you using mysql, you could use jdbc

# string jdbc:mysql://127.0.0.1:3306/dolphinscheduler?useUnicode=true&characterEncoding=UTF-8 as example

# SPRING_DATASOURCE_URL=${SPRING_DATASOURCE_URL:-"jdbc:h2:mem:dolphinscheduler;MODE=MySQL;DB_CLOSE_DELAY=-1;DATABASE_TO_LOWER=true"}

SPRING_DATASOURCE_URL="jdbc:mysql://hadoop102:3306/dolphinscheduler?useUnicode=true&characterEncoding=UTF-8"

# 数据库 URL

# Spring datasource username

# SPRING_DATASOURCE_USERNAME=${SPRING_DATASOURCE_USERNAME:-"sa"}

SPRING_DATASOURCE_USERNAME="dolphinscheduler"

# 数据库用户名

# Spring datasource password

# SPRING_DATASOURCE_PASSWORD=${SPRING_DATASOURCE_PASSWORD:-""}

SPRING_DATASOURCE_PASSWORD="dolphinscheduler"

# 数据库密码

# ---------------------------------------------------------

# Registry Server

# ---------------------------------------------------------

# Registry Server plugin name, should be a substring of `registryPluginDir`, DolphinScheduler use this for verifying configuration consistency

registryPluginName="zookeeper"

# 注册中心插件名称,DS 通过注册中心来确保集群配置的一致性

# Registry Server address.

registryServers="hadoop102:2181,hadoop103:2181,hadoop104:2181"

# 注册中心地址,即 Zookeeper 集群的地址

# Registry Namespace

registryNamespace="dolphinscheduler"

# DS 在 Zookeeper 的结点名称

# ---------------------------------------------------------

# Worker Task Server

# ---------------------------------------------------------

# Worker Task Server plugin dir. DolphinScheduler will find and load the worker task plugin jar package from this dir.

taskPluginDir="lib/plugin/task"

# resource storage type: HDFS, S3, NONE

resourceStorageType="HDFS"

# 资源存储类型

# resource store on HDFS/S3 path, resource file will store to this hdfs path, self configuration, please make sure the directory exists on hdfs and has read write permissions. "/dolphinscheduler" is recommended

resourceUploadPath="/dolphinscheduler"

# 资源上传路径

# if resourceStorageType is HDFS,defaultFS write namenode address,HA, you need to put core-site.xml and hdfs-site.xml in the conf directory.

# if S3,write S3 address,HA,for example :s3a://dolphinscheduler,

# Note,S3 be sure to create the root directory /dolphinscheduler

defaultFS="hdfs://mycluster"

# 默认文件系统

# if resourceStorageType is S3, the following three configuration is required, otherwise please ignore

s3Endpoint="http://192.168.xx.xx:9010"

s3AccessKey="xxxxxxxxxx"

s3SecretKey="xxxxxxxxxx"

# resourcemanager port, the default value is 8088 if not specified

resourceManagerHttpAddressPort="8088"

# yarn RM http 访问端口

# if resourcemanager HA is enabled, please set the HA IPs; if resourcemanager is single node, keep this value empty

yarnHaIps="hadoop102,hadoop103"

# Yarn RM 高可用 ip,若未启用 RM 高可用,则将该值置空

# if resourcemanager HA is enabled or not use resourcemanager, please keep the default value; If resourcemanager is single node, you only need to replace 'yarnIp1' to actual resourcemanager hostname

singleYarnIp=""

# Yarn RM 主机名,若启用了 HA 或未启用 RM,保留默认值

# who has permission to create directory under HDFS/S3 root path

# Note: if kerberos is enabled, please config hdfsRootUser=

hdfsRootUser="atguigu"

# 拥有 HDFS 根目录操作权限的用户

# kerberos config

# whether kerberos starts, if kerberos starts, following four items need to config, otherwise please ignore

kerberosStartUp="false"

# kdc krb5 config file path

krb5ConfPath="$installPath/conf/krb5.conf"

# keytab username,watch out the @ sign should followd by \\

keytabUserName="hdfs-mycluster\\@ESZ.COM"

# username keytab path

keytabPath="$installPath/conf/hdfs.headless.keytab"

# kerberos expire time, the unit is hour

kerberosExpireTime="2"

# use sudo or not

sudoEnable="true"

# worker tenant auto create

workerTenantAutoCreate="false"

将必要配置文件拷贝到配置目录:

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ cp /opt/moudle/hadoop/etc/hadoop/core-site.xml conf

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ cp /opt/moudle/hadoop/etc/hadoop/hdfs-site.xml conf

初始化数据库

(1)拷贝MySQL驱动到DolphinScheduler的解压目录下的lib中,要求使用 MySQL JDBC Driver 8.0.16。

[root@hadoop102apache-dolphinscheduler-2.0.5-bin]$cp/opt/software/mysql-connector-java-8.0.16.jar lib/

(2)执行数据库初始化脚本

数据库初始化脚本位于DolphinScheduler解压目录下的script目录中,即/opt/software/ds/apache-dolphinscheduler-2.0.5-bin/script/。

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ script/create-dolphinscheduler.sh

一键部署DolphinScheduler

(1)启动Zookeeper集群

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ zk.sh start

(2)一键部署并启动DolphinScheduler

[root@hadoop102 apache-dolphinscheduler-2.0.5-bin]$ ./install.sh

(3)查看DolphinScheduler进程

--------- hadoop102 ----------

29139 ApiApplicationServer

28963 WorkerServer

3332 QuorumPeerMain

2100 DataNode

28902 MasterServer

29081 AlertServer

1978 NameNode

29018 LoggerServer

2493 NodeManager

29551 Jps

--------- hadoop103 ----------

29568 Jps

29315 WorkerServer

2149 NodeManager

1977 ResourceManager

2969 QuorumPeerMain

29372 LoggerServer

1903 DataNode

--------- hadoop104 ----------

1905 SecondaryNameNode

27074 WorkerServer

2050 NodeManager

2630 QuorumPeerMain

1817 DataNode

27354 Jps

27133 LoggerServer

(4)访问DolphinScheduler UI地址在APIServer的位置

DolphinScheduler UI地址为http://hadoop104:12345/dolphinscheduler

初始用户的用户名为:admin,密码为dolphinscheduler123

DolphinScheduler启停命令

DolphinScheduler的启停脚本均位于其安装目录的bin目录下。

1)一键启停所有服务

./bin/start-all.sh

./bin/stop-all.sh

注意同Hadoop的启停脚本进行区分。

2)启停 Master

./bin/dolphinscheduler-daemon.sh start master-server

./bin/dolphinscheduler-daemon.sh stop master-server

3)启停 Worker

./bin/dolphinscheduler-daemon.sh start worker-server

./bin/dolphinscheduler-daemon.sh stop worker-server

4)启停 Api

./bin/dolphinscheduler-daemon.sh start api-server

./bin/dolphinscheduler-daemon.sh stop api-server

5)启停 Logger

./bin/dolphinscheduler-daemon.sh start logger-server

./bin/dolphinscheduler-daemon.sh stop logger-server

6)启停 Alert

./bin/dolphinscheduler-daemon.sh start alert-server

./bin/dolphinscheduler-daemon.sh stop alert-server