Python open-source projects RestoreFormer(++): face restoration in practice with deblurring, scratch repair, and black-and-white colorization

For installing and configuring Python, Anaconda, and the runtime environment, see:

Python open-source project CodeFormer: face restoration in practice with deblurring, scratch repair, and black-and-white colorization

https://blog.csdn.net/beijinghorn/article/details/134334021

1 RESTOREFORMER

https://github.com/wzhouxiff/RestoreFormer

1.1 Updates

- 20230915 Update an online demo Huggingface Gradio

- 20230915 For a more user-friendly and comprehensive inference method, refer to our RestoreFormer++.

- 20230116 For convenience, we further upload the test datasets, including CelebA (both HQ and LQ data), LFW-Test, CelebChild-Test, and Webphoto-Test, to OneDrive and BaiduYun.

- 20221003 We provide the links to the test datasets.

- 20220924 We add the code for metrics in scripts/metrics.

1.2 Paper: RestoreFormer

This repo includes the source code of the paper: "RestoreFormer: High-Quality Blind Face Restoration from Undegraded Key-Value Pairs" (CVPR 2022) by Zhouxia Wang, Jiawei Zhang, Runjian Chen, Wenping Wang, and Ping Luo.

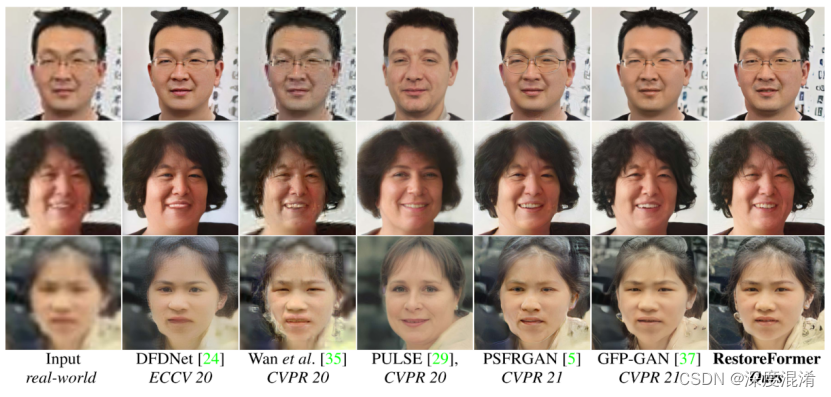

RestoreFormer explores fully-spatial attention to model contextual information and outperforms existing works that rely on local operators. It has several benefits over prior art. First, it incorporates a multi-head cross-attention layer to learn fully-spatial interactions between corrupted queries and high-quality key-value pairs. Second, the key-value pairs in RestoreFormer are sampled from a reconstruction-oriented high-quality dictionary, whose elements are rich in high-quality facial features aimed specifically at face reconstruction.
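To make the two ideas above concrete, here is a minimal pure-Python sketch, not the repository's code: each degraded feature vector is matched to its nearest entry in a high-quality dictionary (standing in for the key-value pairs), and a multi-head cross-attention lets every degraded query attend to all spatial positions of those priors. The function names are hypothetical, and the sketch assumes identity Q/K/V projections, a residual connection, and no normalization or feed-forward layer; all of these are simplifications of the real model.

```python
import math


def softmax(scores):
    # Numerically stable softmax over a list of floats.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def nearest_entry(vec, dictionary):
    # Vector quantization: pick the dictionary entry with the smallest squared L2 distance.
    return min(dictionary, key=lambda d: sum((a - b) ** 2 for a, b in zip(vec, d)))


def cross_attention(queries, keys, values, num_heads=1):
    # Multi-head cross-attention with identity projections (a simplification).
    # Queries come from the degraded face; keys/values come from the HQ priors.
    dim = len(queries[0])
    if dim % num_heads:
        raise ValueError("feature dim must be divisible by num_heads")
    hd = dim // num_heads
    out = []
    for q in queries:
        row = []
        for h in range(num_heads):
            lo, hi = h * hd, (h + 1) * hd
            # Fully-spatial: every query position is scored against every key position.
            scores = [sum(a * b for a, b in zip(q[lo:hi], k[lo:hi])) / math.sqrt(hd)
                      for k in keys]
            weights = softmax(scores)
            for c in range(lo, hi):
                row.append(sum(w * v[c] for w, v in zip(weights, values)))
        out.append(row)
    return out


def restore_step(degraded, dictionary, num_heads=1):
    # Keys and values are the dictionary entries nearest to the degraded features.
    priors = [nearest_entry(f, dictionary) for f in degraded]
    attended = cross_attention(degraded, priors, priors, num_heads)
    # Residual connection back onto the degraded features.
    return [[a + b for a, b in zip(f, o)] for f, o in zip(degraded, attended)]


if __name__ == "__main__":
    hq_dictionary = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0]]
    degraded_feats = [[0.9, 0.1, 0.2, 0.7], [0.1, 0.8, 0.9, 0.2]]
    print(restore_step(degraded_feats, hq_dictionary, num_heads=2))
```

The point of the sketch is the data flow: the queries never see high-quality pixels directly, only the dictionary entries selected for them, which is what "undegraded key-value pairs" refers to.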

1.3 Environment

python>=3.7

pytorch>=1.7.1

pytorch-lightning==1.0.8

omegaconf==2.0.0

basicsr==1.3.3.4

Warning: different versions of pytorch-lightning and omegaconf may lead to errors or different results.
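Because the warning concerns exact versions, a quick check before running anything can save debugging time. The sketch below uses the standard-library importlib.metadata (available from Python 3.8, slightly newer than the python>=3.7 floor) to compare installed distributions against the pins listed above; the PINS dictionary simply copies those pins, and find_mismatches is a hypothetical helper name.

```python
from importlib import metadata

# Exact pins copied from the environment list above.
PINS = {
    "pytorch-lightning": "1.0.8",
    "omegaconf": "2.0.0",
    "basicsr": "1.3.3.4",
}


def find_mismatches(pins, lookup=metadata.version):
    # Return {package: installed_version_or_None} for every pin that is not satisfied.
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = lookup(name)
        except metadata.PackageNotFoundError:
            installed = None  # not installed at all
        if installed != wanted:
            problems[name] = installed
    return problems


if __name__ == "__main__":
    mismatches = find_mismatches(PINS)
    if not mismatches:
        print("all pinned packages match")
    for name, installed in mismatches.items():
        print(f"{name}: expected {PINS[name]}, found {installed}")
```

The lookup parameter exists only so the check can be exercised without touching the real environment; in normal use, call find_mismatches(PINS).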

1.4 Preparation of datasets and models

1.4.1 Dataset:

Training data: both the HQ Dictionary and RestoreFormer in our work are trained on FFHQ, which can be obtained from the FFHQ repository. The original images in FFHQ are 1024x1024; we resize them to 512x512 with bilinear interpolation. Link this dataset to ./data/FFHQ/image512x512.

https://github.com/NVlabs/ffhq-dataset
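The resize-and-link step could look like the following sketch. It assumes Pillow is installed, that the 1024x1024 FFHQ images are PNG files (searched recursively, since the download may group them into numbered subfolders), and that "link this dataset" means a symbolic link; resize_ffhq and link_dataset are hypothetical helper names, not part of the repository.

```python
import os
from pathlib import Path


def resize_ffhq(src_dir, dst_dir, size=512):
    # Downscale every PNG (1024x1024 in FFHQ) to size x size with bilinear interpolation.
    from PIL import Image  # Pillow; imported lazily so link_dataset works without it

    dst = Path(dst_dir)
    dst.mkdir(parents=True, exist_ok=True)
    for src in sorted(Path(src_dir).rglob("*.png")):
        with Image.open(src) as im:
            # Flat output folder; assumes file names are unique across subfolders.
            im.resize((size, size), Image.BILINEAR).save(dst / src.name)


def link_dataset(src_dir, link_path="data/FFHQ/image512x512"):
    # Create ./data/FFHQ/image512x512 as a symlink to the resized folder.
    link = Path(link_path)
    link.parent.mkdir(parents=True, exist_ok=True)
    if not link.is_symlink() and not link.exists():
        os.symlink(Path(src_dir).resolve(), link, target_is_directory=True)
    return link
```

Usage, with hypothetical local paths: resize_ffhq("ffhq/images1024x1024", "ffhq/images512x512"), then link_dataset("ffhq/images512x512"). The same link_dataset call, with link_path="experiments", can be adapted for the pretrained models mentioned below.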

1.4.2 Test data:

CelebA-Test-HQ: OneDrive; BaiduYun(code mp9t)

https://pan.baidu.com/s/1tMpxz8lIW50U8h00047GIw?pwd=mp9t

CelebA-Test-LQ: OneDrive; BaiduYun(code 7s6h)

https://pan.baidu.com/s/1y6ZcQPCLyggj9VB5MgoWyg?pwd=7s6h

LFW-Test: OneDrive; BaiduYun(code 7fhr). Note that it was aligned with dlib.

https://pan.baidu.com/s/1UkfYLTViL8XVdZ-Ej-2G9g?pwd=7fhr

CelebChild: OneDrive; BaiduYun(code rq65)

https://pan.baidu.com/s/1pGCD4TkhtDsmp8emZd8smA?pwd=rq65

WebPhoto-Test: OneDrive; BaiduYun(code nren)

https://pan.baidu.com/s/1SjBfinSL1F-bbOpXiD0nlw?pwd=nren

Model: both the pretrained models used for training and our trained RestoreFormer model can be obtained from OneDrive or BaiduYun(code x6nn). Link these models to ./experiments.

https://pan.baidu.com/s/1EO7_1dYyCuORpPNosQgogg?pwd=x6nn

1.5 Test

sh scripts/test.sh

1.6 Training

sh scripts/run.sh

Notes:

The first stage obtains the HQ Dictionary: set conf_name in scripts/run.sh to 'HQ_Dictionary'.

The second stage is blind face restoration: set ckpt_path in config/RestoreFormer.yaml to your trained HQ_Dictionary model, and set conf_name in scripts/run.sh to 'RestoreFormer'.

Our model is trained with 4 V100 GPUs.
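Editing ckpt_path by hand between the two stages is easy to get wrong, so here is a small sketch that rewrites the value in place with a regular expression. It assumes the YAML file has a ckpt_path key on its own line (the nesting in the example string is hypothetical, not copied from the repository), and it avoids a YAML library so that comments and layout survive; set_ckpt_path and update_config_file are hypothetical helper names.

```python
import re
from pathlib import Path

# Matches an (optionally indented) "ckpt_path: <value>" line; group 1 keeps indentation and key.
_CKPT_LINE = re.compile(r"^([ \t]*ckpt_path:[ \t]*).*$", re.MULTILINE)


def set_ckpt_path(yaml_text, new_path):
    # Replace the value of every ckpt_path key; raise if none is found.
    # A function replacement is used so backslashes in Windows paths are not treated as escapes.
    updated, count = _CKPT_LINE.subn(lambda m: m.group(1) + new_path, yaml_text)
    if count == 0:
        raise ValueError("no ckpt_path key found")
    return updated


def update_config_file(config_path, new_path):
    # Read, patch and write back a config file such as config/RestoreFormer.yaml.
    path = Path(config_path)
    path.write_text(set_ckpt_path(path.read_text(), new_path))


if __name__ == "__main__":
    # Hypothetical config layout and checkpoint path, for illustration only.
    example = "model:\n  params:\n    ckpt_path: old.ckpt\n    embed_dim: 256\n"
    print(set_ckpt_path(example, "experiments/HQ_Dictionary/last.ckpt"))
```

If a config contains more than one ckpt_path key, all of them are rewritten, so check the file first.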

1.7 Metrics

sh scripts/metrics/run.sh

Note: you need to add the path of the CelebA-Test dataset to the script if you want to compute IDD, PSNR, SSIM, and LPIPS.
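Of the four metrics, PSNR is the simplest to state: PSNR = 10·log10(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images) and MSE is the mean squared error between the restored image and the ground truth. The sketch below computes it on flattened pixel lists for illustration only; the repository's metric scripts may differ in details such as color space or border cropping.

```python
import math


def psnr(restored, reference, max_val=255.0):
    # Peak signal-to-noise ratio in dB between two equally sized flat pixel lists.
    if len(restored) != len(reference) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(restored, reference)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_val ** 2 / mse)


if __name__ == "__main__":
    print(round(psnr([10, 20, 30], [11, 21, 31]), 2))  # MSE = 1 -> 48.13
```

Higher is better; an off-by-one error on every pixel already caps PSNR at about 48 dB for 8-bit images.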

1.8 Citation

@inproceedings{wang2022restoreformer,
  title={RestoreFormer: High-Quality Blind Face Restoration from Undegraded Key-Value Pairs},
  author={Wang, Zhouxia and Zhang, Jiawei and Chen, Runjian and Wang, Wenping and Luo, Ping},
  booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

1.9 Acknowledgement

We thank everyone who makes their code and models available, especially Taming Transformer, basicsr, and GFPGAN.

1.10 Contact

For any question, feel free to email wzhoux@connect.hku.hk or zhouzi1212@gmail.com.

2 RESTOREFORMER++

https://github.com/wzhouxiff/RestoreFormerPlusPlus

2.1 Updates (ToDo List)

- 20230915 Update an online demo Huggingface Gradio

- 20230915 Provide a user-friendly method for inference. It supports background super-resolution (SR) with RealESRGAN; basicsr should be upgraded to 1.4.2.

- 20230914 Upload model

- 20230914 Release code

- 20221120 Introduce the project.

2.2 Paper: RestoreFormer++

This repo is an official implementation of "RestoreFormer++: Towards Real-World Blind Face Restoration from Undegraded Key-Value Pairs".

https://arxiv.org/pdf/2308.07228.pdf

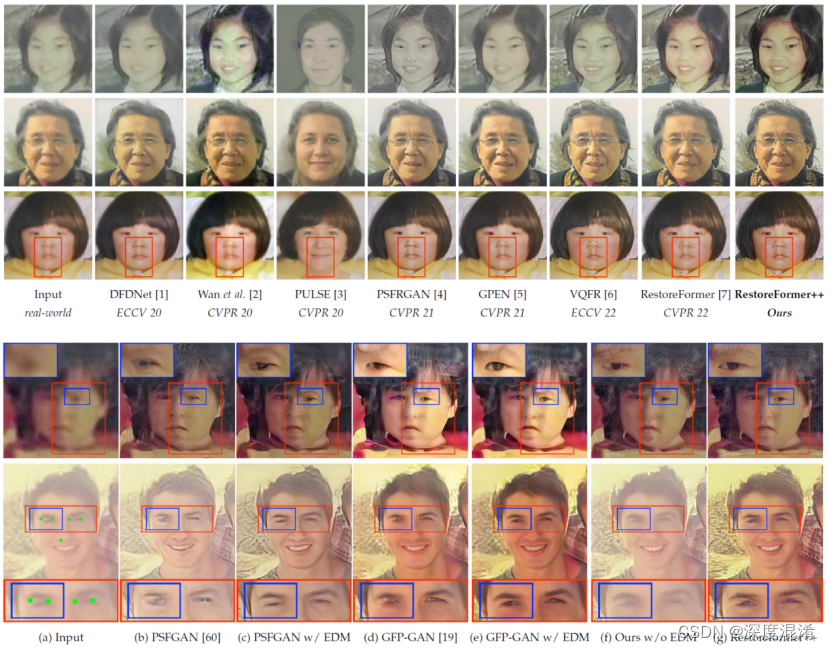

RestoreFormer++ is an extension of our RestoreFormer. It restores a degraded face image with both fidelity and realness by using powerful fully-spatial attention mechanisms to model the abundant contextual information in the face and its interplay with our reconstruction-oriented high-quality priors. In addition, it introduces an extending degrading model (EDM) that contains more realistic degraded scenarios for synthesizing training data, which helps enhance its robustness and generalization to real-world scenarios. Comparisons of our results with state-of-the-art methods, and of performance with and without EDM, are as follows:

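For background, training pairs for blind face restoration are commonly synthesized with the degradation model popularized by GFPGAN: a high-quality face y is blurred with a Gaussian kernel k_σ, downsampled by a factor r, corrupted by additive noise n_δ, and JPEG-compressed with quality q, after which the result is typically resized back to the input resolution. EDM extends this kind of pipeline with additional, more realistic degraded scenarios; its exact components are described in the RestoreFormer++ paper and are not reproduced here, so read the formula as context rather than as EDM itself.

```latex
x = \left[ \left( y \circledast k_{\sigma} \right)\downarrow_{r} + n_{\delta} \right]_{\mathrm{JPEG}_{q}}
```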

2.3 Environment

python>=3.7

pytorch>=1.7.1

pytorch-lightning==1.0.8

omegaconf==2.0.0

basicsr>=1.4.2 (previously basicsr==1.3.3.4)

realesrgan==0.3.0

Warning: different versions of pytorch-lightning and omegaconf may lead to errors or different results.


2.4 Preparation of datasets and models

Dataset:

Training data: both ROHQD and RestoreFormer++ in our work are trained on FFHQ, which can be obtained from the FFHQ repository. The original images in FFHQ are 1024x1024; we resize them to 512x512 with bilinear interpolation. Link this dataset to ./data/FFHQ/image512x512.

https://github.com/NVlabs/ffhq-dataset

Test data: CelebA-Test, LFW-Test, WebPhoto-Test, and CelebChild-Test

https://pan.baidu.com/s/1iUvBBFMkjgPcWrhZlZY2og?pwd=test

http://vis-www.cs.umass.edu/lfw/#views

https://xinntao.github.io/projects/gfpgan


Model: both the pretrained models used for training and our trained RestoreFormer and RestoreFormer++ models can be obtained from the shared folder below. Link these models to ./experiments.

https://connecthkuhk-my.sharepoint.com/:f:/g/personal/wzhoux_connect_hku_hk/EkZhGsLBtONKsLlWRmf6g7AB_VOA_6XAKmYUXLGKuNBsHQ?e=ic2LPl


2.5 Quick Inference

python inference.py -i data/aligned -o results/RF++/aligned -v RestoreFormer++ -s 2 --aligned --save

python inference.py -i data/raw -o results/RF++/raw -v RestoreFormer++ -s 2 --save

python inference.py -i data/aligned -o results/RF/aligned -v RestoreFormer -s 2 --aligned --save

python inference.py -i data/raw -o results/RF/raw -v RestoreFormer -s 2 --save

Note: the related code is borrowed from GFPGAN.

https://github.com/TencentARC/GFPGAN
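If you want to script the four commands above, for example to process several folders, a small Python helper can assemble the same argument lists. It reuses only the flags shown in those commands (-i, -o, -v, -s, --aligned, --save); build_inference_cmd is a hypothetical name, and the main block prints the commands as a dry run instead of executing them.

```python
import shlex
import sys


def build_inference_cmd(input_dir, output_dir, version="RestoreFormer++",
                        scale=2, aligned=False, save=True):
    # Mirror the quick-inference commands: python inference.py -i ... -o ... -v ... -s ...
    cmd = [sys.executable, "inference.py",
           "-i", input_dir, "-o", output_dir,
           "-v", version, "-s", str(scale)]
    if aligned:
        cmd.append("--aligned")  # inputs are already aligned face crops
    if save:
        cmd.append("--save")
    return cmd


if __name__ == "__main__":
    # The four combinations from the quick-inference section, in the same order.
    for version, short in (("RestoreFormer++", "RF++"), ("RestoreFormer", "RF")):
        for kind in ("aligned", "raw"):
            cmd = build_inference_cmd(f"data/{kind}", f"results/{short}/{kind}",
                                      version=version, aligned=(kind == "aligned"))
            # Dry run: pass cmd to subprocess.run(cmd, check=True) to execute instead.
            print(shlex.join(cmd))
```

Building an argument list rather than a single string avoids shell-quoting problems when folder names contain spaces.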

2.6 Test

sh scripts/test.sh

Contents of scripts/test.sh:

# exp_name='RestoreFormer'
exp_name='RestoreFormerPlusPlus'
root_path='experiments'
out_root_path='results'
align_test_path='data/aligned'
# unalign_test_path='data/raw'
tag='test'
outdir=$out_root_path'/'$exp_name'_'$tag
if [ ! -d $outdir ];then
    mkdir -p -m 777 $outdir
fi
CUDA_VISIBLE_DEVICES=0 python -u scripts/test.py \
    --outdir $outdir \
    -r $root_path'/'$exp_name'/last.ckpt' \
    -c 'configs/'$exp_name'.yaml' \
    --test_path $align_test_path \
    --aligned

- This codebase works for both RestoreFormer and RestoreFormerPlusPlus; select the specific model with exp_name.

- Set the model path with root_path.

- Restored results are saved in out_root_path.

- Put the degraded face images in test_path.

- If the degraded face images are aligned, set --aligned; otherwise remove it from the script. The provided test images in data/aligned are aligned, while the images in data/raw are unaligned and contain several faces.

2.7 Training

sh scripts/run.sh

Contents of scripts/run.sh:

export BASICSR_JIT=True
# For RestoreFormer
# conf_name='HQ_Dictionary'
# conf_name='RestoreFormer'
# For RestoreFormer++
# conf_name='ROHQD'
conf_name='RestoreFormerPlusPlus'
# gpus='0,1,2,3,4,5,6,7'
# node_n=1
# ntasks_per_node=8
root_path='PATH_TO_CHECKPOINTS'
gpus='0,'
node_n=1
ntasks_per_node=1
gpu_n=$(expr $node_n \* $ntasks_per_node)
python -u main.py \
    --root-path $root_path \
    --base 'configs/'$conf_name'.yaml' \
    -t True \
    --postfix $conf_name'_gpus'$gpu_n \
    --gpus $gpus \
    --num-nodes $node_n \
    --random-seed True

This codebase works for both RestoreFormer and RestoreFormerPlusPlus; select the model to train with conf_name. 'HQ_Dictionary' and 'RestoreFormer' are for RestoreFormer, while 'ROHQD' and 'RestoreFormerPlusPlus' are for RestoreFormerPlusPlus.

When training 'RestoreFormer' or 'RestoreFormerPlusPlus', set 'ckpt_path' in the corresponding config file under configs/ to the path of the trained 'HQ_Dictionary' or 'ROHQD' model, respectively.
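The two-stage order and the run.sh arguments can also be expressed as a small Python sketch, which makes the stage pairing explicit and validates conf_name before anything is launched. It reproduces only the flags that appear in scripts/run.sh; STAGES and build_train_cmd are hypothetical names, and the main block just prints the commands.

```python
import shlex
import sys

# Stage order from the text: train the dictionary first, then the restoration model.
STAGES = {
    "RestoreFormer": ("HQ_Dictionary", "RestoreFormer"),
    "RestoreFormerPlusPlus": ("ROHQD", "RestoreFormerPlusPlus"),
}


def build_train_cmd(conf_name, root_path, gpus="0,", node_n=1, ntasks_per_node=1):
    # Same arguments as scripts/run.sh; gpu_n = node_n * ntasks_per_node.
    if not any(conf_name in stages for stages in STAGES.values()):
        raise ValueError(f"unknown conf_name: {conf_name}")
    gpu_n = node_n * ntasks_per_node
    return [sys.executable, "-u", "main.py",
            "--root-path", root_path,
            "--base", f"configs/{conf_name}.yaml",
            "-t", "True",
            "--postfix", f"{conf_name}_gpus{gpu_n}",
            "--gpus", gpus,
            "--num-nodes", str(node_n),
            "--random-seed", "True"]


if __name__ == "__main__":
    # Stage 1, then stage 2 for RestoreFormer++; update ckpt_path between the two runs.
    for conf in STAGES["RestoreFormerPlusPlus"]:
        print(shlex.join(build_train_cmd(conf, "PATH_TO_CHECKPOINTS")))
```

Rejecting an unknown conf_name early is cheaper than discovering a missing configs/*.yaml after the job has been queued.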

2.8 Metrics

sh scripts/metrics/run.sh

Note: you need to add the path of the CelebA-Test dataset to the script if you want to compute IDD, PSNR, SSIM, and LPIPS.

The related metric models are in ./experiments/pretrained_models/.

2.9 Citation

@article{wang2023restoreformer++,
  title={RestoreFormer++: Towards Real-World Blind Face Restoration from Undegraded Key-Value Pairs},
  author={Wang, Zhouxia and Zhang, Jiawei and Chen, Tianshui and Wang, Wenping and Luo, Ping},
  journal={IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI)},
  year={2023}
}

@inproceedings{wang2022restoreformer,
  title={RestoreFormer: High-Quality Blind Face Restoration from Undegraded Key-Value Pairs},
  author={Wang, Zhouxia and Zhang, Jiawei and Chen, Runjian and Wang, Wenping and Luo, Ping},
  booktitle={The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

2.10 Contact

For any question, feel free to email wzhoux@connect.hku.hk or zhouzi1212@gmail.com.


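Neither codebase is well written: both are inefficient and the results are poor, and they give the impression of having been put together mainly to accompany the papers.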