[Cloud Native | Kubernetes Series] Ceph Cluster Installation and Deployment

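1. Choosing a Ceph version

Octopus (15.x) reached end of life on 2022-06-01, and a stable release whose point version is above 5 is usually preferred, so Ceph 16.2.10 (Pacific) is installed here.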

| Name | Initial release | Latest | End of life |
|---|---|---|---|
| Quincy | 2022-04-19 | 17.2.3 | 2024-06-01 |
| Pacific | 2021-03-31 | 16.2.10 | 2023-06-01 |
| Octopus | 2020-03-23 | 15.2.17 | 2022-06-01 |

2. Deployment method

Common deployment tools include:

- ceph-ansible

- ceph-salt

- ceph-container

- ceph-chef

- cephadm

- ceph-deploy (the method used in this article)

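ceph-deploy is an official, Python-based tool for deploying and managing Ceph clusters. Upstream no longer actively maintains it and does not test it on releases newer than Nautilus.

ceph-deploy was chosen because cephadm does not support releases earlier than 15.2.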

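3. Server preparation

mon: 8C/8G/200G (under 1 TB of data), 16C/16G/200G (tens of TB); physical server: 48C/96G/3 x SSD; either 1 GbE or 10 GbE networking works

mgr: 4C/8G/200G (under 1 TB of data), 16C/16G/200G (tens of TB); physical server: 36C/64G/3 x SSD; either 1 GbE or 10 GbE networking works

node:

- CPU: each OSD needs at least one core. A 24-core/48-thread server can hold at most 48 disks; 24 is recommended.

- Memory: at least 1 GB of RAM per 1 TB of data.

- Disks: SSD -> PCIe -> NVMe

- NICs: 10G -> 40G -> 100G

Operating system: Ubuntu 18.04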

| No. | Hostname | IP (public/cluster) | Role |
|---|---|---|---|
| 1 | ceph-mon01 | 192.168.31.81/172.31.31.81 | monitor node, deploy node |
| 2 | ceph-mon02 | 192.168.31.82/172.31.31.82 | monitor node |
| 3 | ceph-mon03 | 192.168.31.83/172.31.31.83 | monitor node |
| 4 | ceph-mgr01 | 192.168.31.84/172.31.31.84 | mgr node |
| 5 | ceph-mgr02 | 192.168.31.85/172.31.31.85 | mgr node |
| 6 | ceph-node01 | 192.168.31.86/172.31.31.86 | OSD node |
| 7 | ceph-node02 | 192.168.31.87/172.31.31.87 | OSD node |
| 8 | ceph-node03 | 192.168.31.88/172.31.31.88 | OSD node |
| 9 | ceph-node04 | 192.168.31.89/172.31.31.89 | OSD node |

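4. Preparing for deployment

- Time synchronization (clock skew causes Ceph errors). Root crontab entry; it uses POSIX redirections because cron runs commands with /bin/sh, and it uses the full hwclock path because cron's default PATH does not include /sbin:

*/5 * * * * /usr/sbin/ntpdate time1.aliyun.com >/dev/null 2>&1 && /sbin/hwclock -w >/dev/null 2>&1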
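To add the time-sync entry on each node without creating duplicates when the step is repeated, a small idempotent helper can be used. This is a minimal sketch that assumes bash and the standard `crontab -l` / `crontab -` interface. The function name `ensure_cron_entry` and the variable `NTP_CRON` are hypothetical.

```bash
# Hypothetical helper: append LINE to the current user's crontab only if it is missing.
ensure_cron_entry() {
  local line=$1 current
  # "crontab -l" fails when no crontab exists yet; treat that as an empty crontab.
  current=$(crontab -l 2>/dev/null || true)
  if printf '%s\n' "$current" | grep -qxF -- "$line"; then
    return 0  # entry already present, nothing to do
  fi
  {
    [ -n "$current" ] && printf '%s\n' "$current"
    printf '%s\n' "$line"
  } | crontab -
}

# Time-sync entry: ">/dev/null 2>&1" instead of the bash-only "&>", since cron uses /bin/sh.
NTP_CRON='*/5 * * * * /usr/sbin/ntpdate time1.aliyun.com >/dev/null 2>&1 && /sbin/hwclock -w >/dev/null 2>&1'
```

Run `ensure_cron_entry "$NTP_CRON"` as root on every node. The `ntpdate` package must be installed first (`apt install -y ntpdate`).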

- Add the Ceph package repository (Tsinghua mirror)

wget -q -O- 'https://mirrors.tuna.tsinghua.edu.cn/ceph/keys/release.asc' | sudo apt-key add -

sudo apt-get -y install python apt-transport-https ca-certificates curl software-properties-common

echo "deb https://mirrors.tuna.tsinghua.edu.cn/ceph/debian-pacific bionic main" | sudo tee -a /etc/apt/sources.list

sudo apt update

- Create the cephadmin user on all nodes

groupadd -r -g 2022 cephadmin && useradd -r -m -s /bin/bash -u 2022 -g 2022 cephadmin && echo cephadmin:root123 |chpasswd

- Grant the cephadmin user passwordless sudo

echo "cephadmin ALL=(ALL:ALL) NOPASSWD: ALL" >> /etc/sudoers
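Appending directly to /etc/sudoers works, but a syntax error in that file can lock everyone out of sudo. A common alternative is a drop-in file under /etc/sudoers.d that is checked with `visudo -cf` before it is installed. This is offered as an assumption, not the author's method. The function name `write_sudoers_dropin` and its directory argument are hypothetical.

```bash
# Hypothetical helper: install a NOPASSWD sudo rule for one user as a drop-in file.
# $1 = user name, $2 = target directory (defaults to /etc/sudoers.d).
write_sudoers_dropin() {
  local user=$1 dir=${2:-/etc/sudoers.d} tmp
  tmp=$(mktemp) || return 1
  printf '%s ALL=(ALL:ALL) NOPASSWD: ALL\n' "$user" > "$tmp"
  chmod 0440 "$tmp"   # sudo expects drop-in files to be read-only
  # Validate the syntax when visudo is available; never install a broken rule.
  if command -v visudo >/dev/null 2>&1 && ! visudo -cf "$tmp" >/dev/null; then
    rm -f "$tmp"
    return 1
  fi
  mv "$tmp" "$dir/$user"
}
```

Run it as root on each node, for example `write_sudoers_dropin cephadmin /etc/sudoers.d`. Ubuntu's default /etc/sudoers already includes that directory.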

- Set up passwordless SSH login between all servers

ssh-keygen

ssh-copy-id cephadmin@192.168.31.81

ssh-copy-id cephadmin@172.31.31.81

ssh-copy-id cephadmin@172.31.31.82

ssh-copy-id cephadmin@192.168.31.82

ssh-copy-id cephadmin@192.168.31.83

ssh-copy-id cephadmin@192.168.31.84

ssh-copy-id cephadmin@192.168.31.85

ssh-copy-id cephadmin@192.168.31.86

ssh-copy-id cephadmin@192.168.31.87

ssh-copy-id cephadmin@192.168.31.88

ssh-copy-id cephadmin@192.168.31.89

ssh-copy-id cephadmin@172.31.31.82

ssh-copy-id cephadmin@172.31.31.83

ssh-copy-id cephadmin@172.31.31.84

ssh-copy-id cephadmin@172.31.31.85

ssh-copy-id cephadmin@172.31.31.86

ssh-copy-id cephadmin@172.31.31.87

ssh-copy-id cephadmin@172.31.31.88

ssh-copy-id cephadmin@172.31.31.89
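The 18 `ssh-copy-id` calls above follow a fixed pattern: hosts .81 to .89 on both networks. A loop is therefore less error-prone. This sketch assumes the addressing plan from section 3. `copy_keys_to_all` and the `DRY_RUN` switch are hypothetical names, and with the default `DRY_RUN=1` the function only prints the commands.

```bash
# Push the local public key to every host on both networks (addressing per section 3).
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 actually runs ssh-copy-id.
copy_keys_to_all() {
  local net host
  for net in 192.168.31 172.31.31; do
    for host in $(seq 81 89); do
      if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "ssh-copy-id cephadmin@${net}.${host}"
      else
        ssh-copy-id "cephadmin@${net}.${host}"
      fi
    done
  done
}
```

Run `DRY_RUN=0 copy_keys_to_all` after `ssh-keygen`. Each host still asks for the cephadmin password once.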

- Hostname resolution (/etc/hosts)

127.0.0.1 localhost

172.31.31.81 ceph-mon01

172.31.31.82 ceph-mon02

172.31.31.83 ceph-mon03

172.31.31.84 ceph-mgr01

172.31.31.85 ceph-mgr02

172.31.31.86 ceph-node01

172.31.31.87 ceph-node02

172.31.31.88 ceph-node03

172.31.31.89 ceph-node04
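The hosts entries above can be generated rather than typed by hand. This is a minimal sketch that assumes the hostnames and the 172.31.31.81-89 cluster addresses from the table in section 3. `gen_ceph_hosts` is a hypothetical name.

```bash
# Print /etc/hosts lines mapping the cluster-network addresses to hostnames, in table order.
gen_ceph_hosts() {
  local ip=81 name
  for name in ceph-mon01 ceph-mon02 ceph-mon03 ceph-mgr01 ceph-mgr02 \
              ceph-node01 ceph-node02 ceph-node03 ceph-node04; do
    printf '172.31.31.%d %s\n' "$ip" "$name"
    ip=$((ip + 1))
  done
}
```

For example, run `gen_ceph_hosts | sudo tee -a /etc/hosts` once per node. Running it twice appends duplicate lines, so check the file first.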

5. Installing ceph-deploy

root@ceph-mgr01:~# apt install -y python-pip

root@ceph-mgr01:~# pip install ceph-deploy

root@ceph-mgr01:~# pip install ceph-deploy==2.0.1 -i https://mirrors.aliyun.com/pypi/simple

root@ceph-mgr01:~# ceph-deploy --version

2.0.1
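ceph-deploy 1.5.x fails against Pacific, as shown in section 10.1. Scripts can therefore check the version before doing anything else. This is a minimal sketch that assumes GNU `sort -V` for version comparison. `version_ge` and `check_ceph_deploy` are hypothetical names.

```bash
# Succeed when version $1 >= version $2 (GNU sort -V orders version strings).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# Require ceph-deploy >= 2.0.0; 1.5.x still looks for ceph-disk.
check_ceph_deploy() {
  local v
  # Python 2 argparse prints --version to stderr, so capture both streams.
  v=$(ceph-deploy --version 2>&1) || return 1
  version_ge "$v" 2.0.0
}
```

Example: `check_ceph_deploy || { echo "upgrade ceph-deploy to 2.0.1" >&2; exit 1; }`.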

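5.1 ceph-deploy subcommands

| Subcommand | Description |
|---|---|
| new | Start a new cluster and generate the config file (CLUSTER.conf) and keyring |
| install | Install packages on remote hosts |
| rgw | Deploy the Ceph RGW daemon (object storage gateway, used for object storage) |
| mgr | Deploy the Ceph MGR daemon (hosts the Ceph dashboard) |
| mon | Deploy the Ceph MON daemon (Ceph monitor) |
| mds | Deploy the Ceph MDS daemon (metadata server, used by CephFS) |
| gatherkeys | Fetch the authentication keys used to provision new nodes from the specified host |
| disk | Manage disks on remote hosts |
| osd | Prepare data disks on remote hosts and add them to the Ceph cluster as OSDs |
| admin | Push the config file and the client.admin keyring to remote hosts |
| repo | Manage package repositories on remote hosts |
| config | Copy ceph.conf to or from remote hosts |
| uninstall | Remove Ceph packages |
| purge | Remove packages and all data from remote hosts |
| purgedata | Delete Ceph data from /var/lib/ceph; this also deletes the contents of /etc/ceph |
| calamari | Install and configure a Calamari web node (Calamari is a web monitoring platform) |
| forgetkeys | Delete all authentication keyrings from the local host, including client.admin, monitor and bootstrap keys |
| pkg | Manage packages on remote hosts |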

6. Deploying the mon node

6.1 Generating the cluster configuration

mkdir ceph-cluster

cd ceph-cluster

ceph-deploy new --cluster-network 172.31.31.0/24 --public-network 192.168.31.0/24 ceph-mon01

# You may be prompted to type "yes" here

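| Option | Purpose |
|---|---|
| `--cluster-network` | Network used for data replication between OSDs |
| `--public-network` | Network used by clients to access (mount) the cluster |

This generates three files:

| File | Description |
|---|---|
| ceph.conf | Auto-generated configuration file |
| ceph-deploy-ceph.log | Initialization log |
| ceph.mon.keyring | Keyring used to authenticate communication between mon nodes |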

6.2 Initializing the nodes

Initialize a single node:

ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node01

or initialize several nodes at once:

ceph-deploy install --no-adjust-repos --nogpgcheck ceph-node01 ceph-node02 ceph-node03 ceph-node04

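# You may be prompted to type "yes" here

| Option | Meaning |
|---|---|
| `--no-adjust-repos` | Use the existing repositories; do not modify the repo files on the remote host |
| `--nogpgcheck` | Skip GPG signature verification |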

6.3 Configuring the mon node

6.3.1 Installing ceph-mon

Run on the mon node:

apt install -y ceph-mon

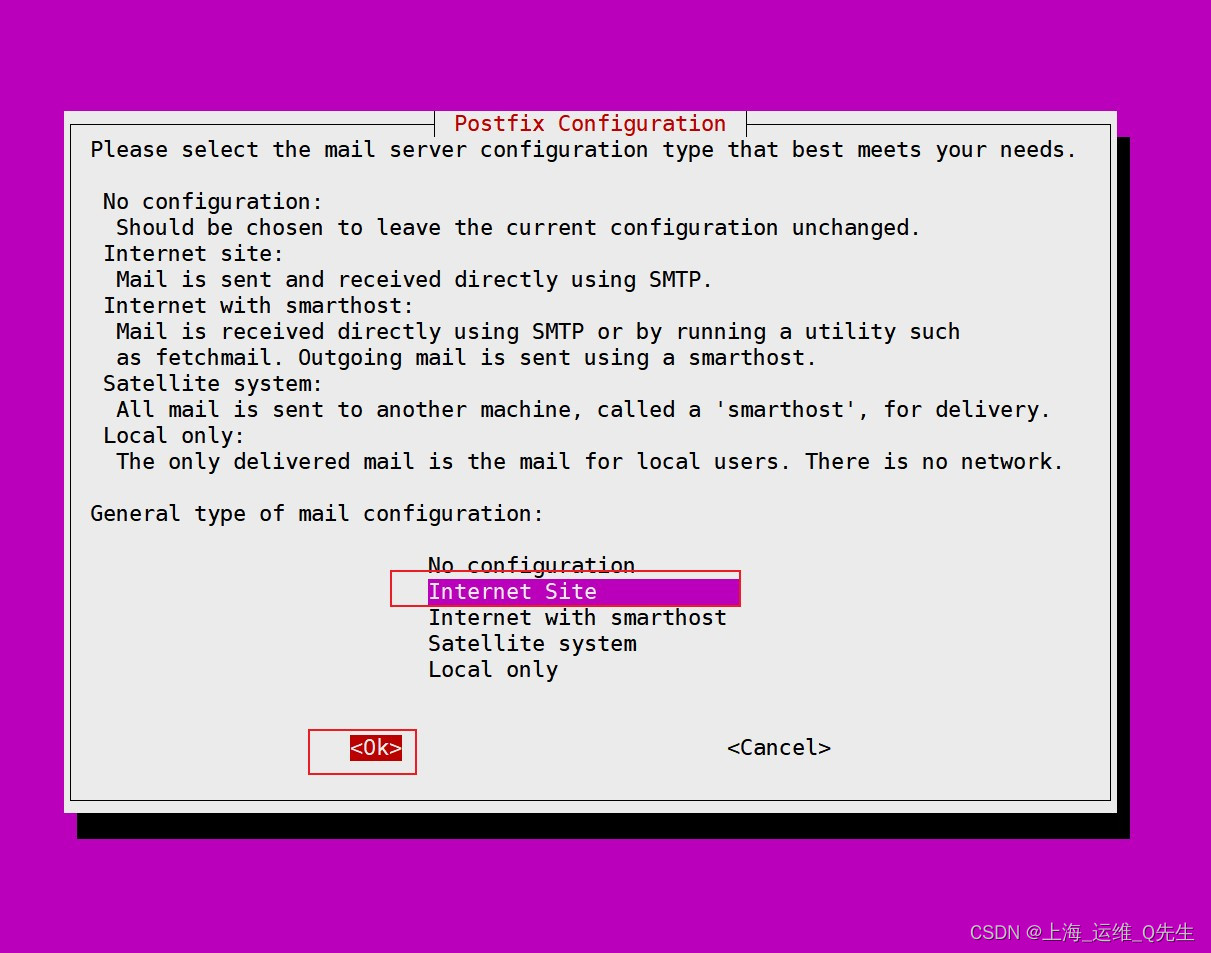

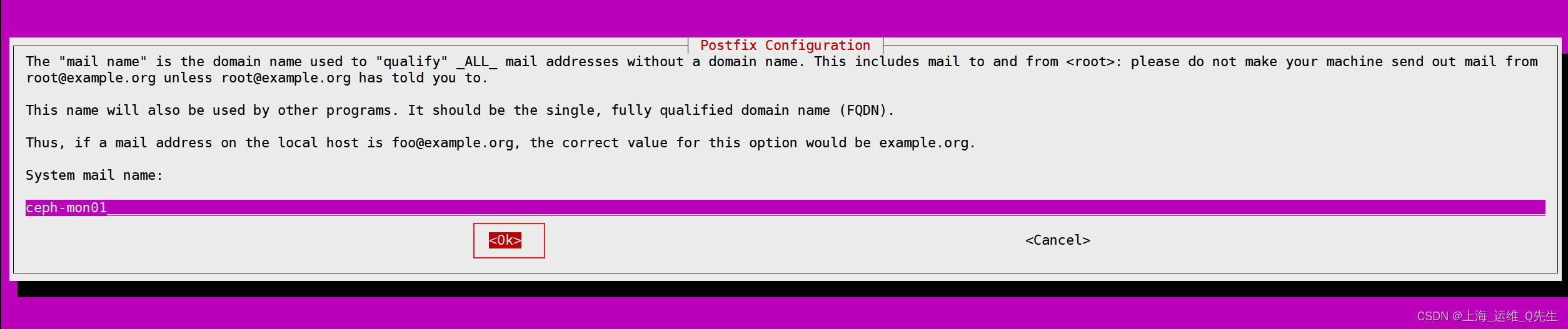

The screenshots above show what appears during the installation. Afterwards, verify the version:

root@ceph-mon01:~# ceph-mon --version

ceph version 16.2.10 (45fa1a083152e41a408d15505f594ec5f1b4fe17) pacific (stable)

6.3.2 Initializing ceph-mon

On the ceph-deploy node:

Monitor initialization is driven by ceph.conf, so check its contents before initializing:

cat ceph.conf

[global]

fsid = 86c42734-37fc-4091-b543-be6ff23e5134

public_network = 192.168.31.0/24

cluster_network = 172.31.31.0/24

mon_initial_members = ceph-mon01

mon_host = 192.168.31.81

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx
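Instead of checking ceph.conf by eye, scripts can read the values from the [global] section and compare them with the intended networks. This is a minimal sketch and not a full INI parser. It assumes one `key = value` per line, and `conf_get` is a hypothetical name.

```bash
# Print the value of KEY from the [global] section of a ceph.conf-style FILE.
# Inner spaces in keys are normalized to "_" (Ceph treats "public network" and
# "public_network" alike); comment lines and other sections are skipped.
conf_get() {
  local file=$1 key=$2
  awk -v key="$key" '
    /^[ \t]*\[/ { in_global = ($0 ~ /^[ \t]*\[global\]/); next }
    in_global && index($0, "=") > 0 && $0 !~ /^[ \t]*[#;]/ {
      k = substr($0, 1, index($0, "=") - 1)
      v = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k); gsub(/[ \t]+/, "_", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) { print v; exit }
    }' "$file"
}
```

Example check before `ceph-deploy mon create-initial`: `[ "$(conf_get ceph.conf public_network)" = 192.168.31.0/24 ] || echo "unexpected public_network"`.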

Initialize the mon node:

ceph-deploy mon create-initial

After initialization, the ceph-mon process is running on ceph-mon01:

root@ceph-mon01:~# ps -ef |grep ceph-mon

ceph 33457 1 0 20:16 ? 00:00:00 /usr/bin/ceph-mon -f --cluster ceph --id ceph-mon01 --setuser ceph --setgroup ceph

root 34130 30233 0 20:20 pts/0 00:00:00 grep --color=auto ceph-mon

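6.4 Distributing keys

From the ceph-deploy node, copy the config file and the admin keyring to every node that needs to run Ceph admin commands. Later `ceph` commands then do not have to specify the ceph-mon address and the ceph.client.admin.keyring file each time. The ceph-mon nodes also need the cluster config file and auth files.

6.4.1 Pushing the keys

Install ceph-common on every node that will manage Ceph: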

sudo apt install ceph-common -y

Then push the required files to these nodes:

ceph-deploy admin ceph-mgr01 ceph-mgr02 ceph-node01 ceph-node02 ceph-node03 ceph-node04

Grant cephadmin access to the keyring (via an ACL):

root@ceph-mgr01:~# setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring

root@ceph-mgr01:~# ssh ceph-node01 'setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring'

root@ceph-mgr01:~# ssh ceph-node02 'setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring'

root@ceph-mgr01:~# ssh ceph-node03 'setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring'

root@ceph-mgr01:~# ssh ceph-node04 'setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring'

root@ceph-mgr01:~# ssh ceph-mgr02 'setfacl -m u:cephadmin:rw /etc/ceph/ceph.client.admin.keyring'
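The per-host `setfacl` commands above differ only in the host name, so a loop is a natural fit. This sketch assumes root SSH access to each host, as in the commands above, and the `acl` package installed on every host. `grant_keyring_acl` and `DRY_RUN` are hypothetical names, and with the default `DRY_RUN=1` the commands are only printed.

```bash
# Grant cephadmin read/write on the admin keyring on every host given as an argument.
KEYRING=/etc/ceph/ceph.client.admin.keyring
grant_keyring_acl() {
  local h cmd="setfacl -m u:cephadmin:rw $KEYRING"
  for h in "$@"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "ssh $h '$cmd'"
    else
      ssh "$h" "$cmd"
    fi
  done
}
```

Usage: `DRY_RUN=0 grant_keyring_acl ceph-mgr01 ceph-mgr02 ceph-node01 ceph-node02 ceph-node03 ceph-node04`.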

6.4.2 Verifying the distribution

root@ceph-mgr01:~# ssh ceph-node01 'ls /etc/ceph/'

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpHUGujm

root@ceph-mgr01:~# ssh ceph-node02 'ls /etc/ceph/'

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpZ4VQ0R

root@ceph-mgr01:~# ssh ceph-node03 'ls /etc/ceph/'

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpx9fb5U

root@ceph-mgr01:~# ssh ceph-node04 'ls /etc/ceph/'

ceph.client.admin.keyring

ceph.conf

rbdmap

tmpAkZh_I

root@ceph-mgr01:~# ls /etc/ceph/

ceph.client.admin.keyring ceph.conf rbdmap tmpYNJpSF

7. Configuring the mgr node

7.1 Installing ceph-mgr

Install the ceph-mgr package on ceph-mgr01:

sudo apt install ceph-mgr -y

7.2 Initializing ceph-mgr

ceph-deploy mgr create ceph-mgr01

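7.3 Verifying ceph-mgr

Both mgr daemons are running: ceph-mgr01 is active and ceph-mgr02 is on standby. ceph-mgr02 is added as described in 9.2.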

ceph -s

cluster:

id: 86c42734-37fc-4091-b543-be6ff23e5134

health: HEALTH_WARN

client is using insecure global_id reclaim

mon is allowing insecure global_id reclaim

OSD count 0 < osd_pool_default_size 3

services:

mon: 1 daemons, quorum ceph-mon01 (age 49m)

mgr: ceph-mgr01(active, since 8m), standbys: ceph-mgr02

osd: 0 osds: 0 up, 0 in

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 0 B used, 0 B / 0 B avail

pgs:

8. OSD nodes

8.1 Preparing the OSD nodes

Install the Ceph packages on the OSD nodes:

ceph-deploy install ceph-node01 ceph-node02 ceph-node03 ceph-node04

A specific release can also be given:

ceph-deploy install --release pacific ceph-node01

List the disks on a node:

ceph-deploy disk list ceph-node01

Wipe a disk on a node with ceph-deploy disk zap:

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph-deploy disk zap ceph-node01 /dev/sdb

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/local/bin/ceph-deploy disk zap ceph-node01 /dev/sdb

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : zap

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7facbd1f87d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ceph-node01

[ceph_deploy.cli][INFO ] func : <function disk at 0x7facbd2336d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : ['/dev/sdb']

[ceph_deploy.osd][DEBUG ] zapping /dev/sdb on ceph-node01

[ceph-node01][DEBUG ] connection detected need for sudo

[ceph-node01][DEBUG ] connected to host: ceph-node01

[ceph-node01][DEBUG ] detect platform information from remote host

[ceph-node01][DEBUG ] detect machine type

[ceph-node01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph-node01][DEBUG ] zeroing last few blocks of device

[ceph-node01][DEBUG ] find the location of an executable

[ceph-node01][INFO ] Running command: sudo /usr/sbin/ceph-volume lvm zap /dev/sdb

[ceph-node01][WARNIN] --> Zapping: /dev/sdb

[ceph-node01][WARNIN] --> --destroy was not specified, but zapping a whole device will remove the partition table

[ceph-node01][WARNIN] Running command: /bin/dd if=/dev/zero of=/dev/sdb bs=1M count=10 conv=fsync

[ceph-node01][WARNIN] stderr: 10+0 records in

[ceph-node01][WARNIN] 10+0 records out

[ceph-node01][WARNIN] 10485760 bytes (10 MB, 10 MiB) copied, 0.024098 s, 435 MB/s

[ceph-node01][WARNIN] --> Zapping successful for: <Raw Device: /dev/sdb>

Output ending in "Zapping successful" means the wipe succeeded.

Zap every data disk on every node in the same way:

ceph-deploy disk zap ceph-node01 /dev/sdb

ceph-deploy disk zap ceph-node01 /dev/sdc

ceph-deploy disk zap ceph-node01 /dev/sdd

ceph-deploy disk zap ceph-node01 /dev/sde

ceph-deploy disk zap ceph-node02 /dev/sdb

ceph-deploy disk zap ceph-node02 /dev/sdc

ceph-deploy disk zap ceph-node02 /dev/sdd

ceph-deploy disk zap ceph-node02 /dev/sde

ceph-deploy disk zap ceph-node03 /dev/sdb

ceph-deploy disk zap ceph-node03 /dev/sdc

ceph-deploy disk zap ceph-node03 /dev/sdd

ceph-deploy disk zap ceph-node03 /dev/sde

ceph-deploy disk zap ceph-node04 /dev/sdb

ceph-deploy disk zap ceph-node04 /dev/sdc

ceph-deploy disk zap ceph-node04 /dev/sdd

ceph-deploy disk zap ceph-node04 /dev/sde
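The 16 zap commands above can be produced by a nested loop. This sketch assumes the lab layout used here (/dev/sdb to /dev/sde on ceph-node01 to ceph-node04), so adjust both lists for real hardware. Zapping is destructive, which is why the hypothetical `DRY_RUN` switch defaults to printing the commands only.

```bash
# Zap every data disk on every OSD node (destructive when DRY_RUN=0).
zap_all_disks() {
  local node disk
  for node in ceph-node01 ceph-node02 ceph-node03 ceph-node04; do
    for disk in /dev/sdb /dev/sdc /dev/sdd /dev/sde; do
      if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "ceph-deploy disk zap $node $disk"
      else
        ceph-deploy disk zap "$node" "$disk" || return 1   # stop at the first failure
      fi
    done
  done
}
```

Review the output of `zap_all_disks` first, then run `DRY_RUN=0 zap_all_disks` from the ceph-cluster directory.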

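8.2 Adding OSDs

Data types:

| Type | Description |
|---|---|
| data | Object data stored by Ceph (`--data`) |
| block-db | RocksDB data, i.e. metadata (`--block-db`) |
| block-wal | RocksDB write-ahead log (`--block-wal`) |

8.2.1 Data and metadata on the same device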

ceph-deploy osd create ceph-node01 --data /dev/sdb
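To create a collocated OSD (data, DB and WAL on one device) for each disk of a node, the command above can be wrapped in a loop. This is a sketch. `create_osds` and `DRY_RUN` are hypothetical names, and the disk names are assumed to match the lab layout.

```bash
# Create one collocated OSD per disk on NODE. Usage: create_osds NODE DISK...
create_osds() {
  local node=$1 disk
  shift
  for disk in "$@"; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      echo "ceph-deploy osd create $node --data $disk"
    else
      ceph-deploy osd create "$node" --data "$disk" || return 1
    fi
  done
}
```

For all four nodes, for example: `for n in ceph-node01 ceph-node02 ceph-node03 ceph-node04; do DRY_RUN=0 create_osds "$n" /dev/sdb /dev/sdc /dev/sdd /dev/sde; done`.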

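8.2.2 Placing them on separate devices

The WAL is usually placed on NVMe; if all disks are SSDs, there is little benefit in splitting them. The three commands below are alternative layouts for the same data disk (/dev/sdc); pick one.

Separating the write-ahead log from the data (the more common layout):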

ceph-deploy osd create ceph-node01 --data /dev/sdc --block-wal /dev/sde

Separating the data from the metadata (RocksDB):

ceph-deploy osd create ceph-node01 --data /dev/sdc --block-db /dev/sdd

Separating all three:

ceph-deploy osd create ceph-node01 --data /dev/sdc --block-db /dev/sdd --block-wal /dev/sde

8.3 Removing an OSD from RADOS

cephadmin@ceph-node01:~$ ceph osd crush remove osd.1

removed item id 1 name 'osd.1' from crush map

cephadmin@ceph-node01:~$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.00893 root default

-3 0.00099 host ceph-node01

0 hdd 0.00099 osd.0 down 0 1.00000

-5 0.00397 host ceph-node02

2 hdd 0.00099 osd.2 up 1.00000 1.00000

3 hdd 0.00099 osd.3 up 1.00000 1.00000

4 hdd 0.00099 osd.4 up 1.00000 1.00000

5 hdd 0.00099 osd.5 up 1.00000 1.00000

-7 0.00397 host ceph-node03

6 hdd 0.00099 osd.6 up 1.00000 1.00000

7 hdd 0.00099 osd.7 up 1.00000 1.00000

8 hdd 0.00099 osd.8 up 1.00000 1.00000

9 hdd 0.00099 osd.9 up 1.00000 1.00000

1 0 osd.1 down 0 1.00000

cephadmin@ceph-node01:~$ ceph osd crush remove osd.0

removed item id 0 name 'osd.0' from crush map

cephadmin@ceph-node01:~$ ceph osd tree

ID CLASS WEIGHT TYPE NAME STATUS REWEIGHT PRI-AFF

-1 0.00793 root default

-3 0 host ceph-node01

-5 0.00397 host ceph-node02

2 hdd 0.00099 osd.2 up 1.00000 1.00000

3 hdd 0.00099 osd.3 up 1.00000 1.00000

4 hdd 0.00099 osd.4 up 1.00000 1.00000

5 hdd 0.00099 osd.5 up 1.00000 1.00000

-7 0.00397 host ceph-node03

6 hdd 0.00099 osd.6 up 1.00000 1.00000

7 hdd 0.00099 osd.7 up 1.00000 1.00000

8 hdd 0.00099 osd.8 up 1.00000 1.00000

9 hdd 0.00099 osd.9 up 1.00000 1.00000

0 0 osd.0 down 0 1.00000

1 0 osd.1 down 0 1.00000
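In the tree above, `ceph osd crush remove` only took osd.0 and osd.1 out of the CRUSH map. They are still listed as `down`, and their ids and auth keys remain. A fuller removal, based on the Ceph documentation for Luminous and later, does the following: mark the OSD out, wait for recovery, stop the daemon on its host, then run `ceph osd purge`, which combines `crush remove`, `auth del` and `osd rm`. The sketch below only prints that plan for review. `osd_removal_plan` is a hypothetical name.

```bash
# Print the removal steps for OSD id $1 living on host $2; review, then run them by hand.
osd_removal_plan() {
  local id=$1 host=$2
  echo "ceph osd out osd.$id"
  echo "# wait until 'ceph -s' shows all PGs active+clean before continuing"
  echo "ssh $host sudo systemctl stop ceph-osd@$id"
  echo "ceph osd purge $id --yes-i-really-mean-it"
}
```

For example, `osd_removal_plan 1 ceph-node01` lists the steps for osd.1.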

8.4 Creating a pool

Create a pool named mypool with 32 PGs and 32 PGPs:

$ ceph osd pool create mypool 32 32

pool 'mypool' created

$ ceph osd pool ls

device_health_metrics

mypool

$ ceph pg ls-by-pool mypool|awk '{print $1,$2,$15}'

PG OBJECTS ACTING

2.0 0 [3,6,13]p3

2.1 0 [13,6,3]p13

2.2 0 [5,12,9]p5

2.3 0 [5,13,9]p5

2.4 0 [11,7,2]p11

2.5 0 [8,12,4]p8

2.6 0 [11,6,3]p11

2.7 0 [3,7,12]p3

2.8 0 [3,7,11]p3

2.9 0 [11,4,8]p11

2.a 0 [6,13,3]p6

2.b 0 [8,5,10]p8

2.c 0 [6,12,5]p6

2.d 0 [9,3,13]p9

2.e 0 [10,9,2]p10

2.f 0 [8,4,12]p8

2.10 0 [8,11,5]p8

2.11 0 [4,13,9]p4

2.12 0 [7,11,2]p7

2.13 0 [7,4,13]p7

2.14 0 [2,7,13]p2

2.15 0 [7,11,3]p7

2.16 0 [5,7,10]p5

2.17 0 [5,6,11]p5

2.18 0 [9,2,10]p9

2.19 0 [11,4,7]p11

2.1a 0 [3,8,10]p3

2.1b 0 [6,2,12]p6

2.1c 0 [8,4,12]p8

2.1d 0 [7,3,10]p7

2.1e 0 [13,7,5]p13

2.1f 0 [11,3,8]p11
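In each ACTING set above, the `pN` suffix marks the primary OSD. A small awk sketch counts how many PGs each OSD leads, which is a quick way to eyeball balance. It assumes the three-column output produced by the `awk '{print $1,$2,$15}'` filter above. `count_primaries` is a hypothetical name.

```bash
# Count primary PGs per OSD from "PG OBJECTS ACTING" lines on stdin, sorted by OSD id.
count_primaries() {
  awk '$3 ~ /p[0-9]+$/ {
         p = $3
         sub(/.*p/, "", p)        # "[3,6,13]p3" -> "3"
         n[p]++
       }
       END { for (o in n) print "osd." o, n[o] }' | sort -t. -k2,2n
}
```

Usage: `ceph pg ls-by-pool mypool | awk '{print $1,$2,$15}' | count_primaries`. The header line is skipped because its third column has no `pN` suffix.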

8.5 Uploading a file

Upload the local file /home/cephadmin/ceph-cluster/ceph.conf into mypool as an object named ceph.conf:

$ sudo rados put ceph.conf /home/cephadmin/ceph-cluster/ceph.conf --pool=mypool

$ rados ls --pool=mypool

ceph.conf

Show how the object is mapped:

$ ceph osd map mypool ceph.conf

osdmap e98 pool 'mypool' (2) object 'ceph.conf' -> pg 2.d52b66c4 (2.4) -> up ([11,7,2], p11) acting ([11,7,2], p11)

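The mapping works as follows. In pool 'mypool' (pool id 2), the object 'ceph.conf' hashes to 2.d52b66c4, which falls into PG 2.4 (the row `2.4 0 [11,7,2]p11` in the list above). Both the up set and the acting set are [11,7,2], and osd.11 is the primary (p11).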
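The step from `2.d52b66c4` to PG `2.4` can be checked by hand. The hex part is the object's hash, and Ceph folds it into the pool's pg_num with its "stable mod" rule. For a power-of-two pg_num such as 32, this reduces to the low bits: 0xd52b66c4 & 31 = 4. Below is a bash reimplementation of that rule for illustration, assuming Ceph's `ceph_stable_mod` logic. `pg_from_hash` is a hypothetical name.

```bash
# Map an object hash to a PG number the way Ceph's stable mod does.
# Usage: pg_from_hash HASH PG_NUM   (HASH may be given in hex, e.g. 0xd52b66c4)
pg_from_hash() {
  local hash=$(( $1 )) pg_num=$2 mask=1
  # Smallest all-ones bit mask covering pg_num - 1 (31 for pg_num = 32).
  while (( mask < pg_num - 1 )); do mask=$(( (mask << 1) | 1 )); done
  if (( (hash & mask) < pg_num )); then
    echo $(( hash & mask ))
  else
    echo $(( hash & (mask >> 1) ))   # fold values beyond pg_num into the lower half
  fi
}
```

`pg_from_hash 0xd52b66c4 32` prints 4, matching `(2.4)` in the `ceph osd map` output. The fold branch only matters when pg_num is not a power of two.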

8.6 Downloading and deleting a file

Download:

$ rados get ceph.conf --pool=mypool /home/cephadmin/test.conf

$ cat /home/cephadmin/test.conf

[global]

fsid = 86c42734-37fc-4091-b543-be6ff23e5134

public_network = 192.168.31.0/24

cluster_network = 172.31.31.0/24

mon_initial_members = ceph-mon01

mon_host = 192.168.31.81

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

Delete:

$ rados rm ceph.conf --pool=mypool

$ rados ls --pool=mypool

9. Ceph high availability

9.1 Installing ceph-mon

Install the ceph-mon package on each new node first:

apt install ceph-mon -y

9.1.2 Adding the new nodes from the ceph-deploy node

ceph-deploy mon add ceph-mon02

ceph-deploy mon add ceph-mon03

Afterwards, ceph -s shows three mon daemons:

ceph -s

services:

mon: 3 daemons, quorum ceph-mon01,ceph-mon02,ceph-mon03 (age 1.61575s)

mgr: ceph-mgr01(active, since 45m), standbys: ceph-mgr02

osd: 14 osds: 12 up (since 39m), 12 in (since 39m)

Check the mon status:

There are three nodes, ceph-mon01, ceph-mon02 and ceph-mon03, and ceph-mon01 is the leader.

$ ceph quorum_status --format json-pretty

{

"election_epoch": 12,

"quorum": [

0,

1,

2

],

"quorum_names": [

"ceph-mon01",

"ceph-mon02",

"ceph-mon03"

],

"quorum_leader_name": "ceph-mon01",

"quorum_age": 111,

"features": {

"quorum_con": "4540138297136906239",

"quorum_mon": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

]

},

"monmap": {

"epoch": 3,

"fsid": "86c42734-37fc-4091-b543-be6ff23e5134",

"modified": "2022-09-15T05:02:22.038944Z",

"created": "2022-09-14T12:15:59.692998Z",

"min_mon_release": 16,

"min_mon_release_name": "pacific",

"election_strategy": 1,

"disallowed_leaders: ": "",

"stretch_mode": false,

"tiebreaker_mon": "",

"features": {

"persistent": [

"kraken",

"luminous",

"mimic",

"osdmap-prune",

"nautilus",

"octopus",

"pacific",

"elector-pinging"

],

"optional": []

},

"mons": [

{

"rank": 0,

"name": "ceph-mon01",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.31.81:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.31.81:6789",

"nonce": 0

}

]

},

"addr": "192.168.31.81:6789/0",

"public_addr": "192.168.31.81:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 1,

"name": "ceph-mon02",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.31.82:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.31.82:6789",

"nonce": 0

}

]

},

"addr": "192.168.31.82:6789/0",

"public_addr": "192.168.31.82:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

},

{

"rank": 2,

"name": "ceph-mon03",

"public_addrs": {

"addrvec": [

{

"type": "v2",

"addr": "192.168.31.83:3300",

"nonce": 0

},

{

"type": "v1",

"addr": "192.168.31.83:6789",

"nonce": 0

}

]

},

"addr": "192.168.31.83:6789/0",

"public_addr": "192.168.31.83:6789/0",

"priority": 0,

"weight": 0,

"crush_location": "{}"

}

]

}

}
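With three monitors, as in the quorum above, the cluster keeps working if one monitor is lost, because monitors need a strict majority to form a quorum. The arithmetic below is a small illustrative sketch (`quorum_size` and `mon_failures_tolerated` are hypothetical names). It also shows why an even monitor count adds no tolerance: four monitors still survive only one failure.

```bash
# Monitors needed for a strict majority out of n.
quorum_size() { echo $(( $1 / 2 + 1 )); }
# How many of n monitors can fail while a majority remains reachable.
mon_failures_tolerated() { echo $(( ($1 - 1) / 2 )); }
```

For example, `quorum_size 3` prints 2, and `mon_failures_tolerated 5` prints 2.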

9.2 Installing ceph-mgr

9.2.1 Installing the ceph-mgr package

Install the ceph-mgr package on ceph-mgr02:

sudo apt install ceph-mgr -y

9.2.2 Initializing ceph-mgr

ceph-deploy mgr create ceph-mgr02

9.2.3 ceph-mgr active/standby roles

ceph-mgr01 is active and ceph-mgr02 is the standby:

ceph -s

mgr: ceph-mgr01(active, since 52m), standbys: ceph-mgr02

10. Troubleshooting

10.1 ceph-deploy disk list fails

Error output:

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph-deploy disk list ceph-node01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (1.5.38): /usr/bin/ceph-deploy disk list ceph-node01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7f28658bb9b0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] func : <function disk at 0x7f2865d2b0d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph_deploy.cli][INFO ] disk : [('ceph-node01', None, None)]

[ceph-node01][DEBUG ] connection detected need for sudo

[ceph-node01][DEBUG ] connected to host: ceph-node01

[ceph-node01][DEBUG ] detect platform information from remote host

[ceph-node01][DEBUG ] detect machine type

[ceph-node01][DEBUG ] find the location of an executable

[ceph_deploy.osd][INFO ] Distro info: Ubuntu 18.04 bionic

[ceph_deploy.osd][DEBUG ] Listing disks on ceph-node01...

[ceph-node01][DEBUG ] find the location of an executable

[ceph_deploy][ERROR ] ExecutableNotFound: Could not locate executable 'ceph-disk' make sure it is installed and available on ceph-node01

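Fix

Upgrading ceph-deploy from 1.5.38 to 2.0.1 resolved the error. ceph-deploy 1.5.x calls the ceph-disk tool, which was removed in Nautilus (14.x) in favor of ceph-volume, and 2.0.x uses ceph-volume instead. The 1.5.38 copy was the distribution package (/usr/bin/ceph-deploy). The pip-installed 2.0.1 goes to /usr/local/bin, which comes first in the default PATH.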

root@ceph-mgr01:~# apt install -y python-pip

root@ceph-mgr01:~# pip install ceph-deploy

root@ceph-mgr01:~# pip install ceph-deploy==2.0.1 -i https://mirrors.aliyun.com/pypi/simple

root@ceph-mgr01:~# ceph-deploy --version

2.0.1

Result:

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph-deploy disk list ceph-node01

[ceph_deploy.conf][DEBUG ] found configuration file at: /home/cephadmin/.cephdeploy.conf

[ceph_deploy.cli][INFO ] Invoked (2.0.1): /usr/local/bin/ceph-deploy disk list ceph-node01

[ceph_deploy.cli][INFO ] ceph-deploy options:

[ceph_deploy.cli][INFO ] username : None

[ceph_deploy.cli][INFO ] verbose : False

[ceph_deploy.cli][INFO ] debug : False

[ceph_deploy.cli][INFO ] overwrite_conf : False

[ceph_deploy.cli][INFO ] subcommand : list

[ceph_deploy.cli][INFO ] quiet : False

[ceph_deploy.cli][INFO ] cd_conf : <ceph_deploy.conf.cephdeploy.Conf instance at 0x7efdde74e7d0>

[ceph_deploy.cli][INFO ] cluster : ceph

[ceph_deploy.cli][INFO ] host : ['ceph-node01']

[ceph_deploy.cli][INFO ] func : <function disk at 0x7efdde7896d0>

[ceph_deploy.cli][INFO ] ceph_conf : None

[ceph_deploy.cli][INFO ] default_release : False

[ceph-node01][DEBUG ] connection detected need for sudo

[ceph-node01][DEBUG ] connected to host: ceph-node01

[ceph-node01][DEBUG ] detect platform information from remote host

[ceph-node01][DEBUG ] detect machine type

[ceph-node01][DEBUG ] find the location of an executable

[ceph-node01][INFO ] Running command: sudo fdisk -l

[ceph-node01][INFO ] Disk /dev/nvme0n1: 2 GiB, 2147483648 bytes, 4194304 sectors

[ceph-node01][INFO ] Disk /dev/nvme0n2: 2 GiB, 2147483648 bytes, 4194304 sectors

[ceph-node01][INFO ] Disk /dev/sda: 40 GiB, 42949672960 bytes, 83886080 sectors

[ceph-node01][INFO ] Disk /dev/sdb: 1 GiB, 1073741824 bytes, 2097152 sectors

[ceph-node01][INFO ] Disk /dev/sdc: 1 GiB, 1073741824 bytes, 2097152 sectors

[ceph-node01][INFO ] Disk /dev/sdd: 1 GiB, 1073741824 bytes, 2097152 sectors

[ceph-node01][INFO ] Disk /dev/sde: 1 GiB, 1073741824 bytes, 2097152 sectors

[ceph-node01][INFO ] Disk /dev/mapper/ubuntu--vg-ubuntu--lv: 20 GiB, 21474836480 bytes, 41943040 sectors

10.2 Clearing the "mon is allowing insecure global_id reclaim" warning

Warning:

cephadmin@ceph-mgr01:~/ceph-cluster$ ceph -s

cluster:

id: 86c42734-37fc-4091-b543-be6ff23e5134

health: HEALTH_WARN

client is using insecure global_id reclaim

mon is allowing insecure global_id reclaim

services:

mon: 1 daemons, quorum ceph-mon01 (age 4h)

mgr: ceph-mgr01(active, since 12h), standbys: ceph-mgr02

osd: 10 osds: 10 up (since 6s), 10 in (since 14s)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 1.0 GiB used, 9.9 GiB / 11 GiB avail

pgs:

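Cause:

The mons still allow clients to reclaim their global_id in the insecure way, which is the issue addressed by the CVE-2021-20288 fix. Disabling it is safe once all clients run patched versions; unpatched clients will no longer be able to connect.

Fix: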

ceph config set mon auth_allow_insecure_global_id_reclaim false

ceph -s

cluster:

id: 86c42734-37fc-4091-b543-be6ff23e5134

health: HEALTH_OK

services:

mon: 1 daemons, quorum ceph-mon01 (age 4h)

mgr: ceph-mgr01(active, since 13h), standbys: ceph-mgr02

osd: 10 osds: 10 up (since 17m), 10 in (since 17m)

data:

pools: 0 pools, 0 pgs

objects: 0 objects, 0 B

usage: 1.0 GiB used, 9.9 GiB / 11 GiB avail

pgs:

10.3 ceph-deploy fails with a config error

Error:

[ceph_deploy][ERROR ] ConfigError: Cannot load config: [Errno 2] No such file or directory: 'ceph.conf'; has `ceph-deploy new` been run in this directory?

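Fix:

The command was run from the wrong directory. Change into the directory that contains ceph.conf (here ~/ceph-cluster) and run it again.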
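A small wrapper can catch this before ceph-deploy runs. This is a sketch: `cdeploy` is a hypothetical name. It lets `ceph-deploy new` through, because `new` is the subcommand that creates ceph.conf.

```bash
# Run ceph-deploy only from a directory that holds ceph.conf (except for "new").
cdeploy() {
  if [ "${1:-}" != new ] && [ ! -f ./ceph.conf ]; then
    echo "ceph.conf not found in $PWD; cd into the cluster directory first" >&2
    return 1
  fi
  ceph-deploy "$@"
}
```

Example: `cd ~/ceph-cluster && cdeploy disk list ceph-node01`.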